Transcribing Interviews


Revision as of 15:32, 19 September 2023

In short: This entry revolves around Transcription, which is the reconstruction of recorded audio into written text for the analysis of Interviews. For more on Interview Methodology, please refer to the Interview overview page.


What is Transcription?

Transcription is not a scientific method in itself, but an important step between conducting Interviews and the Coding process and subsequent analysis. Transcription entails writing down what has been said in an Interview based on video or audio recordings, and it has become common practice in qualitative research (1). The transcription process is an analytical and interpretative act, which influences how the transcript represents what has actually been said or done by the interviewee(s) (1, 3). A well-conceived transcription process will support the analysis, whereas a poor transcription may lead to an omission or distortion of important data. This entry covers important elements of the transcription process. For more details on Interviews and the subsequent analysis of the transcripts, please refer to Interviews and Content Analysis.

Transcriptions are mostly relevant for qualitative Interview approaches, such as Open Interviews, Semi-structured Interviews, Narrative Interviews, Ethnographic Interviews or Focus Groups. They may also be relevant for Video Research, or serve as a source for supplementary data when conducting Surveys. Transcripts enable researchers to analyze what interviewees or observed individuals said in a given situation without the risk of missing crucial content. Knowing that the data will be available later on allows the researcher to engage with the Interview situation instead of having to write everything down right away. Recorded material can be revisited and transcribed long after the data gathering process.

Denaturalized and naturalized transcription

In a denaturalized approach, the recorded speech is written down word by word. Denaturalized transcription revolves mostly around the informational content of the interviewee's speech. Denaturalism "(...) suggests that within speech are meanings and perceptions that construct our reality" (Oliver et al. 2005, p.1274). This approach is more about what is said than how it is said, and is mostly relevant to research interested in how people conceive and communicate their world, for example in Grounded Theory research (although there are exceptions) (Oliver et al. 2005, p.1278).

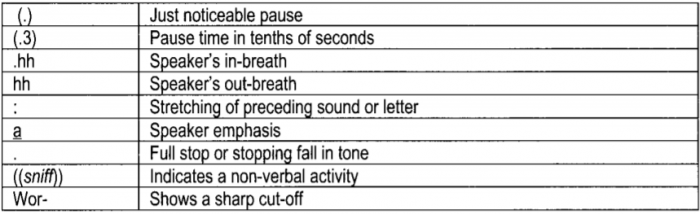

Naturalized transcription is as detailed as possible, including stutters, pauses and other idiosyncratic elements of speech. It attempts to provide more details and a more 'realistic' representation of the interviewee's speech. The idea is to reduce the loss of information, be more 'objective' and true to the interviewee(s), and thus have the researcher impose fewer assumptions. Naturalized transcription is, for example, of interest when a conversation between individuals is recorded (in a group interview, or a Focus Group), and the way in which these individuals interact with each other is of interest (overlapping talk, turn-taking etc.) (Oliver et al. 2005, p.1275). Grammatical or spelling mistakes are not corrected in the transcript for naturalistic transcription (1). Also, verbal cues that accompany the spoken word, such as dialects, increasing or decreasing volume, specific emphases on individual words, or pauses, may yield further insights for the researchers. For this purpose, the transcript may include textual symbols that convey information beyond the words themselves.

Naturalized transcription concerns itself more with how individuals speak and converse. However, the researcher needs to weigh whether this level of detail is necessary for the specific research intent, since it leads to a considerable increase in transcription effort.
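The difference between the two approaches can be illustrated with a minimal Python sketch that reduces a naturalized line to a denaturalized one. The notation used here (`(.)` and `(2.0)` for pauses, square brackets for vocalizations, a trailing hyphen for a cut-off word) is a hypothetical convention chosen only for this illustration and is not taken from the cited literature; a real project would define its own symbols.

```python
import re

# Hypothetical notation, assumed only for this sketch:
#   (.) or (2.0)   untimed or timed pause
#   [laughs]       involuntary vocalization
#   um, uh, er     filler words
#   I- I           cut-off word that is immediately repeated
PAUSE = re.compile(r"\(\.\)|\(\d+(?:\.\d+)?\)")
VOCALIZATION = re.compile(r"\[[^\]]*\]")
FILLER = re.compile(r"\b(?:er|uh|um)\b,?", re.IGNORECASE)
STUTTER = re.compile(r"\b(\w+)-\s*(?=\1\b)", re.IGNORECASE)


def denaturalize(line: str) -> str:
    """Strip the assumed naturalized markers and tidy up the whitespace."""
    for pattern in (PAUSE, VOCALIZATION, FILLER, STUTTER):
        line = pattern.sub("", line)
    line = re.sub(r"\s+([,.?!])", r"\1", line)  # no space before punctuation
    return re.sub(r"\s{2,}", " ", line).strip()


naturalized = "Well, um, I- I think (.) it was [laughs] fine (2.0) really."
print(denaturalize(naturalized))
```

The sketch also shows why the choice matters: everything the function deletes is information that a naturalized analysis might have needed.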

Normativity of transcriptions

No matter the approach to transcription, it is important to acknowledge that any transcript is a re-construction of the Interview done by the researcher. As Skukauskaite (2012, p.24) highlights: "Transcribing (...) is a form of analysis that is shaped by the researchers' examined and unexamined theories and assumptions, ideological and ethical stances, relationships with participants, and the research communities of which one is a member". Researchers who base their analysis on Interview transcripts need to acknowledge the influence they thereby exert on the data in the subsequent analysis and interpretation. A lack of reflexivity in this regard may distort the research results, and impede interpretation, especially across different research communities. Oliver et al. (2005) therefore suggest that researchers reflect upon the chosen approach and the challenges that emerge from it.

Likewise, Skukauskaite (2012, p.25) calls for more transparent information on the construction of transcripts in research publications: "What we can learn and know about human activity and interaction depends on how we use language data and what choices we make of how to turn audio (and/or video) records into written texts, what to represent and not represent, and how to represent it. Given that there is no single way of transcribing, making transparent transcribing decisions and theories guiding those decisions, can provide grounded warrants for claims researchers make about observed (and recorded) human actions and interactions." Therefore, proper documentation of the chosen approach, the underlying rationale, and the challenges that were recognized is advisable. Also, asking peers to assess the transcript, or attempting member checking - i.e., having interviewees check the transcripts for accuracy - may improve the transcript to this end (1).

Challenges in the transcription process

Oliver et al. (2005) list a range of challenges in the translation of recorded speech to text. These include technical issues, such as bad recording quality. Further, transcribers may misinterpret pronunciation, slang or accents, and write down incorrect words. Also, involuntary vocalizations, such as coughing or laughing, may provide additional information to supplement speech, but may equally carry no meaning, and it can be hard to decide which is the case. Lastly, non-verbal cues such as waving or gesticulations may add information to the spoken word, but are only analyzable if recorded on video and/or noted down during the interview, and subsequently added to the transcript.

The act of transcription may prove cumbersome. Typically, transcribing a recording takes between 5 and 10 times as long as the Interview itself, although this may differ between researchers and depending on the transcription approach. In any case, the time needed for transcription needs to be factored into the research schedule. Further, long sessions of transcribing Interviews are exhausting, and may lead to inaccuracies in the transcripts produced later on. Researchers should therefore consider transcribing Interviews right after they were conducted, if possible.
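To factor this into a research schedule, the rule of thumb can be turned into a small calculation. The following Python sketch is only an illustration: the function name and the example project (12 interviews of 45 minutes each) are hypothetical, and the factors of 5 and 10 are the range stated above, not measured values.

```python
from typing import Tuple


def transcription_hours(interview_minutes: float,
                        low_factor: float = 5.0,
                        high_factor: float = 10.0) -> Tuple[float, float]:
    """Return a (lower, upper) estimate of transcription time in hours."""
    if interview_minutes < 0:
        raise ValueError("interview_minutes must not be negative")
    lower = interview_minutes * low_factor / 60
    upper = interview_minutes * high_factor / 60
    return lower, upper


# Hypothetical project: 12 interviews of 45 minutes each
total_minutes = 12 * 45
low, high = transcription_hours(total_minutes)
print(f"Plan for roughly {low:.0f} to {high:.0f} hours of transcription.")
```

For this hypothetical project the estimate is 45 to 90 hours, which illustrates why transcription needs its own slot in the schedule rather than being treated as a side task.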

For larger research projects, with several interviews conducted possibly in different regions, there will typically be more than one transcriber, and the transcriber may not always be the researcher. To maintain accurate and comparable transcripts that are readable across transcribers, the approach to transcribing should therefore be defined and discussed prior to the process, and again whenever issues emerge. This includes a clear specification of what is important, what is not, and how to deal with specific data (Oliver et al. 2005).

Technical tips

The most crucial point for a smooth transcription is the quality of recording. The researcher should pay great attention to producing a high quality recording during the interview situation, or later in working with the recorded material, to save time in the transcription process. This includes, for example, proper handling of the recording device, avoiding or filtering out background noises, or, if necessary, asking interviewees to repeat phrases that were not understandable during the interview itself.

Generally, it is advisable to transcribe data not too long after gathering it, so that memory can help recall phrases that are not understandable in the recording. However, a time lag between recording and transcription can help researchers distance themselves from the interview situation and allow for greater objectivity in listening. If the data gathering took place long before the transcription, it is advisable for the transcriber to listen to the complete recording again before starting the transcription in order to recall the conversation as a whole, and to be able to pay attention to details and the informational content, which can be helpful to properly create the transcript (1).

When transcribing, it is advisable to play the recording at a slower speed and type simultaneously - the author of this entry has had good experiences with a 0.7x speed for denaturalistic transcription. However, the preferable speed depends on how fast the transcriber can type (see Speed Typing), and while slower replays of the recordings will extend the transcription process, a quicker replay is more prone to mistakes and misunderstandings. This is especially relevant for fast speaking interviewees, heavily accented speech, or naturalistic transcription approaches, where more time will be needed to perceive all details. The replay should be done using a device or software that can easily be accessed, like a phone, recording device, or second screen on the computer, because it may be necessary to replay sections of the recording several times. To simplify this, a single skip back should be possible at the press of a button, and set to a suitable time span, e.g. 2 seconds.
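The effect of the playback speed on the time needed for one pass through a recording, and the behaviour of a skip-back button, can be sketched in a few lines of Python. This is an illustration only: the function names are hypothetical, the defaults of 0.7x and 2 seconds are the values mentioned above, and actual playback would be handled by the chosen device or software.

```python
def playback_minutes(recording_minutes: float, speed: float = 0.7) -> float:
    """Time for one uninterrupted pass through the recording at a given speed."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    return recording_minutes / speed


def skip_back(position_seconds: float, span_seconds: float = 2.0) -> float:
    """New playback position after one skip back, never before the start."""
    return max(0.0, position_seconds - span_seconds)


# A 60-minute recording at 0.7x takes about 85.7 minutes for a single pass.
print(round(playback_minutes(60), 1))
# Skipping back from 10 seconds lands at 8 seconds.
print(skip_back(10.0))
```

The single pass is only a lower bound, since every skip back adds replayed material on top of it.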

While typing, the text may be structured and punctuation may be added to improve legibility, although the impact this has on the transcript should be acknowledged. The same is true for the use of paragraphs and line-breaks in the text, which are advisable for turn-by-turn dialogic interactions or longer monologues, but also influence the way the expressed arguments are represented in the written text. To allow for quotations, the lines of the transcript should be numbered or time codes should be added at the end of each paragraph (2). A transcript template may be advisable to help standardize the transcripts (1).
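Where the transcription software does not number lines or insert time codes itself, both can be added with a short script. The following Python sketch is a minimal example under stated assumptions: the function names are hypothetical, the speaker labels `I:` and `B:` are placeholders, and the `#hh:mm:ss#` time code format is an assumed convention for this illustration rather than a prescribed standard.

```python
def number_lines(transcript: str, start: int = 1) -> str:
    """Prefix every line with a right-aligned line number for quoting."""
    lines = transcript.splitlines()
    width = len(str(start + len(lines) - 1))
    return "\n".join(
        f"{number:>{width}}  {line}"
        for number, line in enumerate(lines, start)
    )


def format_timecode(seconds: float) -> str:
    """Format a position in the recording as #hh:mm:ss# (assumed format)."""
    hours, rest = divmod(int(seconds), 3600)
    minutes, secs = divmod(rest, 60)
    return f"#{hours:02d}:{minutes:02d}:{secs:02d}#"


def add_timecode(paragraph: str, end_seconds: float) -> str:
    """Append the time code of the paragraph's end to the paragraph."""
    return f"{paragraph.rstrip()} {format_timecode(end_seconds)}"


print(number_lines("I: How was it?\nB: Fine."))
print(add_timecode("B: Fine.", 83))
```

A quotation can then be referenced by interview code and line number, or by the time code, so that readers can locate it in the transcript or the recording.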

Programs such as f4transkript or the transcription function of MAXQDA offer a range of settings to easily handle several of the above-mentioned aspects. Autotranscription programs can also be used, albeit only for the denaturalistic approach. However, the time saved is limited because training the program for voice recognition takes time, and transcription accuracy still has to be validated and corrected by hand.

For ethical reasons, the identity of the interviewee should be protected in the transcribed text by assigning a number, pseudonym or code to the interview (1). This should be done in a way that supports easy data management and file access. If member checking is planned, the transcriber should leave space in the transcript file for potential comments (1).
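Assigning codes consistently across several transcripts is easier with a small codebook. The Python sketch below is a minimal illustration, not a complete anonymization procedure: the function names, the `P01` code scheme and the example names are hypothetical, and indirect identifiers (places, employers, events) would still need to be checked by hand.

```python
import re
from typing import Dict, Iterable


def build_codebook(names: Iterable[str], prefix: str = "P") -> Dict[str, str]:
    """Assign each distinct name a code such as P01, P02, in order of appearance."""
    codebook: Dict[str, str] = {}
    for name in names:
        if name not in codebook:
            codebook[name] = f"{prefix}{len(codebook) + 1:02d}"
    return codebook


def pseudonymize(text: str, codebook: Dict[str, str]) -> str:
    """Replace every known name in the text with its code."""
    # Longer names first, so a short name never replaces part of a longer one.
    for name in sorted(codebook, key=len, reverse=True):
        text = re.sub(rf"\b{re.escape(name)}\b", codebook[name], text)
    return text


# Hypothetical interviewees, used only for illustration
codebook = build_codebook(["Anna Schmidt", "Ben Okafor"])
print(pseudonymize("Anna Schmidt: I met Ben Okafor there.", codebook))
```

The codebook itself links codes to real identities, so it should be stored separately from the transcripts and with restricted access.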

References

(1) Widodo, H.P. 2014. Methodological Considerations in Interview Data Transcription. International Journal of Innovation in English Language 3(1). 101-107.

(2) Dresing, T., Pehl, T. 2015. Praxisbuch Interview, Transkription & Analyse. Anleitungen und Regelsysteme für qualitativ Forschende. 6th edition.

(3) Skukauskaite, A. 2012. Transparency in Transcribing: Making Visible Theoretical Bases Impacting Knowledge Construction from Open-Ended Interview Records. Forum Qualitative Social Research 13(1), Art. 14.

(4) Oliver, D.G., Serovich, J.M., Mason, T.L. 2005. Constraints and Opportunities with Interview Transcription: Towards Reflection in Qualitative Research. Social Forces 84(2). 1273-1289.

The author of this entry is Christopher Franz.