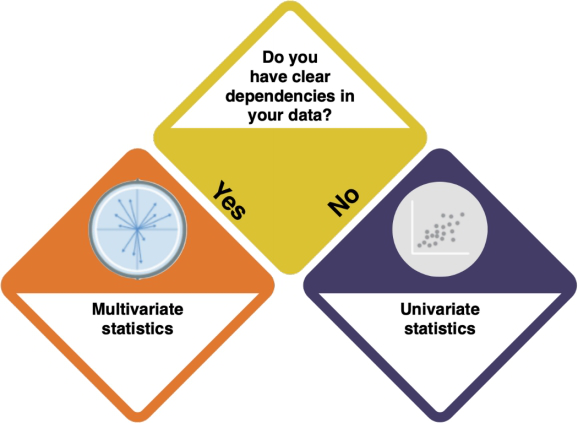

An initial path towards statistical analysis

Learning statistics takes time, as it is mostly experience that allows us to be able to approach the statistical analysis of any given dataset. While we cannot take off of you the burden to gather experience yourself, we developed this interactive page for you to find the best statistical method to analyze your given dataset. This can be a start for you to dive deeper into statistical analysis, and helps you better design studies.

Go through the images step by step, click on the answers that apply to your data, and let the page guide you.

If you need help with data visualisation, please refer to the entry on Introduction to statistical figures.

Start here with your data! This is your first question.

How do I know?

- Inspect your data with

strorsummary. Are there several variables? - What does the data show? Do the data suggest dependencies between the variables?

Contents

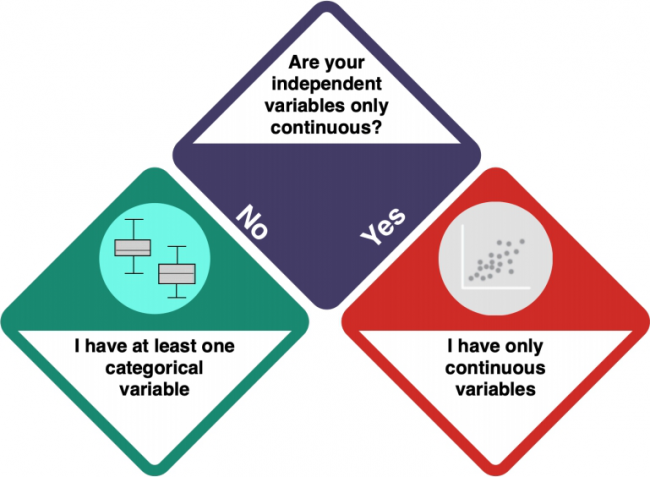

Univariate statistics

You are dealing with Univariate Statistics. This means that you either have only one variable, and/or there are dependencies between the variables. But what kind of variables do you have?

How do I know?

- Check the entry on Data formats to understand the difference between categorical and numeric variables.

- Investigate your data using

strorsummary. integer and numeric data is not categorical, while factorial, ordinal and character data is categorical.

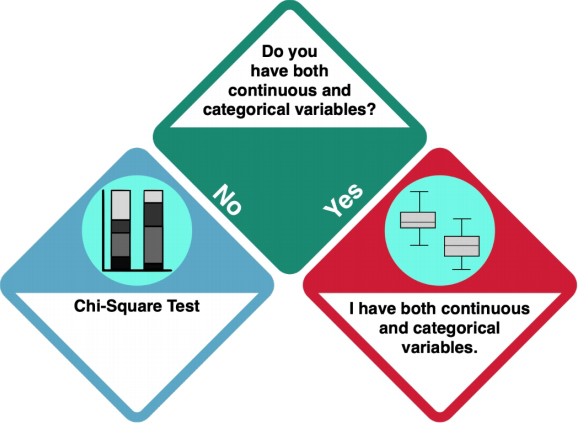

At least one categorical variable

Your dataset does not only contain continuous data. Does it only consist of categorical data, or of categorical and continuous data?

How do I know?

- Investigate your data using

strorsummary. integer and numeric data is not categorical, while factorial, ordinal and character data is categorical.

Only categorical data: Chi Square Test

If you have only one categorical variable, you could just plot the number of your data points in a barplot. The key R command is barplot(). Check the entry on barplots to learn more.

If you have more than one categorical variables, you could do a Chi Square Test.

A Chi Square test can be used to test if one variable influenced the other one, or if they occur independently from each other. The key R command here is: chisq.test(). Check the entry on Chi Square Tests to learn more.

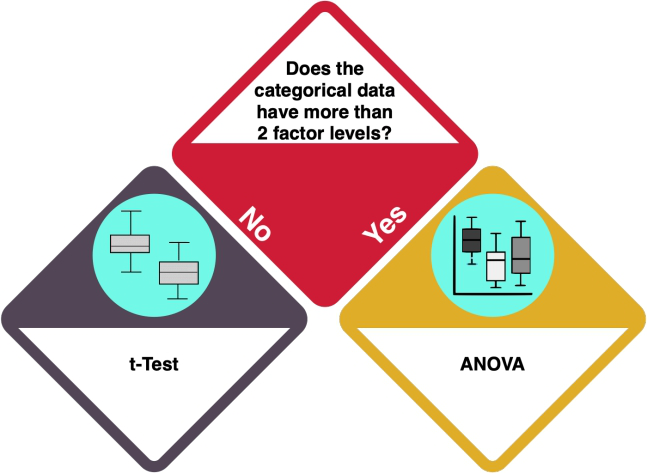

Categorical and continuous data

Your dataset consists of continuous and categorical data. How many levels does your categorical variable have?

How do I know?

- A 'factor level' is a category in a categorical variable. For example, when your variable is 'car brands', and you have 'AUDI' and 'TESLA', you have two factor levels. Investigate your data using

strorsummary. How many different categories does your categorical variable have?

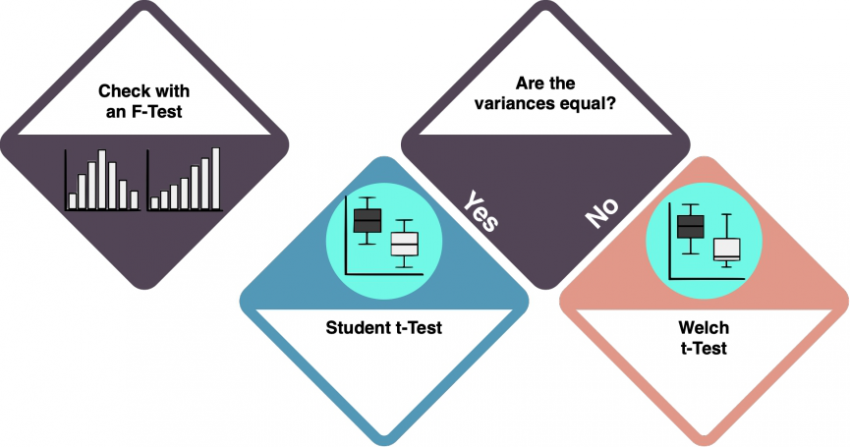

One or two factor levels: t-test

With one or two factor levels, you should do a t-test.

A one-sample t-test allows for a comparison of a dataset with a specified value. A two-sample t-test allows for a comparison of two different datasets or samples and tells you if the means of the two datasets differ significantly. The key R command is t-test(). Check the entry on the t-Test to learn more.

Depending on the variances of your variables, the type of t-test differs.

How do I know?

- Use an f-Test to check whether the variances of the datasets are equal. The key R command for an f-test is

var.test().

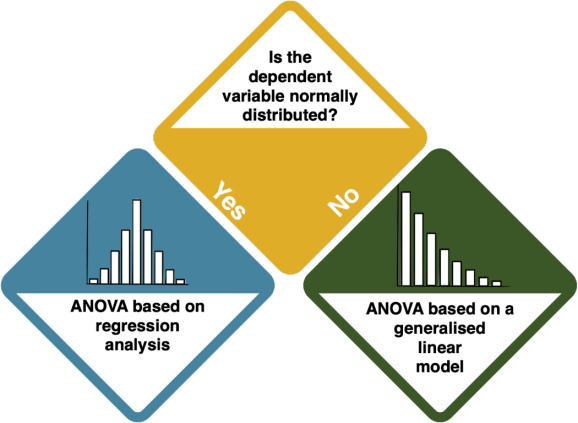

More than two factor levels: ANOVA

Your categorical variable has more than two factor levels: you should do an ANOVA.

An ANOVA compares the means of more than two groups by extending the restriction of the t-test. An ANOVA is typically visualised using Boxplots.

The key R command is aov(). Check the entry on the ANOVA to learn more.

However, the kind of ANOVA that you should do depends on the distribution of your dependent variable.

How do I know?

- Inspect the data by looking at histograms. The key R command for this is

hist(). Compare your distribution to the Normal Distribution. - Perform a statistical test like the Shapiro-Wilk test, which helps you assess whether you have a normal distribution. The key R command for this is

shapiro.test().

You might end here, but you might also want to do a Multiple ANOVA with model reduction. WORK IN PROGRESS

Only continuous variables

So your data is only continuous.

If you only have one variable, you might want to do a Histogram. This helps you visualize the distribution of your data. Check the entry on Histograms to learn more.

You could also just calculate the mean, median and range of your data distribution to understand it better. Also, you could visualize the mean of your data in a barplot, or better, in a boxplot. Key R commands are barplot() and boxplot(). Check the entries on Barplots and Boxplots to learn more.

If you have more than one variable, you need to check if there dependencies between the variables.

How do I know?

- Consider the data from a theoretical perspective. Is there a clear direction of the dependency? Does one variable cause the other? Check out the entry on Causality.

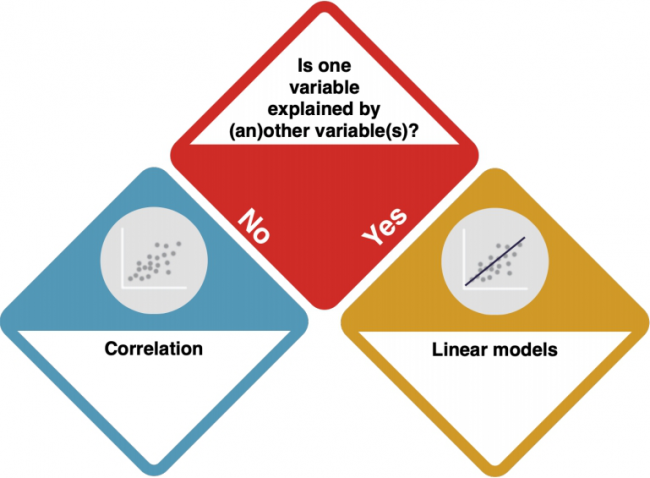

No dependencies: Correlations

If there are no dependencies between your variables, you should do a Correlation.

A correlation test inspects if two variables are related to each other. The direction of the connection (if or which variable influences another) is not set. Correlations are typically visualised using Scatter Plots or Line Charts. Key R commands are plot() to visualise your data, and cor.test() to check for correlations.

Check the entry on Correlations to learn more. NEEDS R EXAMPLE FOR CORRELATIONS; INCLUDING INFO ON RESIDUALS AND CORRELATION COEFFICIENT

The type of correlation that you need to do depends on your data distribution.

How do I know?

- Inspect the data by looking at histograms. The key R command for this is

hist(). Compare your distribution to the Normal Distribution. - Perform a statistical test like the Shapiro-Wilk test, which helps you assess whether you have a normal distribution. The key R command for this is

shapiro.test().

LINK BOTH BOXES TO R EXAMPLE PAGES ON PEARSON AND SPEARMAN CORRELATIONS

Clear dependencies

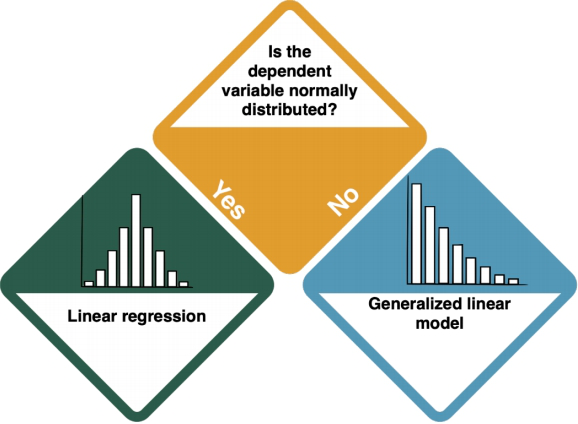

Your dependent variable is explained by one at leats one independent variable. Is the dependent variable normally distributed?

How do I know?

- Inspect the data by looking at histograms. The key R command for this is

hist(). Compare your distribution to the Normal Distribution. - Perform a statistical test like the Shapiro-Wilk test, which helps you assess whether you have a normal distribution. The key R command for this is

shapiro.test().

Normally distributed dependent variable: Linear Regression

If your dependent variable(s) is/are normally distributed, you should do a Linear Regression.

A linear regression is.. ADD

Check the entry on Linear Regressions MISSING to learn more.

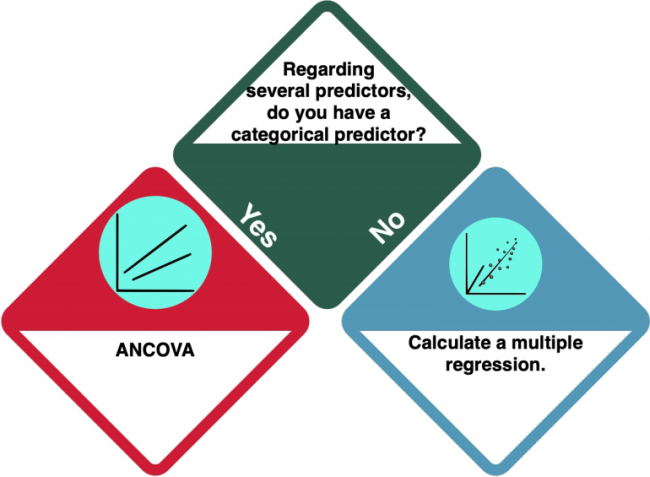

There may be one exception to a plain linear regression: if you have several predictors, there is one more decision to make:

Is there a categorical predictor?

You have several predictors in your dataset. WHAT DOES THIS MEAN? But is (at least) one of them categorical?

How do I know?

- Check the entry on Data formats to understand the difference between categorical and numeric variables.

- Investigate your data using

strorsummary. integer and numeric data is not categorical, while factorial and character data is. - HOW ELSE DO I KNOW IF THERE IS A CATEGORICAL PREDICTOR?

ANCOVA

If you have at least one categorical predictor, you should do an ANCOVA. An ANCOVA is.... (ADD) Check the entry on Ancova to learn more.

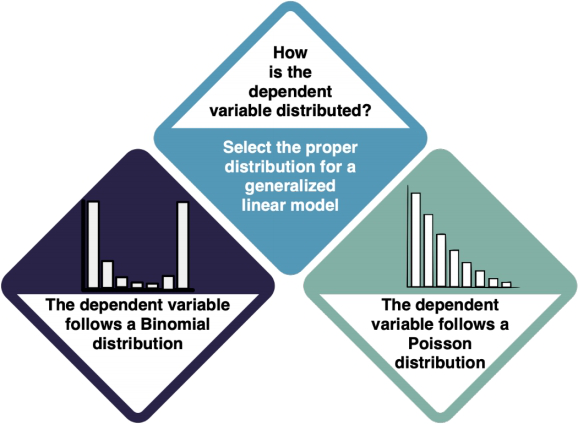

Not normally distributed dependent variable

The dependent variable(s) is/are not normally distributed. Which kind of distribution does it show, then? For both Binomial and Poisson distributions, your next step is the Generalised Linear Model. However, it is important that you select the proper distribution type in the GLM.

How do I know?

- Try to understand the data type of your dependent variable and what it is measuring. For example, if your data is the answer to a yes/no (1/0) question, you should use a Binomial distribution. If it is count data (1, 2, 3, 4...), use a Poisson Distribution. DO WE 'USE' THE DISTRIBUTION; OR DOES THE DATA ALREADY HAVE IT AND WE JUST LET THE GLM KNOW THAT? - WORDING NOT QUITE CLEAR HERE

- Check the entry on Non-normal distributions to learn more.

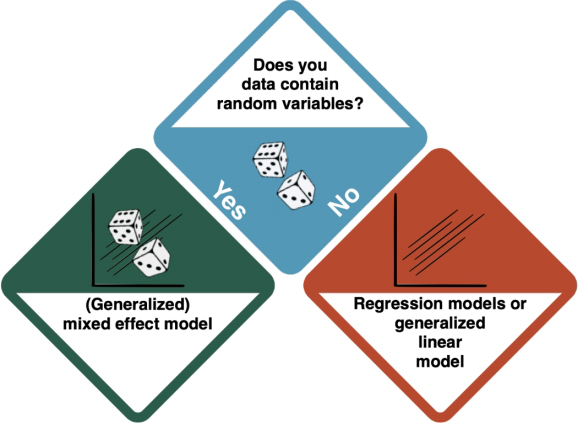

Generalised Linear Models

You have arrived at a Generalised Linear Model (GLM). GLMs are... ADD The key R command is glm().

Depending on the existence of random variables, there is a distinction between Mixed Effect Models, and Generalised Linear Models, which are based on regressions.

How do I know?

- Are there any variables that you cannot influence but whose effects you want to "rule out"? IF SO: WHAT DO WE CHOOSE THEN?

MAKE SURE THAT THE GLM ENTRY AND THE MIXED EFFECT MODEL ENTRY EXPLAIN THE ROLE OF RANDOM VARIABLES; AND POSSIBLY ALSO EXPLAIN THE ROLE OF DIFFERENT DISTRIBUTIONS (BINOMIAL; POISSON)

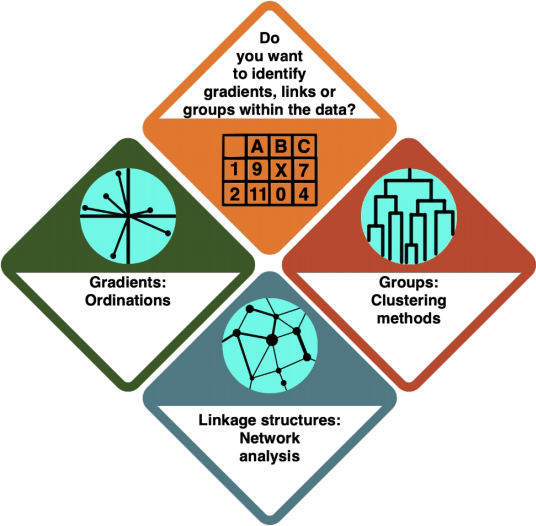

Multivariate statistics

You are dealing with Multivariate Statistics. This means that ... ADD You have multiple variables, and maybe they also have internal dependencies (CORRECT?). Which kind of analysis do you want to conduct?

How do I know? (ARE THE DESCRIPTIONS OK?)

- In an Ordination, you arrange your data alongside underlying gradients in the variables to see which variables most strongly define the data points.

- In a Cluster Analysis, you group your data points according to how similar they are, resulting in a tree structure.

- In a Network Analysis, you arrange your data in a network structure to understand their connections and the distance between individual data points.

Ordinations

You are doing an ordination. In an Ordination, you arrange your data alongside underlying gradients in the variables to see which variables most strongly define the data points. Check the entry on Ordinations MISSING to learn more.

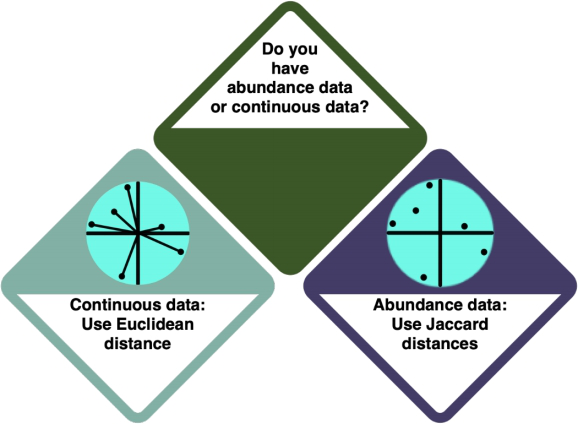

There is a difference between ordinations for different data types - for abundance data, you use Euclidean distances, and for continuous data, you use Jaccard distances.

How do I know?

- Check the entry on Data formats to learn more about the different data formats.

- Investigate your data using

strorsummary. Abundance data is marked as FORMATNAME, and continuous data is marked as FORMATNAME.

ORDINATIONS ENTRY SHOULD EXPLAIN LINEAR-BASED ORDINATIONS (EUCLIDEAN) AND CORRESPONDENCE ANALYSIS (JACCARD) (IS THIS EVEN CORRECT?)

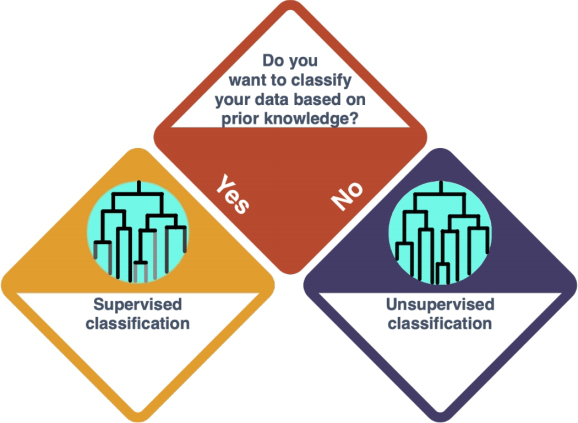

Cluster Analysis

So you decided for a Cluster Analysis. In a Cluster Analysis, you group your data points according to how similar they are, resulting in a tree structure. Check the entry on Clustering Methods to learn more.

There is a difference to be made here, dependent on whether you want to classify the data based on prior knowledge (supervised) or not (unsupervised). DISTINCTION BETWEEN SUPERVISED AND UNSUPERVISED IS NOT MADE IN THE CLUSTERING ENTRY - ADD THIS!

How do I know?

- HOW DO I KNOW IF IT IS BASED ON PRIOR KNOWLEDGE OR NOT?

- WHERE DO WE LINK FOR SUPERVISED AND UNSUPERVISED?

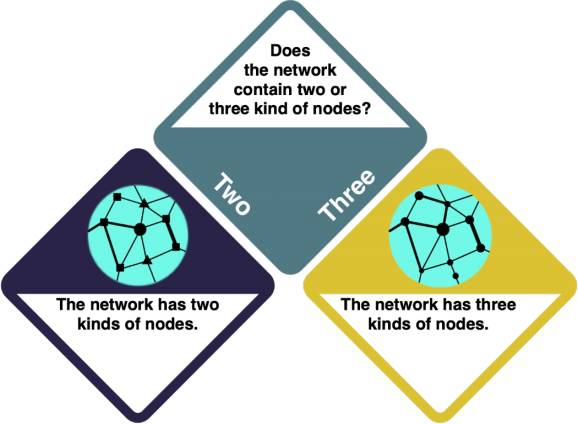

Network Analysis

WORK IN PROGRESS You have decided to do a Network Analysis. In a Network Analysis, you arrange your data in a network structure to understand their connections and the distance between individual data points. Check the entry on Social Network Analysis and the R example entry MISSING to learn more. Keep in mind that network analysis is complex, and there is a wide range of possible approaches that you need to choose between.

How do I know what I want?

- Consider your research intent: are you more interested in the role of individual actors, or rather in the network structure as a whole?

Some general guidance on the use of statistics

While it is hard to boil statistics down into some very few important generalities, I try to give you here a final bucket list to consider when applying or reading statistics.

1) First of all, is the statistics the right approach to begin with? Statistics are quite established in science, and much information is available in a form that allows you to conduct statistics. However, will statistics be able to generate a piece of the puzzle you are looking at? Do you have an underlying theory that can be put into constructs that enable a statistical design? Or do you assume that a rather open research question can be approached through a broad inductive sampling? The publication landscape, experienced researchers as well as pre-test may shed light on the question whether statistics can contribute solving your problem.

2) What are the efforts you need to put into the initial data gathering? If you decided that statistics would be valuable to be applied, the question then would be, how? To rephrase this statement: How exactly? Your sampling with all its constructs, sample sizes and replicates decides about the fate of everything you going to do later. A flawed dataset or a small or biased sample will lead to failure, or even worse, wrong results. Play it safe in the beginning, do not try to overplay your hand. Slowly edge your way into the application of statistics, and always reflect with others about your sampling strategy.

3) The analysis then demands hand-on skills, as implementing tests within a software is something that you learn best through repetition and practice. I suggest you to team up with other peers who decide to go deeper into statistical analysis. If you however decide against that, try to find geeks that may help you with your data analysis. Modern research works in teams of complementary people, thus start to think in these dimensions. If you chip in the topical expertise of the effort to do the sampling, other people may be glad about the chance to analyse the data.

4) This is also true for the interpretation, which most of all builds on experience. This is the point were a supervisor or a PhD student may be able to glance at a result and tell you which points are relevant, and which are negotiable. Empirical research typically produces results where in my experience about 80 % are next to negliable. It takes time to learn the difference between a trivial and an innovative result. Building on knowledge of the literature helps again to this end, but be patient as the interpretation of statistics is a skill that needs to ripen, since context matters. It is not so much about the result itself, but more about the whole context it is embedded in.

5) The last and most important point explores this thought further. What are the limitations of your results? Where can you see flaws, and how does the multiverse of biases influence your results and interpretation? What are steps to be taken in future research? And what would we change if we could start over and do the whole thing again? All these questions are like ghosts that repeatedly come to haunt a researcher, which is why we need to remember we look at pieces of the puzzle. Acknowledging this is I think very important, as much of the negative connotation statistics often attracts is rooted in a lack of understanding. If people would have the privilege to learn about statistics, they could learn about the power of statistics, as wells its limitations.

Never before did more people in the world have the chance to study statistics. While of course statistics can only offer a part of the puzzle, I would still dare to say that this is reason for hope. If more people can learn to unlock this knowledge, we might be able to move out of ignorance and more towards knowledge. I think it would be very helpful if in a controversial debate everybody could dig deep into the available information, and make up their own mind, without other people telling them what to believe. Learning about statistics is like learning about anything else, it is lifelong learning. I believe that true masters never achieve mastership, instead they never stop to thrive for it.