Difference between revisions of "Correlations"

| Line 20: | Line 20: | ||

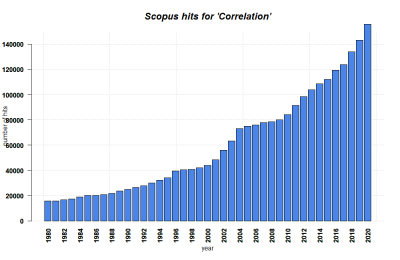

[[File:Correlation.png|400px|thumb|right|'''SCOPUS hits per year for Correlations until 2020.''' Search terms: 'Correlation' in Title, Abstract, Keywords. Source: own.]] | [[File:Correlation.png|400px|thumb|right|'''SCOPUS hits per year for Correlations until 2020.''' Search terms: 'Correlation' in Title, Abstract, Keywords. Source: own.]] | ||

| − | Karl Pearson is considered to be the founding father of mathematical statistics; hence it is no surprise that one of the central methods in statistics - to test the relationship between two continuous variables - was invented by him at the brink of the 20th century (see Karl Pearson's "Notes on regression and inheritance in the case of two parents" from 1895). His contribution was based on work from Francis Galton and Auguste Bravais. With more data becoming available and the need for an “exact science” as part of the industrialization and the rise of modern science, the Pearson correlation paved the road to modern statistics at the beginning of the 20th century. While other approaches such as the t-test or the Analysis of Variance ([[ANOVA]]) by Pearson's arch-enemy Fisher demanded an experimental approach, the correlation simply required data with a continuous measurement level. Hence it appealed to the demand for an analysis that could be conducted based solely on measurements done in engineering, or on counting as in economics, without being preoccupied too deeply with the reasoning on why variables correlated. '''Pearson recognized the predictive power of his discovery, and the correlation analysis became one of the most abundantly used statistical approaches in diverse disciplines such as economics, ecology, psychology and social sciences.''' Later came the regression analysis, which implies a causal link between two continuous variables. This makes it different from a correlation, where two variables are related, but not necessarily causally linked. 
This article focuses on correlation analysis | + | Karl Pearson is considered to be the founding father of mathematical statistics; hence it is no surprise that one of the central methods in statistics - to test the relationship between two continuous variables - was invented by him at the brink of the 20th century (see Karl Pearson's "Notes on regression and inheritance in the case of two parents" from 1895). His contribution was based on work from Francis Galton and Auguste Bravais. With more data becoming available and the need for an “exact science” as part of the industrialization and the rise of modern science, the Pearson correlation paved the road to modern statistics at the beginning of the 20th century. While other approaches such as the t-test or the Analysis of Variance ([[ANOVA]]) by Pearson's arch-enemy Fisher demanded an experimental approach, the correlation simply required data with a continuous measurement level. Hence it appealed to the demand for an analysis that could be conducted based solely on measurements done in engineering, or on counting as in economics, without being preoccupied too deeply with the reasoning on why variables correlated. '''Pearson recognized the predictive power of his discovery, and the correlation analysis became one of the most abundantly used statistical approaches in diverse disciplines such as economics, ecology, psychology and social sciences.''' Later came the regression analysis, which implies a causal link between two continuous variables. This makes it different from a correlation, where two variables are related, but not necessarily causally linked. This article focuses on correlation analysis and only touches upon regressions. For more, please refer to the entry on [[Regression Analysis]].) |

| + | |||

== What the method does == | == What the method does == | ||

| Line 40: | Line 41: | ||

<br/> | <br/> | ||

| − | '''There are some core questions related to the application of correlations:'''<br/> | + | '''There are some core questions related to the application and reading of correlations:'''<br/> |

| − | 1) ''Is the relationship between two variables positive or negative?'' For instance, being taller leads to a significant increase in [https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3534609/ body weight] | + | 1) ''Is the relationship between two variables positive or negative?'' If one variable increases, and the other one increases, too, we have a positive ("+") correlation. This is also true if both variables decrease. For instance, being taller leads to a significant increase in [https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3534609/ body weight]. On the other hand, if one variable increases, and the other decreases, the correlation is negative ("-"): for example, the relationship between 'pizza eaten' and 'pizza left' is negative. The more pizza slices are eaten, the fewer slices are still there. |

| + | 2) ''Is the relation positive or negative?'' | ||

| + | Relations can be positive or negative (or neutral). The normative value of a positive or negative relation typically has strong implications, especially if both directions are theoretically possible. Therefore it is vital to be able to interpret the direction of a correlative relationship. | ||

| − | 2) ''Is the estimated correlation coefficient small or large?'' It can range from -1 to +1, and is an important measure when we evaluate the strength of a statistical relationship. An example for a perfect positive correlation (with a correlation coefficient ''r'' of +1) is the relationship between temperature in [[To_Rule_And_To_Measure#Celsius_vs_Fahrenheit_vs_Kelvin|Celsius and Fahrenheit]]. This should not be surprising, since Fahrenheit is defined as 32 + 1.8° C. Therefore, their relationship is perfectly linear, which results in such a strong correlation coefficient. We can thus say that 100% of the variation in temperature in Fahrenheit is explained by the temperature in Celsius. | + | 2) ''Is the estimated correlation coefficient small or large?'' It can range from -1 to +1, and is an important measure when we evaluate the strength of a statistical relationship. An example for a perfect positive correlation (with a correlation coefficient ''r'' of +1) is the relationship between temperature in [[To_Rule_And_To_Measure#Celsius_vs_Fahrenheit_vs_Kelvin|Celsius and Fahrenheit]]. This should not be surprising, since Fahrenheit is defined as 32 + 1.8° C. Therefore, their relationship is perfectly linear, which results in such a strong correlation coefficient. We can thus say that 100% of the variation in temperature in Fahrenheit is explained by the temperature in Celsius. On the other hand, you might encounter data of two variables that is scattered all the way in an x-y-plot and you cannot find a significant relationship. The correlation coefficient ''r'' might be around 0.1, or 0.2. Here, you can assume that there is no strong relationship between these two variables, and that one variable does not explain the other one. |

| + | 1) ''How strong is the relation?'' | ||

| + | As we explained above, the data points may scatter widely, or there may be a rather linear relationship - and everything in between. The stronger the correlation estimate of a relation is, the more may these relations matter, some may argue. If the points are distributed like stars in the sky, then the relationship is probably not significant and interesting. Of course this is not entirely generalisable, but it is definitely true that a neutral relation only tells you, that the relation does not matter. At the same time, even weaker relations may give important initial insights into the data, and if two variables show any kind of relation, it is good to know the strength. By practising to quickly grasp the strength of a correlation, you become really fast in understanding relationships in data. Having this kind of skill is essential for anyone interested in approximating facts through quantitative data. | ||

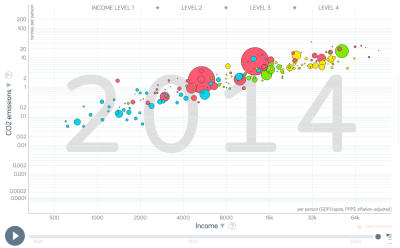

3) ''What does the relationship between two variables explain?'' To illustrate this, let us have a look at the example of the percentage of people working in [https://ourworldindata.org/employment-in-agriculture?source=post_page--------------------------- Agriculture] within individual countries. Across the world, people at a low income (<5000 Dollar/year) have a high variability in terms of agricultural employment: half of the population of the Chad work in agriculture, while in Zimbabwe it is only 10 %. However, at an income above 15000 Dollar/year, there is hardly any variance in the percentage of people that work in agriculture: it is always very low. This has reasons, and there are probably one or several variables that explain this variability. A correlation analysis helps us identify such variances in the data relationship, and we should look at correlation coefficients for different parts of the data to identify those parts of the data where we might want to have a closer look to better understand how variables are related. | 3) ''What does the relationship between two variables explain?'' To illustrate this, let us have a look at the example of the percentage of people working in [https://ourworldindata.org/employment-in-agriculture?source=post_page--------------------------- Agriculture] within individual countries. Across the world, people at a low income (<5000 Dollar/year) have a high variability in terms of agricultural employment: half of the population of the Chad work in agriculture, while in Zimbabwe it is only 10 %. However, at an income above 15000 Dollar/year, there is hardly any variance in the percentage of people that work in agriculture: it is always very low. This has reasons, and there are probably one or several variables that explain this variability. 
A correlation analysis helps us identify such variances in the data relationship, and we should look at correlation coefficients for different parts of the data to identify those parts of the data where we might want to have a closer look to better understand how variables are related. | ||

| + | |||

| + | 3) ''Does the relation change within parts of the data?'' | ||

| + | This is already an advanced skill. So if you have looked at the strength of a correlation, and its direction, you are good to go generally. But sometimes, these change in different parts of the data. | ||

| + | Regarding this, the best advice is to look at the initial scatterplot, but also the [https://www.youtube.com/watch?v=-qlb_nZvN_U residuals]. If the scattering of all points is more or less equal across the whole relation, then you may realise that all errors are equally distributed across the relation. In reality, this is often not the case. Instead we often know less about one part of the data, and more about another part of the data. In addition to this, we often have a stronger relation across parts of the dataset, and a weaker relation across other parts of the dataset. These differences are important, as they hint at underlying influencing variables or factors that we did not understand yet. Becoming versatile in reading scatter plots becomes a key skill here, as it allows you to rack biases and flaws in your dataset and analysis. This is probably the most advanced skill when it comes to reading a correlation plot. | ||

| + | |||

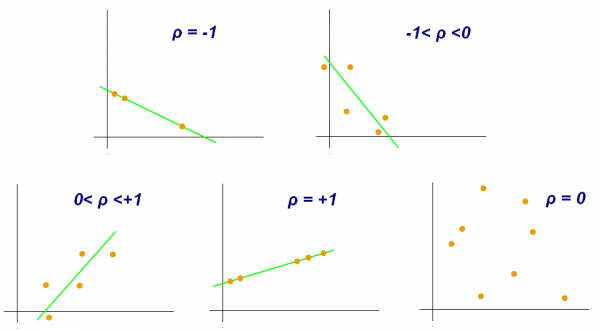

| + | As you can see, correlation analysis is first and foremost a matter of identifying ''if'' and ''how'' two variables are related. However, we do not necessarily assume that we can predict the value of one variable based on the value of the other variable. We only see how they are related. People often show a correlation in an x-y-plot, and put a line on the data. You can see this in the example below. The x-axis is one variable, the y-axis the other one. The green line - the "regression line" - is the best approximation for all data points. This means that this line has the minimum distance to all data points. This distance is what we measure with the correlation coefficient: if all data points are exactly on the line, we have a correlation of +1 or -1 (depending on the direction of the line). However, the further the data points are from the line, the closer the correlation coefficient is to 0. | ||

[[File:Correlation coefficient examples.png|600px|thumb|center|'''Examples for the correlation coefficient.''' Source: Wikipedia, Kiatdd, CC BY-SA 3.0]] | [[File:Correlation coefficient examples.png|600px|thumb|center|'''Examples for the correlation coefficient.''' Source: Wikipedia, Kiatdd, CC BY-SA 3.0]] | ||

| − | + | It is however important to know two things: | |

| − | |||

| − | 1) | + | 1) Do not confuse the slope of this line - i.e. the number of y-values that the slope steps per x-value - with the correlation coefficient. They are not the same, and this often leads to confusion. The slope of the line can easily be 5 or 10, but the correlation coefficient will always be between -1 and +1. |

| − | |||

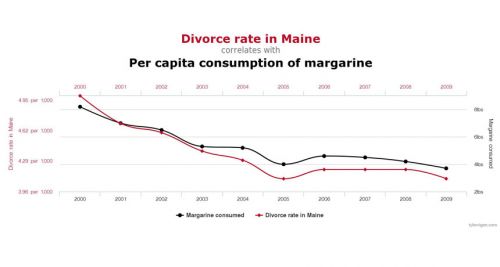

| − | 2) | + | 2) Regressions only really make sense if there is some kind of causal explanation for the relationship. We can create a regression line for all correlations of all pairs of two variables, but we might end up with something like the correlation below. It does not really make sense that the divorce rate in Maine and the margarine consumption are related - but their correlation coefficient is quite high! So you should always question correlations, and ask yourself which kinds of variables are tested for their relationship, and if you can derive meaningful results from doing so. |

| − | |||

| − | + | [[File:860-header-explainer-correlationchart.jpg|500px|thumb|left|'''Correlations can be deceitful'''. Source: [http://www.tylervigen.com/spurious-correlations Spurious Correlations]]] | |

| − | |||

| − | |||

== Strengths & Challenges == | == Strengths & Challenges == | ||

Revision as of 08:48, 6 July 2021

| Method categorization | | |
|---|---|---|
| Quantitative | Qualitative | |
| Inductive | Deductive | |
| Individual | System | Global |
| Past | Present | Future |

In short: Correlation analysis examines the statistical relationship between two continuous variables.

Background

Karl Pearson is considered to be the founding father of mathematical statistics; hence it is no surprise that one of the central methods in statistics - to test the relationship between two continuous variables - was invented by him at the turn of the 20th century (see Karl Pearson's "Notes on regression and inheritance in the case of two parents" from 1895). His contribution built on work by Francis Galton and Auguste Bravais. With more data becoming available and the need for an “exact science” as part of industrialization and the rise of modern science, the Pearson correlation paved the road to modern statistics at the beginning of the 20th century. While other approaches such as the t-test or the Analysis of Variance (ANOVA) by Pearson's arch-enemy Fisher demanded an experimental approach, the correlation simply required data with a continuous measurement level. Hence it appealed to the demand for an analysis that could be conducted based solely on measurements done in engineering, or on counting as in economics, without being preoccupied too deeply with the reasoning on why variables correlated. Pearson recognized the predictive power of his discovery, and correlation analysis became one of the most abundantly used statistical approaches in diverse disciplines such as economics, ecology, psychology and the social sciences. Later came regression analysis, which implies a causal link between two continuous variables. This makes it different from a correlation, where two variables are related, but not necessarily causally linked. This article focuses on correlation analysis and only touches upon regressions. For more, please refer to the entry on Regression Analysis.

What the method does

Correlation analysis examines the relationship between two continuous variables, and tests whether the relation is statistically significant. For this, correlation analysis takes the sample size and the strength of the relation between the two variables into account. The so-called correlation coefficient indicates the strength of the relation, and ranges from -1 to 1. A coefficient close to 0 indicates a weak correlation. A coefficient close to 1 indicates a strong positive correlation, and a coefficient close to -1 indicates a strong negative correlation.

Correlations can be applied to all kinds of quantitative continuous data from all spatial and temporal scales, from diverse methodological origins including Surveys and Census data, ecological measurements, economical measurements, GIS and more. Correlations are also used in both inductive and deductive approaches. This versatility makes correlation analysis one of the most frequently used quantitative methods to date.

There are different forms of correlation analysis. The Pearson correlation is usually applied to normally distributed data, or more precisely, data that shows a Student's t-distribution. Alternative correlation measures like Kendall's tau and Spearman's rho are usually applied to variables that are not normally distributed. I recommend you just look them up, and keep as a rule of thumb that Spearman's rho is the most robust correlation measure when it comes to non-normally distributed data.

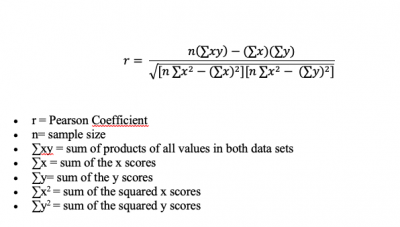

Calculating Pearson's correlation coefficient r

The formula to calculate a Pearson correlation coefficient is fairly simple. You just need to keep in mind that you have two variables or samples, called x and y, and their respective means (m).
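The formula itself is not reproduced in the text here, so the following is the standard textbook form, written with the notation from the paragraph above. The symbols are assumptions about that notation: n is the number of paired observations, x_i and y_i are the individual values, and m_x and m_y are the means of x and y.

```latex
r = \frac{\sum_{i=1}^{n} (x_i - m_x)(y_i - m_y)}
         {\sqrt{\sum_{i=1}^{n} (x_i - m_x)^2} \; \sqrt{\sum_{i=1}^{n} (y_i - m_y)^2}}
```

The numerator captures how x and y vary together, while the denominator rescales this by the variation of each variable on its own, which is why r always stays between -1 and +1.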

There are some core questions related to the application and reading of correlations:

1) Is the relationship between two variables positive or negative? If one variable increases and the other one increases too, we have a positive ("+") correlation. This is also true if both variables decrease. For instance, being taller is associated with a significant increase in body weight. On the other hand, if one variable increases and the other decreases, the correlation is negative ("-"): for example, the relationship between 'pizza eaten' and 'pizza left' is negative. The more pizza slices are eaten, the fewer slices are still there.

Is the relation positive or negative? Relations can be positive or negative (or neutral). The normative value of a positive or negative relation typically has strong implications, especially if both directions are theoretically possible. Therefore it is vital to be able to interpret the direction of a correlative relationship.

2) Is the estimated correlation coefficient small or large? It can range from -1 to +1, and is an important measure when we evaluate the strength of a statistical relationship. An example for a perfect positive correlation (with a correlation coefficient r of +1) is the relationship between temperature in Celsius and Fahrenheit. This should not be surprising, since the temperature in Fahrenheit is defined as 32 + 1.8 × the temperature in Celsius. Therefore, their relationship is perfectly linear, which results in such a strong correlation coefficient. We can thus say that 100% of the variation in temperature in Fahrenheit is explained by the temperature in Celsius. On the other hand, you might encounter data of two variables that are scattered all across an x-y-plot, and you cannot find a significant relationship. The correlation coefficient r might be around 0.1, or 0.2. Here, you can assume that there is no strong relationship between these two variables, and that one variable does not explain the other one.

How strong is the relation? As we explained above, the data points may scatter widely, or there may be a rather linear relationship - and everything in between. Some may argue that the stronger the correlation estimate of a relation is, the more the relation matters. If the points are distributed like stars in the sky, then the relationship is probably neither significant nor interesting. Of course this is not entirely generalisable, but it is definitely true that a neutral relation only tells you that the relation does not matter. At the same time, even weaker relations may give important initial insights into the data, and if two variables show any kind of relation, it is good to know the strength. By practising to quickly grasp the strength of a correlation, you become really fast in understanding relationships in data. Having this kind of skill is essential for anyone interested in approximating facts through quantitative data.
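The two textbook cases mentioned above, temperature in Celsius and Fahrenheit and 'pizza eaten' versus 'pizza left', can be checked with a few lines of Python. This is a minimal sketch: the function `pearson_r` is a plain implementation of the usual Pearson formula, and the sample values are made up for illustration.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient of two equally long samples."""
    m_x = sum(x) / len(x)  # mean of x
    m_y = sum(y) / len(y)  # mean of y
    co_variation = sum((a - m_x) * (b - m_y) for a, b in zip(x, y))
    ss_x = sum((a - m_x) ** 2 for a in x)  # sum of squares of x
    ss_y = sum((b - m_y) ** 2 for b in y)  # sum of squares of y
    return co_variation / math.sqrt(ss_x * ss_y)


# Perfect positive relation: Fahrenheit = 32 + 1.8 * Celsius
celsius = [-10, 0, 10, 20, 30, 40]
fahrenheit = [32 + 1.8 * c for c in celsius]
r_temperature = pearson_r(celsius, fahrenheit)

# Perfect negative relation: every slice eaten is one slice less left
pizza_eaten = [0, 1, 2, 3, 4, 5, 6, 7, 8]
pizza_left = [8 - eaten for eaten in pizza_eaten]
r_pizza = pearson_r(pizza_eaten, pizza_left)

print(f"Celsius vs Fahrenheit: r = {r_temperature:.3f}")
print(f"Pizza eaten vs left:   r = {r_pizza:.3f}")
```

Both relations are exactly linear, so the coefficients sit at the two ends of the possible range (up to floating-point rounding). Real data will almost always fall somewhere in between.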

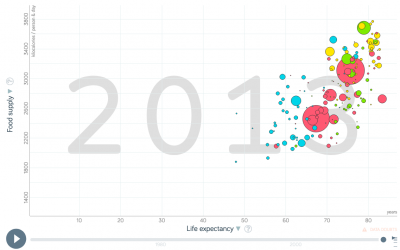

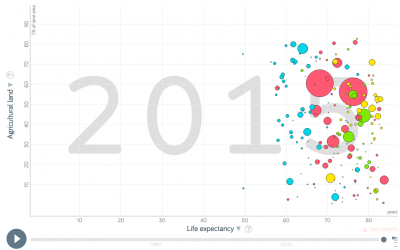

3) What does the relationship between two variables explain? To illustrate this, let us have a look at the example of the percentage of people working in Agriculture within individual countries. Across the world, countries with a low income (<5000 Dollar/year) show a high variability in terms of agricultural employment: half of the population of Chad works in agriculture, while in Zimbabwe it is only 10 %. However, at an income above 15000 Dollar/year, there is hardly any variance in the percentage of people that work in agriculture: it is always very low. This has reasons, and there are probably one or several variables that explain this variability. A correlation analysis helps us identify such variances in the data relationship, and we should look at correlation coefficients for different parts of the data to identify those parts where we might want to have a closer look to better understand how variables are related.

Does the relation change within parts of the data? This is already an advanced skill. If you have looked at the strength of a correlation and its direction, you are generally good to go. But sometimes, these change in different parts of the data. Regarding this, the best advice is to look at the initial scatterplot, but also the residuals. If the scattering of all points is more or less equal across the whole relation, then you may realise that all errors are equally distributed across the relation. In reality, this is often not the case. Instead we often know less about one part of the data, and more about another part of the data. In addition to this, we often have a stronger relation across parts of the dataset, and a weaker relation across other parts of the dataset. These differences are important, as they hint at underlying influencing variables or factors that we did not understand yet. Becoming versatile in reading scatter plots becomes a key skill here, as it allows you to track biases and flaws in your dataset and analysis. This is probably the most advanced skill when it comes to reading a correlation plot.

As you can see, correlation analysis is first and foremost a matter of identifying if and how two variables are related. However, we do not necessarily assume that we can predict the value of one variable based on the value of the other variable. We only see how they are related. People often show a correlation in an x-y-plot, and put a line on the data. You can see this in the example below. The x-axis is one variable, the y-axis the other one. The green line - the "regression line" - is the best approximation for all data points. This means that this line minimizes the overall distance to all data points, typically measured as the sum of the squared vertical distances. How closely the points cluster around this line is what the correlation coefficient reflects: if all data points are exactly on the line, we have a correlation of +1 or -1 (depending on the direction of the line). However, the further the data points are from the line, the closer the correlation coefficient is to 0.

It is however important to know two things:

1) Do not confuse the slope of this line - i.e. the change in y per unit change in x - with the correlation coefficient. They are not the same, and this often leads to confusion. The slope of the line can easily be 5 or 10, but the correlation coefficient will always be between -1 and +1.

2) Regressions only really make sense if there is some kind of causal explanation for the relationship. We can create a regression line for all correlations of all pairs of two variables, but we might end up with something like the correlation below. It does not really make sense that the divorce rate in Maine and the margarine consumption are related - but their correlation coefficient is quite high! So you should always question correlations, and ask yourself which kinds of variables are tested for their relationship, and if you can derive meaningful results from doing so.

Strengths & Challenges

- Correlations test for mere relations, but do not depend on deductive reasoning. Hence correlations can be powerful both regarding inductive predictions as well as for initial analysis of data without any underlying theoretical foundation. Yet, with the predictive power of correlations comes a great responsibility for the researchers who apply correlations, as it is tempting to infer causality purely from the results of correlations. Economics and other fields have a long history of causal interpretation based on basically inductive correlative results. It can be tempting to assume causality based purely on inductively created correlations, even if there is no logical connection explaining the correlation. For more thoughts on the connection between correlations and causality, have a look at this entry: Causality and correlation.

- Correlations are rather easy to apply, and most software allows you to create simple scatterplots that can then be analyzed using correlations. However, you need some minimal knowledge about data distribution, since for instance the Pearson correlation is based on data that is normally distributed.

- There is an endless debate about which correlation coefficient value is high, and which one is low. In other words: how much does a correlation explain, and what is this worth? While this depends widely on the context, it is still remarkable that people keep discussing this. A high relation can be trivial or wrong, while a low relation can be an important scientific result. Above all, even a lack of a statistical relation between two variables is already a statistical result.

Normativity

- While it is tempting to find causality in correlations, this is potentially difficult because correlations indicate statistical relations, but not causal explanations, which is a subtle but important difference. Diverse disciplines - among them economics, psychology and ecology - are widely built on correlative analysis, yet do not always urge caution in the interpretation of correlations.

- Another normative problem of correlations is rooted in so-called statistical fishing. With more and more data becoming available, there is an increasing chance that certain correlations are just significant by chance, for which there is a corrective procedure available called Bonferroni correction. However, this is seldom applied, and since p-value-driven statistics are increasingly viewed critically, the resulting correlations should be seen as an initial form of a mostly inductive analysis, no more, but also not less. With some practice, p-value-driven statistics can be a robust tool to compare statistical relations in continuous data.
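The idea behind the Bonferroni correction mentioned above can be sketched in a few lines: the significance level is divided by the number of tests, so that each individual correlation has to pass a stricter threshold. The p-values below are hypothetical and only serve as an illustration, and the significance level of 0.05 is the common convention, not a fixed rule.

```python
# Hypothetical p-values from ten correlation tests on the same dataset.
p_values = [0.001, 0.012, 0.03, 0.04, 0.2, 0.5, 0.049, 0.6, 0.8, 0.004]

alpha = 0.05                                # conventional significance level
bonferroni_alpha = alpha / len(p_values)    # stricter threshold per test

significant_uncorrected = [p for p in p_values if p < alpha]
significant_corrected = [p for p in p_values if p < bonferroni_alpha]

print(f"threshold per test after correction: {bonferroni_alpha:.3f}")
print(f"significant without correction: {len(significant_uncorrected)}")
print(f"significant with correction:    {len(significant_corrected)}")
```

In this made-up case, most of the seemingly significant correlations do not survive the correction, which illustrates how easily statistical fishing can produce results that are significant by chance alone.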

Outlook

Correlations are among the foundational pillars of frequentist statistics. Nonetheless, with science engaging in more complex designs and analysis, correlations will increasingly become less important. As a robust workhorse for initial analysis, however, they will remain a good starting point for many datasets. Time will tell whether other approaches - such as Bayesian statistics and machine learning - will ultimately become more abundant. Correlations may benefit from a clear comparison to results based on Bayesian statistics.

Key Publications

Hazewinkel, Michiel, ed. (2001). Correlation (in statistics). Encyclopedia of Mathematics, Springer Science+Business Media B.V. / Kluwer Academic Publishers.

Further Information

- If you want to practise recognizing whether a correlation is weak or strong I recommend spending some time on this website. There you can guess the correlation coefficients based on graphs: http://guessthecorrelation.com/

- The correlation coefficient: A very detailed and vivid article

- The relationship of temperature in Celsius and Fahrenheit: Several examples of interpreting the correlation coefficient

- Employment in Agriculture: A detailed database

- Kendall's Tau & Spearman's Rank: Two examples for other forms of correlation

- Strength of Correlation Plots: Some examples

- History of antibiotics: An example for findings when using the inductive approach

- Pearson's correlation coefficient: Many examples

- Pearson correlation: A quick explanation

- Pearson's r Correlation: An example calculation

The author of this entry is Henrik von Wehrden.