Simple Statistical Tests


[[File:Quan dedu indi syst glob past pres.png|thumb|right|[[Design Criteria of Methods|Method Categorisation:]]<br>
'''Quantitative''' - Qualitative<br>
'''Deductive''' - Inductive<br>
'''Individual''' - '''System''' - '''Global'''<br>
'''Past''' - '''Present''' - Future]]

'''In short:''' Simple statistical tests encompass an array of tests that are all built on probability, and no other validation criteria.

== Background ==

Simple statistical tests provide the baseline for advanced statistical thinking. While they are less commonly used in empirical analysis today, simple tests are the foundation of modern statistics. Student's t-test, which originated around 100 years ago, provided the crucial link from the more inductive thinking of Sir Francis Bacon towards the actual statistical testing of [[Glossary|hypotheses]]. The formulation of the so-called null hypothesis is the first step within simple tests. Informed by theory, the test then calculates the probability of obtaining data at least as extreme as the sample if the null hypothesis were true. Null hypotheses are hence the [[Glossary|assumptions]] we have about the world, and these assumptions can be rejected or retained based on the data.

The following information on simple statistical tests assumes some knowledge about data formats and data distribution. If you want to learn more about these, please refer to the entries on [[Data formats]] and [[Data distribution]].

== Most relevant simple tests ==

====One sample t-test====

'''The easiest example is the [https://www.youtube.com/watch?v=VPd8DOL13Iw one sample t-test]''': it allows us to test a dataset (more specifically, its mean value) against a specified reference value. For this purpose, the t-test gives you a p-value at the end. If the p-value is below 0.05, the sample mean differs significantly from the reference value. Important: the data of the sample has to be normally distributed.

'''Example''': Do the packages of your favourite cookie brand always contain the amount of cookies stated on the outside of the box? Collect some of the packages, weigh the cookies contained therein and calculate the mean weight. Now, you can compare this value to the weight that is stated on the box using a one sample t-test.
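To make the logic of the one sample t-test concrete: the test compares the sample mean with the reference value mu0 through the statistic t = (mean - mu0) / (s / sqrt(n)), where s is the sample standard deviation and n the number of packages, and reads the p-value from a t-distribution with n - 1 degrees of freedom. The following is a minimal R sketch for the cookie example; the ten package weights and the stated weight of 250 g are invented for illustration and are not data from this entry.

```r
# Hypothetical cookie package weights in grams (invented for illustration)
weights <- c(248.1, 251.3, 246.9, 249.5, 247.2, 250.4, 245.8, 248.7, 249.9, 246.5)
stated_weight <- 250  # hypothetical weight printed on the box

# Manual computation of the one sample t statistic
n <- length(weights)
t_manual <- (mean(weights) - stated_weight) / (sd(weights) / sqrt(n))

# Two-sided p-value from a t-distribution with n - 1 degrees of freedom
p_manual <- 2 * pt(-abs(t_manual), df = n - 1)

# The same test with R's built-in function
result <- t.test(weights, mu = stated_weight)
print(result)

c(t_manual = t_manual, p_manual = p_manual)
```

The manual values should agree with the t value and p-value printed by t.test(); if the p-value were below 0.05, we would conclude that the mean package weight differs from the stated weight.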

For more details and R examples on t-tests, please refer to the [[T-Test]] entry.

====Two sample t-test====

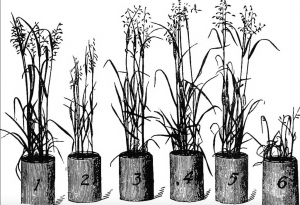

[[File:Bildschirmfoto 2020-04-26 um 17.57.43.png|thumb|With a two sample test we can compare the mean growth of both our groups (with or without fertiliser) and state whether they are significantly different.]]

'''[https://www.youtube.com/watch?v=NkGvw18zlGQ Two sample tests] are the next step'''. These allow a [https://explorable.com/independent-two-sample-t-test comparison of two different datasets] within an experiment. They tell you whether the means of the two datasets differ significantly. If the p-value is below 0.05, the two datasets differ significantly. The usefulness of this test depends strongly on the number of samples: the more samples we have for each dataset, the more we can understand about the difference between the datasets.

Important: The data of the samples has to be normally distributed. Also, the kind of t-test you should apply depends on the variance in the parent populations of the samples. For a '''Student’s t-test''', equal variances in the two groups are required. A '''Welch t-test''', by contrast, can deal with samples that display differing variances (1). To know whether the datasets have equal or differing variances, have a look at the f-test.

'''Example:''' The classic example would be to grow several plants and to add fertiliser to half of them. We can then compare the growth of the plants between the control samples without fertiliser and the samples that had fertiliser added.

''Plants with fertiliser (cm):'' 7.44 6.35 8.52 11.40 10.48 11.23 8.30 9.33 9.55 10.40 8.36 9.69 7.66 8.87 12.89 10.54 6.72 8.83 8.57 7.75

''Plants without fertiliser (cm):'' 6.07 9.55 5.72 6.84 7.63 5.59 6.21 3.05 4.32 8.27 6.13 7.92 4.08 7.33 9.91 8.35 7.26 6.08 5.81 8.46

The result of the two-sample t-test is a p-value of 7.468e-05, which is close to zero and clearly below 0.05. Hence, the samples differ significantly and the fertiliser is likely to have an effect.
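The fertiliser example can be reproduced in R with the values listed above. The entry does not state which variant of the t-test produced the reported p-value, so this sketch first checks the variances with an f-test and then runs both Welch's test (R's default) and Student's test. Because both groups contain 20 plants, the two variants share the same t statistic and differ only in their degrees of freedom.

```r
# Plant heights (cm) from the fertiliser example in the text
with_fert <- c(7.44, 6.35, 8.52, 11.40, 10.48, 11.23, 8.30, 9.33, 9.55, 10.40,
               8.36, 9.69, 7.66, 8.87, 12.89, 10.54, 6.72, 8.83, 8.57, 7.75)
without_fert <- c(6.07, 9.55, 5.72, 6.84, 7.63, 5.59, 6.21, 3.05, 4.32, 8.27,
                  6.13, 7.92, 4.08, 7.33, 9.91, 8.35, 7.26, 6.08, 5.81, 8.46)

# f-test: do the two groups have equal variances?
print(var.test(with_fert, without_fert))

# Welch t-test (R's default, var.equal = FALSE)
welch <- t.test(with_fert, without_fert)
# Student's t-test, assuming equal variances in both groups
student <- t.test(with_fert, without_fert, var.equal = TRUE)

print(welch)
print(student)
```

Compare the printed p-values with the one reported above; both should be far below 0.05.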

For more details on t-tests, please refer to the [[T-Test]] entry.

{| class="wikitable mw-collapsible mw-collapsed" style="width: 100%; background-color: white"
|-
! Expand here for an R example for two-sample t-Tests.
|-
|<syntaxhighlight lang="R" line>
#R example for a two-sample t-test.
#The two-sample t-test can be used to test whether two samples differ significantly regarding their means.
#H0 hypothesis: The two samples' means are not different.
#H1 hypothesis: The two samples' means are different.

#We want to analyse the iris dataset provided by R
iris <- iris
#Let's have a first look at the data
head(iris)
str(iris)
summary(iris)
#The dataset gives the measurements in centimeters of the variables 'sepal length' and 'sepal width'
#and 'petal length' and 'petal width',
#respectively, for 50 flowers from each of 3 species of Iris (Iris setosa, versicolor, and virginica).

#Let's split the dataset into 3 datasets, one for each species:
versicolor <- subset(iris, Species == "versicolor")
virginica <- subset(iris, Species == "virginica")
setosa <- subset(iris, Species == "setosa")
#Let us test whether the difference in petal length between versicolor and virginica is significant:
#H0 hypothesis: versicolor and virginica do not have different petal length
#H1 hypothesis: versicolor and virginica have different petal length
#What does the t-test say?
t.test(versicolor$Petal.Length, virginica$Petal.Length)
#the p-value is < 2.2e-16, which is far below 0.05 (it's almost 0).
#Therefore we reject the H0 hypothesis and adopt the H1 hypothesis.
#--------------additional remarks on Welch's t-test----------------------
#The widely known Student's t-test requires equal variances of the samples.
#Let's check whether this is the case for our example from above by means of an f-test.
#If variances are unequal, we could use a Welch t-test instead, which is able to deal with unequal variances of samples.
var.test(versicolor$Petal.Length, virginica$Petal.Length)

#the resulting p-value is above 0.05, so we have no evidence that the variances of the samples differ.
#Hence, this would justify the use of a Student's t-test.
#Interestingly, R uses the Welch t-test by default (var.equal = FALSE), because it can deal with differing variances.
#Accordingly, what we performed above is a Welch t-test.
#As the variances of our samples do not differ significantly, we could use a Student's t-test.
#Therefore, we set the argument "var.equal" of the t-test command to "TRUE". R will use the Student's t-test now.

t.test(versicolor$Petal.Length, virginica$Petal.Length, var.equal = TRUE)

#In comparison to the Welch t-test performed above, the df changed a little bit, while the t-value stays identical
#(both groups contain 50 flowers) and the p-value is again reported as < 2.2e-16.
#Our conclusion therefore stays the same. What a relief.
</syntaxhighlight>
|}

====Paired t-test====
'''[https://www.statisticssolutions.com/manova-analysis-paired-sample-t-test/ Paired t-tests] are the third type of simple test.''' These allow for a comparison of a sample before and after an intervention. Within such an experimental setup, the same individuals are measured before and after an event, so that the influence of the event on the dataset can be evaluated. If the sample changes significantly between the start and the end state, you will again receive a p-value below 0.05.

Important: for the paired t-test, a few assumptions need to be met:
* Differences between paired values follow a [[Data distribution|normal distribution]].
* The data is continuous.
* The samples are paired or dependent.
* Each unit has an equal probability of being selected.

'''Example:''' An easy example would be the behaviour of nesting birds. The range of birds outside of the breeding season differs dramatically from their range while they are nesting. Measuring the range of the same individual birds in both periods yields paired data.

For more details on t-tests, please refer to the [[T-Test]] entry.

{| class="wikitable mw-collapsible mw-collapsed" style="width: 100%; background-color: white"
|-
! Expand here for an R example on paired t-tests.
|-
|<syntaxhighlight lang="R" line>
# R Example for a Paired Samples T-Test
# The paired t-test can be used when we want to see whether a treatment had a significant effect on a sample, compared to when the treatment was not applied. The samples with and without treatment are dependent, or paired, with each other.
# Setting up our Hypotheses (Two-Tailed):
# H0: The mean of the pairwise differences is 0 / Paired population means are equal
# H1: The mean of the pairwise differences is not 0 / Paired population means are not equal
# Example:
# Consider the following supplement dataset to show the effect of 2 supplements on the time
# it takes for an athlete (in minutes) to finish a practice race
s1 <- c(10.9, 12.5, 11.4, 13.6, 12.2, 13.2, 10.6, 14.3, 10.4, 10.3)
s2 <- c(15.7, 16.6, 18.2, 15.2, 17.8, 20.0, 14.0, 18.7, 16.0, 17.3)
id <- c(1,2,3,4,5,6,7,8,9,10)
supplement <- data.frame(id,s1,s2)
supplement$difference <- supplement$s1 - supplement$s2
View(supplement)
# Exploring the data
summary(supplement)
str(supplement)
# Now, we can go for the Paired Samples T-Test
# We can define our Hypotheses as (Two-Tailed):
# H0: There is no difference in the mean time taken to finish the race
# for the 2 supplements
# H1: There is a difference in the mean time taken to finish the race
# for the 2 supplements
t.test(supplement$s1, supplement$s2, paired = TRUE)
# As the p-value is less than the level of significance (0.05), we have
# sufficient evidence to reject H0 and conclude that there is a significant
# difference in the mean time taken to finish the race with the 2 supplements
# Alternatively, if we wanted to check which supplement gives a better result,
# we could use a one-tailed test by setting the "alternative" argument to "less" or "greater"
</syntaxhighlight>
|}
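Because the paired t-test only uses the difference within each pair, it is equivalent to a one-sample t-test of these differences against zero; this is also why the normality assumption refers to the differences rather than to the raw values. A short R sketch illustrating this with the supplement race times from the paired R example:

```r
# Supplement race times (minutes) from the paired t-test example
s1 <- c(10.9, 12.5, 11.4, 13.6, 12.2, 13.2, 10.6, 14.3, 10.4, 10.3)
s2 <- c(15.7, 16.6, 18.2, 15.2, 17.8, 20.0, 14.0, 18.7, 16.0, 17.3)

# Paired t-test on the two dependent samples
paired <- t.test(s1, s2, paired = TRUE)

# One-sample t-test of the within-pair differences against 0
differences <- s1 - s2
one_sample <- t.test(differences, mu = 0)

# Both approaches give the same t statistic, degrees of freedom and p-value
c(paired = unname(paired$statistic), one_sample = unname(one_sample$statistic))

# The normality assumption can be checked on the differences themselves
shapiro.test(differences)
```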

| − | |||

| + | ====Chi-square Test of Stochastic Independence==== | ||

| + | [[File:Chi square example.png|frameless|500px|right|Source: John Oliver Engler]] | ||

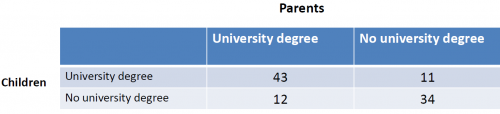

| + | The Chi-Square Test can be used to check if one variable influences another one, or if they are independent of each other. The Chi Square test works for data that is only categorical. | ||

| + | |||

| + | '''Example''': Do the children of parents with an academic degree visit a university more often, for example because they have higher chances to achieve good results in school? The table on the right shows the data that we can use for the Chi-Square test. | ||

| + | |||

| + | For this example, the chi-quare test yields a p-value of 2.439e-07, which is close to zero. We can reject the null hypothesis that there is no dependency, but instead assume that, based on our sample, the education of parents has an influence on the education of their children. | ||

| + | |||

{| class="wikitable mw-collapsible mw-collapsed" style="width: 100%; background-color: white"
|-
! Expand here for an R example for the Chi-Square Test.
|-
|<syntaxhighlight lang="R" line>

#R example for Chi-Square Test
#We create a table in which two forests are differentiated according to their distribution of male, female and juvenile trees.

chi_sq <- as.table(rbind(c(16,20,19), c(23,12,10)))
dimnames(chi_sq) <- list(forest = c("ForestA","ForestB"), gender = c("male", "female", "juvenile"))
View(chi_sq)

#The question is whether the abundance of trees in these forests is random (i.e. stochastically independent) or whether the two variables (forest, gender) influence each other.
#For example, is it just coincidence that there are 23 male trees in forest B but only 16 in forest A?
#H0: there is no dependence between the variables
#H1: there is a dependency
chisq.test(chi_sq)

#Results of the Pearson's Chi-squared test
#data: chi_sq
#X-squared = 5.1005, df = 2, p-value = 0.07806
#p-value > 0.05 -> We cannot reject hypothesis H0.
#Thus, we find no evidence of a dependency between the variables.
</syntaxhighlight>
|}
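Where does X-squared = 5.1005 in the forest example come from? Under the null hypothesis of independence, the expected count of each cell is its row total times its column total divided by the grand total, and the statistic sums (observed - expected)^2 / expected over all cells, with (rows - 1) * (columns - 1) degrees of freedom. The following R sketch computes this by hand for the same table; by our own calculation it should reproduce X-squared = 5.1005 and p-value = 0.07806 with 2 degrees of freedom.

```r
# Forest table from the example above: rows = forests, columns = tree groups
chi_sq <- as.table(rbind(c(16, 20, 19), c(23, 12, 10)))
dimnames(chi_sq) <- list(forest = c("ForestA", "ForestB"),
                         gender = c("male", "female", "juvenile"))

# Expected counts under independence: row total * column total / grand total
expected <- outer(rowSums(chi_sq), colSums(chi_sq)) / sum(chi_sq)
print(expected)

# Pearson's chi-square statistic: sum of (observed - expected)^2 / expected
x_squared <- sum((chi_sq - expected)^2 / expected)

# Degrees of freedom: (rows - 1) * (columns - 1)
dof <- (nrow(chi_sq) - 1) * (ncol(chi_sq) - 1)

# Upper-tail probability of the chi-square distribution
p_value <- pchisq(x_squared, df = dof, lower.tail = FALSE)

c(x_squared = x_squared, df = dof, p_value = p_value)
```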

====Wilcoxon Test====
'''The next important test is the [https://data.library.virginia.edu/the-wilcoxon-rank-sum-test/ Wilcoxon rank sum test]'''. What is most relevant here is that the actual values are not used in the calculation; instead, they are transformed into ranks. In other words, you no longer need to assume a normal distribution and instead reduce your values to their order. This can come in handy when you have a very skewed distribution (i.e. an exceptionally non-normal distribution) or large gaps in your data. The rank sum test compares two independent samples and tells you whether the values of one sample tend to be larger than those of the other (i.e. p-value below 0.05); for paired samples, the related Wilcoxon signed-rank test is used instead.

'''Example:''' An example would be comparing the height of young people with their height as adults. Imagine you have a sample in which half of the people are professional basketball players: their exceptional heights would dominate the real values, so comparing the raw sizes would not make much sense. Rank tests were introduced in modern statistics as a robust measure for such cases.

{| class="wikitable mw-collapsible mw-collapsed" style="width: 100%; background-color: white"
|-
! Expand here for an R example for the Wilcoxon Test.
|-
|<syntaxhighlight lang="R" line>
#R example for a Wilcoxon rank-sum test
#We want to analyse the iris dataset provided by R
iris <- iris
# ...
shapiro.test(setosa$Sepal.Length)
shapiro.test(virginica$Sepal.Length)
#both are normally distributed
#wilcoxon sum of ranks test
# ...
</syntaxhighlight>
|}
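The rank transformation described above can be illustrated in R. This sketch uses the iris data from the example and, for the paired case, the supplement race times from the paired t-test example; it computes the rank sum statistic W by hand and compares it with wilcox.test(). Setting exact = FALSE is our own choice to avoid warnings about tied values, not something prescribed by the original example.

```r
# Sepal lengths of two iris species (built-in iris data)
setosa_sl    <- iris$Sepal.Length[iris$Species == "setosa"]
virginica_sl <- iris$Sepal.Length[iris$Species == "virginica"]

# Step 1: pool both samples and replace the values by their ranks
# (tied values receive the average of their ranks)
pooled_ranks <- rank(c(setosa_sl, virginica_sl))

# Step 2: sum the ranks of the first sample and subtract the smallest possible
# rank sum n1 * (n1 + 1) / 2; this is the W statistic reported by R
n1 <- length(setosa_sl)
w_manual <- sum(pooled_ranks[seq_len(n1)]) - n1 * (n1 + 1) / 2

# Rank sum test for two independent samples (normal approximation)
rank_sum <- wilcox.test(setosa_sl, virginica_sl, exact = FALSE)

# For paired data, the signed-rank variant ranks the within-pair differences
s1 <- c(10.9, 12.5, 11.4, 13.6, 12.2, 13.2, 10.6, 14.3, 10.4, 10.3)
s2 <- c(15.7, 16.6, 18.2, 15.2, 17.8, 20.0, 14.0, 18.7, 16.0, 17.3)
signed_rank <- wilcox.test(s1, s2, paired = TRUE, exact = FALSE)

c(w_manual = w_manual, w_R = unname(rank_sum$statistic))
rank_sum$p.value
signed_rank$p.value
```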

====f-test====

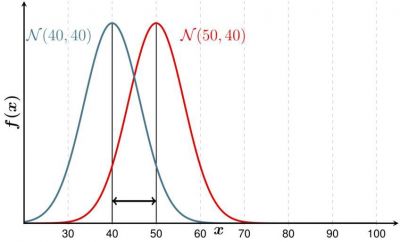

'''The f-test allows you to compare the ''variance'' of two samples.''' Variance is calculated by summing the squared deviations from the mean and dividing by the sample size minus one; it tells you the degree of spread in your dataset. The more spread out the data, the larger the variance. If the p-value of the f-test is lower than 0.05, the variances differ significantly. Important: for the f-test, the data of the samples has to be normally distributed.

'''Example''': If you examine players in a basketball and a hockey team, you would expect their heights to be different on average. But maybe the variance is not. Consider Figure 1, where the mean is different but the variance is the same; this could be the case for your hockey and basketball team. In contrast, the heights could be distributed as shown in Figure 2. The f-test would then probably yield a p-value below 0.05.

[[File:Normal distribution.jpg|400px|thumb|left|Figure 1 shows '''two datasets which are normally distributed, but shifted.''' Source: [https://snappygoat.com/s/?q=bestof%3ALda-gauss-variance-small.svg+en+Plot+of+two+normal+distributed+variables+with+small+variance+de+Plot+zweier+Normalverteilter+Variablen+mit+kleiner+Varianz#7c28e0e4295882f103325762899f736091eab855,0,3 snappy goat]]]
[[File:NormalDistribution2.png|400px|thumb|right|Figure 2 '''shows two datasets that are normally distributed, but have different variances'''. Source: [https://www.notion.so/sustainabilitymethods/Simple-Statistical-Tests-bcc0055304d44564bc41661453423134#7d57d8c251f94da8974eeb8a658aaa29 Wikimedia]]]

{| class="wikitable mw-collapsible mw-collapsed" style="width: 100%; background-color: white"
|-
! Expand here for an R example for the f-Test.
|-
|<syntaxhighlight lang="R" line>
#R example for an f-Test.
#We will compare the variances of height of two fictive populations. First, we create two vectors with the command 'rnorm'. Using rnorm, you can set how many values your vector should contain, as well as the mean and the standard deviation of the vector. To learn what else you can do with rnorm, type:
?rnorm
# ...
# 0.3858411
</syntaxhighlight>
|}
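The f-test statistic is simply the ratio of the two sample variances, F = s1^2 / s2^2, compared against an F-distribution with n1 - 1 and n2 - 1 degrees of freedom. Since the f-test example above is only partially shown, the following R sketch reuses the fertiliser data from the two-sample t-test section (our own choice of data, not the authors') and checks the manual computation against var.test().

```r
# Plant heights (cm) from the fertiliser example of the two-sample t-test
with_fert <- c(7.44, 6.35, 8.52, 11.40, 10.48, 11.23, 8.30, 9.33, 9.55, 10.40,
               8.36, 9.69, 7.66, 8.87, 12.89, 10.54, 6.72, 8.83, 8.57, 7.75)
without_fert <- c(6.07, 9.55, 5.72, 6.84, 7.63, 5.59, 6.21, 3.05, 4.32, 8.27,
                  6.13, 7.92, 4.08, 7.33, 9.91, 8.35, 7.26, 6.08, 5.81, 8.46)

# F statistic: ratio of the sample variances (var() divides by n - 1)
f_manual <- var(with_fert) / var(without_fert)
df1 <- length(with_fert) - 1
df2 <- length(without_fert) - 1

# Two-sided p-value: twice the smaller tail probability of the F-distribution
p_manual <- 2 * min(pf(f_manual, df1, df2),
                    pf(f_manual, df1, df2, lower.tail = FALSE))

# The same test with R's built-in function
result <- var.test(with_fert, without_fert)
c(f_manual = f_manual, f_R = unname(result$statistic),
  p_manual = p_manual, p_R = result$p.value)
```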

== Normativity & Future of Simple Tests ==
'''Simple tests are not widely applied in scientific research these days, and often seem outdated.''' Many scientific designs and available datasets are more complex than what simple tests can handle, and many branches of science have established more complex designs and a more nuanced view of the world. Consequently, simple tests have somewhat gone out of fashion.

However, simple tests are not only robust, but sometimes still the most parsimonious approach. In addition, many simple tests form the basis of more complicated approaches, and they provided an early, applied starting point for frequentist statistics.

Simple tests are often the endpoint of introductory courses on statistics, which is unfortunate. Their absence from most recent publications as well as the rigid design frameworks of these approaches make these tests an unattractive starting point for many students, yet they are a vital stepping stone to more advanced models.

Hopefully, one day school children will learn simple tests, because they could, and the world would be all the better for it. If more people learned about probability early on (and simple tests are a stepping stone on this long road), education would be more deeply rooted in data and analysis, allowing citizens to make better choices and to better understand the world.

== Further Links ==
* Videos
[https://www.youtube.com/watch?v=HZ9xZHWY0mw The Hypothesis Song]: A little musical introduction to the topic

[https://www.youtube.com/watch?v=7_cs1YlZoug Chi-Square Test]: Example calculations from the world of e-sports

[https://www.youtube.com/watch?v=FlIiYdHHpwU F test]: An example calculation

* Articles
[https://www.sciencedirect.com/science/article/pii/S0092867408009537 History of the Hypothesis]: A brief article through the history of science

[https://data.library.virginia.edu/the-wilcoxon-rank-sum-test/ Wilcoxon Rank Sum Test]: A detailed example calculation in R

[http://www.sthda.com/english/wiki/f-test-compare-two-variances-in-r F test]: An example in R

---- | ---- | ||

Revision as of 15:34, 18 March 2024


```r
# R example for a two-sample t-test.
# The two-sample t-test can be used to test whether two samples differ significantly in their means.
# H0 hypothesis: The two samples' means are not different.
# H1 hypothesis: The two samples' means are different.

# We want to analyse the iris dataset provided by R
iris <- iris

# Let's have a first look at the data
head(iris)
str(iris)
summary(iris)

# The dataset gives the measurements in centimeters of the variables 'sepal length', 'sepal width',
# 'petal length' and 'petal width' for 50 flowers from each of 3 species of Iris
# (Iris setosa, versicolor, and virginica).

# Let's split the dataset into 3 datasets, one per species:
versicolor <- subset(iris, Species == "versicolor")
virginica <- subset(iris, Species == "virginica")
setosa <- subset(iris, Species == "setosa")

# Let us test whether the difference in petal length between versicolor and virginica is significant:
# H0 hypothesis: versicolor and virginica do not differ in petal length
# H1 hypothesis: versicolor and virginica differ in petal length

# What does the t-test say?
t.test(versicolor$Petal.Length, virginica$Petal.Length)
# R reports p-value < 2.2e-16, which is far below 0.05 (it's almost 0).
# Therefore we reject the H0 hypothesis and accept the H1 hypothesis.

# -------------- additional remarks on Welch's t-test --------------
# The widely known Student's t-test assumes equal variances of the samples.
# Let's check whether this is the case for our example above by means of an f-test.
# If the variances were unequal, we could use a Welch t-test instead, which can deal with unequal variances.
var.test(versicolor$Petal.Length, virginica$Petal.Length)
# The resulting p-value is above 0.05, so we cannot reject the assumption that the variances are equal.
# Hence, the use of a Student's t-test would be justified.

# Interestingly, R uses the Welch t-test by default, because it can deal with differing variances.
# Accordingly, what we performed above is a Welch t-test.
# As the variances of our samples do not differ significantly, we could also use a Student's t-test.
# To do so, we set the argument "var.equal" of t.test() to TRUE:
t.test(versicolor$Petal.Length, virginica$Petal.Length, var.equal = TRUE)
# Compared to the Welch t-test above, the degrees of freedom (df) change a little,
# and the p-value is again reported as < 2.2e-16.
# Our conclusion therefore stays the same. What a relief.
```
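To see what `t.test()` computes in its default (Welch) mode, the following minimal sketch recalculates the t statistic and the Welch-Satterthwaite degrees of freedom by hand for the same petal-length comparison and prints them next to R's own values. The vector names `pl_versicolor` and `pl_virginica` are introduced here for illustration only.

```r
# Petal lengths of the two species as plain numeric vectors (names are illustrative)
pl_versicolor <- subset(iris, Species == "versicolor")$Petal.Length
pl_virginica  <- subset(iris, Species == "virginica")$Petal.Length

n1 <- length(pl_versicolor)
n2 <- length(pl_virginica)
v1 <- var(pl_versicolor)
v2 <- var(pl_virginica)

# Standard error of the difference in means, without assuming equal variances
se <- sqrt(v1 / n1 + v2 / n2)
t_welch <- (mean(pl_versicolor) - mean(pl_virginica)) / se

# Welch-Satterthwaite approximation of the degrees of freedom
df_welch <- (v1 / n1 + v2 / n2)^2 /
  ((v1 / n1)^2 / (n1 - 1) + (v2 / n2)^2 / (n2 - 1))

# Two-sided p-value from the t distribution
p_welch <- 2 * pt(-abs(t_welch), df = df_welch)

# Compare with R's built-in Welch t-test
builtin <- t.test(pl_versicolor, pl_virginica)
print(c(manual_t = t_welch, builtin_t = unname(builtin$statistic)))
print(c(manual_df = df_welch, builtin_df = unname(builtin$parameter)))
```

The pooled-variance Student's t-test differs only in the standard error and in using `n1 + n2 - 2` degrees of freedom, which is why its df in the example above is a round number.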

Paired t-test

Paired t-tests are the third type of simple test covered here. They allow for a comparison of a sample before and after an intervention. Within such an experimental setup, the same individuals are measured before and after an event, so that the influence of the event on the dataset can be evaluated. If the values change significantly between the start and the end state, you will again receive a p-value below 0.05.

Important: for the paired t-test, a few assumptions need to be met.

- Differences between paired values follow a normal distribution.

- The data is continuous.

- The samples are paired or dependent.

- Each unit has an equal probability of being selected.
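The first assumption refers to the differences between the paired values, not to each sample on its own. A minimal sketch of how it could be checked in R with a Shapiro-Wilk test (`shapiro.test()`), reusing the supplement race times from the R example of this section. The decision rule in the `if` statement is an illustrative convention, not a fixed requirement.

```r
# Race times (minutes) of the same 10 athletes with supplement 1 and supplement 2
s1 <- c(10.9, 12.5, 11.4, 13.6, 12.2, 13.2, 10.6, 14.3, 10.4, 10.3)
s2 <- c(15.7, 16.6, 18.2, 15.2, 17.8, 20.0, 14.0, 18.7, 16.0, 17.3)

# The normality assumption concerns the pairwise differences
differences <- s1 - s2

# Shapiro-Wilk test; H0: the differences come from a normal distribution
normality <- shapiro.test(differences)
print(normality)

# Illustrative rule: p > 0.05 means we cannot reject normality of the differences
if (normality$p.value > 0.05) {
  message("No evidence against normality: a paired t-test is reasonable.")
} else {
  message("Differences look non-normal: consider a Wilcoxon signed-rank test.")
}
```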

Example: An easy example would be the behaviour of nesting birds. The range of birds outside of the breeding season differs dramatically from their range while they are nesting. Measuring the range of the same individual birds in both periods yields paired data.

For more details on t-tests, please refer to the T-Test entry.

```r
# R example for a paired samples t-test
# The paired t-test can be used when we want to see whether a treatment had a significant
# effect on a sample, compared to when the treatment was not applied. The samples with and
# without treatment are dependent, or paired, with each other.

# Example:
# Consider the following dataset on the effect of 2 supplements on the time
# it takes an athlete (in minutes) to finish a practice race
s1 <- c(10.9, 12.5, 11.4, 13.6, 12.2, 13.2, 10.6, 14.3, 10.4, 10.3)
s2 <- c(15.7, 16.6, 18.2, 15.2, 17.8, 20.0, 14.0, 18.7, 16.0, 17.3)
id <- c(1, 2, 3, 4, 5, 6, 7, 8, 9, 10)
supplement <- data.frame(id, s1, s2)
supplement$difference <- supplement$s1 - supplement$s2
View(supplement)  # opens a data viewer (e.g. in RStudio); use print(supplement) if none is available

# Exploring the data
summary(supplement)
str(supplement)

# Now we can run the paired samples t-test.
# Hypotheses (two-tailed):
# H0: There is no difference in the mean time taken to finish the race
#     with the 2 supplements (the mean pairwise difference is 0)
# H1: There is a difference in the mean time taken to finish the race
#     with the 2 supplements (the mean pairwise difference is not 0)
t.test(supplement$s1, supplement$s2, paired = TRUE)
# As the p-value is less than the level of significance (0.05), we have
# sufficient evidence to reject H0 and conclude that there is a significant
# difference in the mean time taken to finish the race with the 2 supplements.

# Alternatively, if we wanted to check which supplement gives a better result,
# we could use a one-tailed test by setting the argument "alternative" to "less" or "greater",
# e.g. t.test(supplement$s1, supplement$s2, paired = TRUE, alternative = "less")
```
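A paired t-test is the same as a one-sample t-test on the pairwise differences, tested against a mean of 0. The sketch below, again using the supplement data, checks this equivalence and runs the one-tailed variant mentioned at the end of the example. Here `alternative = "less"` tests whether the times with `s1` are shorter than with `s2`.

```r
s1 <- c(10.9, 12.5, 11.4, 13.6, 12.2, 13.2, 10.6, 14.3, 10.4, 10.3)
s2 <- c(15.7, 16.6, 18.2, 15.2, 17.8, 20.0, 14.0, 18.7, 16.0, 17.3)

# Paired t-test and one-sample t-test on the differences
paired_test     <- t.test(s1, s2, paired = TRUE)
one_sample_test <- t.test(s1 - s2, mu = 0)

# Both yield the same t statistic, degrees of freedom and p-value
print(c(paired_t = unname(paired_test$statistic),
        one_sample_t = unname(one_sample_test$statistic)))

# One-tailed test: H1 is that the mean difference s1 - s2 is below 0,
# i.e. athletes are faster with supplement 1
one_tailed <- t.test(s1, s2, paired = TRUE, alternative = "less")
print(one_tailed)
```

Because the observed mean difference is negative, the one-tailed p-value is exactly half of the two-tailed one.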

Chi-square Test of Stochastic Independence

The chi-square test can be used to check whether one variable influences another one, or whether they are independent of each other. The chi-square test works only with categorical data, i.e. counts of observations falling into categories.

Example: Do the children of parents with an academic degree visit a university more often, for example because they have higher chances to achieve good results in school? The table on the right shows the data that we can use for the Chi-Square test.

For this example, the chi-square test yields a p-value of 2.439e-07, which is close to zero. We can therefore reject the null hypothesis that there is no dependency, and instead assume, based on our sample, that the education of parents has an influence on the education of their children.
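For a contingency table with $r$ rows and $c$ columns, Pearson's chi-square statistic compares the observed counts $O_{ij}$ with the counts $E_{ij}$ expected under independence. The expected counts are computed from the row totals $R_i$, the column totals $C_j$ and the total sample size $N$. This is the standard textbook form:

$$
\chi^2 = \sum_{i=1}^{r}\sum_{j=1}^{c} \frac{\left(O_{ij} - E_{ij}\right)^2}{E_{ij}}, \qquad E_{ij} = \frac{R_i \, C_j}{N}, \qquad df = (r-1)(c-1)
$$

The p-value is then taken from a chi-square distribution with $df$ degrees of freedom. For the 2 × 3 forest table in the R example of this section, $df = (2-1)(3-1) = 2$.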

```r
# R example for the chi-square test

# We create a table in which two forests are differentiated according to their
# distribution of male, female and juvenile trees.
chi_sq <- as.table(rbind(c(16, 20, 19), c(23, 12, 10)))
dimnames(chi_sq) <- list(forest = c("ForestA", "ForestB"),
                         gender = c("male", "female", "juvenile"))
View(chi_sq)

# The question is whether the abundance of trees in these forests is random
# (i.e. stochastically independent), or whether the two variables (forest, gender)
# influence each other.
# For example, is it just coincidence that there are 23 male trees in forest B but only 16 in forest A?
# H0: there is no dependence between the variables
# H1: there is a dependence between the variables

chisq.test(chi_sq)

# Results of Pearson's chi-squared test:
#   data:  chi_sq
#   X-squared = 5.1005, df = 2, p-value = 0.07806

# p-value > 0.05 -> we cannot reject H0.
# Thus, our data provide no evidence of a dependency between the variables
# (which does not prove that they are independent).
```
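The statistic reported by `chisq.test()` can be reproduced by hand. This minimal sketch computes the expected counts under independence for the forest table, sums the scaled squared deviations and takes the p-value from `pchisq()`. For tables larger than 2 × 2, `chisq.test()` applies no continuity correction, so the values should match. A common rule of thumb is that expected counts should generally be at least 5. Here the smallest expected count is 13.05.

```r
# Forest table from the example above
chi_sq <- as.table(rbind(c(16, 20, 19), c(23, 12, 10)))
dimnames(chi_sq) <- list(forest = c("ForestA", "ForestB"),
                         gender = c("male", "female", "juvenile"))

# Expected counts under independence: row total * column total / grand total
n <- sum(chi_sq)
expected <- outer(rowSums(chi_sq), colSums(chi_sq)) / n
print(expected)

# Pearson's chi-square statistic and its degrees of freedom
x2 <- sum((chi_sq - expected)^2 / expected)
df <- (nrow(chi_sq) - 1) * (ncol(chi_sq) - 1)

# Upper-tail probability of the chi-square distribution
p <- pchisq(x2, df = df, lower.tail = FALSE)

builtin <- chisq.test(chi_sq)
print(c(manual_X2 = x2, builtin_X2 = unname(builtin$statistic)))
print(c(manual_p = p, builtin_p = builtin$p.value))
```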

Wilcoxon Test

The next important test is the Wilcoxon test. It comes in two versions: the rank-sum test compares two independent samples, while the signed-rank test is its counterpart for paired samples. What is most relevant here is that the real numbers are not introduced into the calculation; instead, they are transformed into ranks. In other words, you no longer need to assume a normal distribution, and instead reduce your real numbers to an order of numbers. This can come in handy when you have a very skewed distribution, i.e. an exceptionally non-normal distribution, or large gaps in your data. Using ranks, the test tells you whether the locations (often interpreted as the medians) of two samples differ significantly (i.e. p-value below 0.05).

Example: An example would be comparing the height of young people with their height as adults. Imagine you have a sample where half of your people are professional basketball players: the actual heights would then be dominated by these extreme values, and comparing them directly would not make sense. Therefore, rank tests were introduced as a robust measure in modern statistics.
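A minimal sketch of the rank idea, using hypothetical heights (cm) for two small independent groups, with two very tall people in the second group. It computes the rank-sum statistic W the way `wilcox.test()` reports it: the rank sum of the first sample minus its smallest possible value, n(n + 1)/2. It then shows that making the tall values even more extreme leaves the result unchanged, because only the order matters. All data values are invented for illustration.

```r
# Hypothetical heights (cm) of two independent groups; invented for illustration
group_a <- c(165, 170, 172, 168, 175, 169)
group_b <- c(180, 178, 210, 185, 176, 215)  # contains two very tall people

# Rank all values together (1 = smallest)
pooled_ranks <- rank(c(group_a, group_b))
n_a <- length(group_a)

# Rank-sum statistic as reported by wilcox.test():
# sum of the ranks of group_a minus the smallest possible rank sum n_a * (n_a + 1) / 2
W_manual <- sum(pooled_ranks[seq_len(n_a)]) - n_a * (n_a + 1) / 2
result <- wilcox.test(group_a, group_b)
print(c(manual_W = W_manual, builtin_W = unname(result$statistic)))

# Make the two tall values even more extreme: the ranks, and hence W and p, stay the same
group_b_extreme <- c(180, 178, 250, 185, 176, 260)
result_extreme <- wilcox.test(group_a, group_b_extreme)
print(c(p_original = result$p.value, p_extreme = result_extreme$p.value))
```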

```r
# R example for a Wilcoxon rank-sum test
# We want to analyse the iris dataset provided by R
iris <- iris

# Let's have a first look at the data
head(iris)
str(iris)
summary(iris)

# The dataset gives the measurements in centimeters of the variables sepal length and width
# and petal length and width for 50 flowers from each of 3 species of iris.
# The species are Iris setosa, versicolor, and virginica.

# Let's split the dataset into 3 datasets, one per species
versicolor <- subset(iris, Species == "versicolor")
virginica <- subset(iris, Species == "virginica")
setosa <- subset(iris, Species == "setosa")

# Now we can, for example, test whether the difference in sepal length between setosa and virginica is significant:
# H0 hypothesis: The medians (distributions) of setosa and virginica are equal
# H1 hypothesis: The medians (distributions) of setosa and virginica differ

# Test for normality
shapiro.test(setosa$Sepal.Length)
shapiro.test(virginica$Sepal.Length)
# Neither test rejects normality (both p-values > 0.05), so a t-test would also be
# possible here; the Wilcoxon test is shown for illustration.

# Wilcoxon rank-sum test
wilcox.test(setosa$Sepal.Length, virginica$Sepal.Length)
# R may warn that it cannot compute an exact p-value because of tied values;
# it then uses a normal approximation.
# The p-value is reported as < 2.2e-16, which is far below 0.05 (it's almost 0).
# Therefore we reject the H0 hypothesis and accept the H1 hypothesis.
# Based on this result we may conclude that the medians of these two distributions differ.
# The alternative hypothesis is stated as "true location shift is not equal to 0".
# That's another way of saying "the distribution of one population is shifted to the left or
# right of the other", which implies different medians.
```
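The iris example uses two independent samples. For paired data, such as the example of young people measured again as adults, `wilcox.test()` runs the signed-rank version when called with `paired = TRUE`. Below is a minimal sketch reusing the supplement race times from the paired t-test section. `exact = FALSE` requests the normal approximation, because the absolute differences contain (near-)tied values.

```r
# Race times (minutes) of the same 10 athletes with two supplements
s1 <- c(10.9, 12.5, 11.4, 13.6, 12.2, 13.2, 10.6, 14.3, 10.4, 10.3)
s2 <- c(15.7, 16.6, 18.2, 15.2, 17.8, 20.0, 14.0, 18.7, 16.0, 17.3)

# Signed-rank test: ranks are computed on the absolute pairwise differences s1 - s2,
# and V is the sum of the ranks belonging to positive differences
signed_rank <- wilcox.test(s1, s2, paired = TRUE, exact = FALSE)
print(signed_rank)
```

Every athlete was faster with `s1`, so there are no positive differences and V is 0, the most extreme value possible.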

f-test

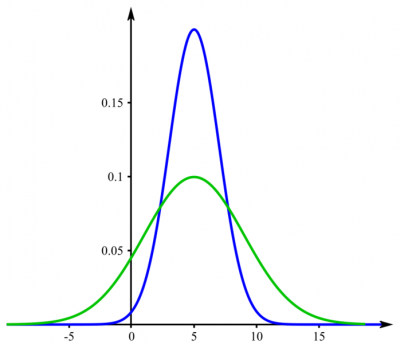

The f-test allows you to compare the variances of two samples. Variance is calculated by taking the average of the squared deviations from the mean (for a sample, the sum is divided by n - 1 instead of n). It tells you the degree of spread in your dataset: the more spread out the data, the larger the variance. If the p-value of the f-test is lower than 0.05, the variances differ significantly. Important: for the f-test, the data of both samples have to be normally distributed, as the test is very sensitive to departures from normality (see Box 1953).
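Written out, the sample variance and the f-test statistic take the following standard form, where $n$ is the sample size and $\bar{x}$ the sample mean. Under $H_0$ (equal variances) and with normally distributed data, $F$ follows an F distribution with $n_A - 1$ and $n_B - 1$ degrees of freedom:

$$
s^2 = \frac{1}{n-1}\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^2, \qquad F = \frac{s_A^2}{s_B^2}
$$

With 40 values per sample, as in the R example of this section, this gives 39 and 39 degrees of freedom.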

Example: If you examine the players of a basketball team and a hockey team, you would expect their heights to differ on average. But maybe their variances do not. Consider Figure 1, where the means differ but the variances are the same; this could be the case for your hockey and basketball teams. In contrast, the heights could be distributed as shown in Figure 2. The f-test would then probably yield a p-value below 0.05.

```r
# R example for an f-test

# We will compare the variances of height of two fictive populations.
# First, we create two vectors with the command 'rnorm'. Using rnorm, you can decide how many
# values your vector should contain, besides the mean and the standard deviation of the vector.
# To learn what else you can do with rnorm, type:
?rnorm

# Creating two vectors
PopA <- rnorm(40, mean = 175, sd = 1)
PopB <- rnorm(40, mean = 182, sd = 2)

# Comparing them visually by creating histograms
hist(PopA)
hist(PopB)

# Conducting an f-test to compare the variances
var.test(PopA, PopB)

# And this is the result, telling you that the two variances differ significantly.
# (rnorm draws random numbers, so your exact values will differ from run to run.)
#
#   F test to compare two variances
#
#   data:  PopA and PopB
#   F = 0.38584, num df = 39, denom df = 39, p-value = 0.00371
#   alternative hypothesis: true ratio of variances is not equal to 1
#   95 percent confidence interval:
#    0.2040711 0.7295171
#   sample estimates:
#   ratio of variances
#            0.3858411
```
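To connect the output to the formula, this sketch recomputes the variance ratio and the two-sided p-value by hand with `pf()`, as `var.test()` does, and compares them with the built-in result. The `set.seed(1)` call is an addition for reproducibility only, so the numbers will differ from the output shown above.

```r
set.seed(1)  # added only to make this sketch reproducible
PopA <- rnorm(40, mean = 175, sd = 1)
PopB <- rnorm(40, mean = 182, sd = 2)

# F statistic: ratio of the two sample variances
F_manual <- var(PopA) / var(PopB)
df_a <- length(PopA) - 1
df_b <- length(PopB) - 1

# Two-sided p-value: twice the smaller tail probability of the F distribution
p_lower <- pf(F_manual, df_a, df_b)
p_manual <- 2 * min(p_lower, 1 - p_lower)

builtin <- var.test(PopA, PopB)
print(c(F_manual = F_manual, F_builtin = unname(builtin$statistic)))
print(c(p_manual = p_manual, p_builtin = builtin$p.value))
```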

Normativity & Future of Simple Tests

Simple tests are not abundantly applied in scientific research these days, and they often seem outdated. Many scientific designs and available datasets are more complicated than what we can handle with simple tests, and many branches of science have established more complex designs and a more nuanced view of the world. Consequently, simple tests have somewhat gone out of fashion.

However, simple tests are not only robust, but sometimes still the most parsimonious approach. In addition, many simple tests are the basis of more complicated approaches, and they provided a deeper and more applied starting point for frequentist statistics.

Simple tests are often the endpoint of introductory courses on statistics, which is unfortunate. Their absence from most recent publications, as well as the rigid design frames of these approaches, make these tests an undesirable starting point for many students, yet they are a vital stepping stone to more advanced models.

Hopefully, one day school children will learn simple tests, because they could, and the world would be all the better for it. If more people learned about probability early on, and simple tests are a stepping stone on this long road, education would be more deeply rooted in data and analysis, allowing citizens to make better choices and gain a better understanding.

Key Publications

- "Student" (William Sealy Gosset). 1908. The probable error of a mean. Biometrika 6 (1). 1–25.

- Cochran, William G. 1952. The Chi-square Test of Goodness of Fit. The Annals of Mathematical Statistics 23 (3). 315–345.

- Box, G. E. P. 1953. Non-Normality and Tests on Variances. Biometrika 40 (3/4). 318–335.

References

(1) Article on the "Student's t-test" on Wikipedia

Further Links

- Videos

The Hypothesis Song: A little musical introduction to the topic

Hypothesis Testing: An introduction of the Null and Alternative Hypothesis

The Scientific Method: The musical way to remember it

Popper's Falsification: The explanation why not all swans are white

Type I & Type II Error: A quick explanation

Validity: An introduction to the concept

Reliability: A quick introduction

The Confidence Interval: An explanation with vivid examples

Choosing which statistical test to use: A very detailed video with lots of examples

One sample t-test: An example calculation

Two sample t-test: An example calculation

Introduction into z-test & t-test: A detailed video

Chi-Square Test: Example calculations from the world of e-sports

F test: An example calculation

- Articles

History of the Hypothesis: A brief journey through the history of science

The James Lind Initiative: One of the earliest examples for building hypotheses

The Scientific Method: A detailed and vivid article

Falsification: An introduction to Critical Rationalism (German)

Statistical Validity: An overview of all the different types of validity

Reliability & Validity: An article on their relationship

Statistical Reliability: A brief article

Reliability Analysis: An overview of different approaches

How reliable are the Social Sciences?: A short article by The New York Times

Uncertainty, Error & Confidence: A very long & detailed article

Student t-test: A detailed summary

One sample t-test: A brief explanation

Two sample t-test: A short introduction

Paired test: A detailed summary

Chi-Square Test: A vivid article

Wilcoxon Rank Sum Test: A detailed example calculation in R

F test: An example in R

The authors of this entry are Henrik von Wehrden and Carlo Krügermeier.