Content Analysis


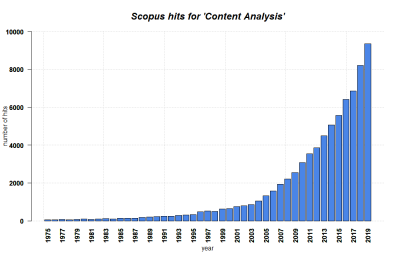

Figure: SCOPUS hits for Content Analysis until 2019. Search term: 'content analysis' in Title, Abstract, Keywords. Source: own.


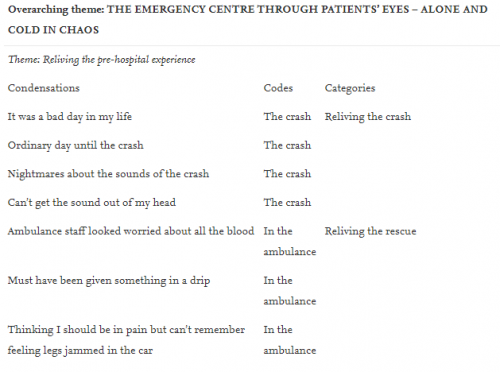

Figure: The coding results for statements from the interview transcript above, grouped into one of several themes. Source: Erlingsson & Brysiewicz 2017.

Latest revision as of 12:31, 7 March 2024

Method Categorisation:

Quantitative - **Qualitative**

**Deductive** - **Inductive**

**Individual** - System - Global

**Past** - **Present** - Future

In short: Content Analysis relies on the summarizing of data (most commonly text) into content categories based on pre-determined rules and the analysis of the "coded" data.


Background

Early approaches to text analysis can be found in Hermeneutics, but quantitative Content Analysis itself emerged during the 1930s based on the sociological work of Lazarsfeld and Lasswell in the USA (4). The method prospered in the study of mass communications in the 1950s (2). Starting in the 1960s, the method was adapted to the specific needs of diverse disciplines, with Qualitative Content Analysis emerging from a critique of the lack of communicative contextualization in the earlier, purely quantitative approaches (4). Today, the method is applied in a wide range of social sciences and humanities, including communication science, anthropology, political science, psychology, education, and literature, among others (1, 2).

What the method does

Content Analysis is a "(...) systematic, replicable technique for compressing many words of text into fewer content categories based on explicit rules of coding" (Stemler 2000, p.1). However, the method entails more than mere word-counting. Instead, Content Analysis relies on the interpretation of the data by the researcher. The mostly qualitative data material is assessed by creating a category system relevant to the material, and attributing parts of the content to individual categories (Schreier 2014). The analysis evaluates not only the content of a source, but also its formal aspects as well as contextual psychological, institutional, and cultural elements of the communication process (1, 4). "[Content Analysis] seeks to analyze data within a specific context in view of the meanings someone - a group or a culture - attributes to them." (Krippendorff 1989, p.403). Because of this, Content Analysis is a potent method to identify trends and patterns in (text) sources, to determine authorship, or to monitor (public) opinions on a specific topic (3).

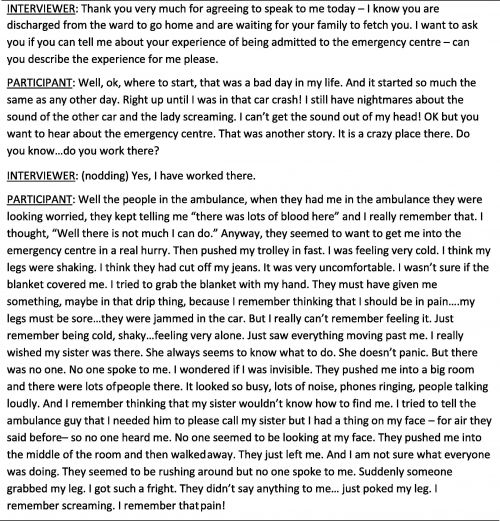

Apart from text, a diverse set of data can be analyzed using Content Analysis. "Anything that occurs in sufficient numbers and has reasonably stable meanings for a specific group of people may be subjected to content analysis." (Krippendorff 1989, p.404). The data must convey a message to the receiver and be durable (2, 3). Often, Content Analysis focuses on data that are difficult or impossible to interpret with other methods (3). The data may exist 'naturally' and be publicly available, for example verbal discourse, written documents, or visual representations from mass media (newspapers, books, films, comics etc.); or be rather unavailable to the public, such as personal letters or witness accounts. The data may also be generated for the research purpose (e.g. interview transcripts) (1, 2, 4).

While there is a wide range of qualitative Content Analysis approaches, this entry focuses on their shared characteristics. For more information on the different variations, please refer to Schreier (2014) in the Key Publications.

General Methodological Process

- Any Content Analysis starts with the Design Phase in which the research questions are defined, the potential sources are gathered, and the analytical constructs are established that connect the prospective data to the general target of the analysis. These constructs can be based on existing theories or practices, the experience and knowledge of experts, or previous research (2).

- Next, the Unitizing is done, i.e. the definition of analysis units. A distinction may be made between sampling units (= sources of data, e.g. newspaper articles, interviews) and analysis units (= units of data that are coded and analyzed, e.g. single words or broader messages), with different approaches to identifying both (see Krippendorff 2004).

- Also, the sampling method is determined and the sample is drawn.

- Then, the Coding Scheme - or 'Codebook' - is developed and the data are coded. 'Coding' generally describes the transfer of the available data into more abstract groups. A code is a label that represents a group of words that share a similar meaning (3). Codes may also be grouped into categories if they thematically belong together. There are two typical approaches to developing the coding scheme: a rather theory-driven and a rather data-driven approach. In a theory-driven approach, codes are developed based on theoretical constructs, research questions and elements such as an interview guide, which leads to a rather deductive coding procedure. In a data-driven approach, the coding system is developed as the researcher openly scans subsamples of the available material and develops codes based on these initial insights, which is a rather inductive process. Often, both approaches are combined, with an initial set of codes being derived from theory, and then iteratively adapted through a first analysis of the available material.

- With the coding scheme at hand, the researcher reads (repeatedly) through the data material and assigns each analysis unit to one of the codes. The coding process may be conducted by humans, or - if sufficiently explicit coding instructions are possible - by a computer. To ensure reliability, the codes should be intersubjective, i.e. every researcher should be able to code similarly. This is why the codes must be exhaustive in terms of the overarching construct, mutually exclusive, and clearly defined, with unambiguous examples as well as exclusion criteria.

- Last, the coded data are analyzed and interpreted whilst taking into account the theoretical constructs that underlie the research. Inferences are drawn, which - according to Mayring (2000) - may focus on the communicator, the message itself, the socio-cultural context of the message, or on the message's effect. The findings may be validated against other information sources, which is often difficult when Content Analysis is used in cases where there is no other possibility to access this knowledge. Finally, new hypotheses may also be formulated (all from 1, 2).
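The unitizing and coding steps above can be sketched in a few lines of Python for the case where coding instructions are explicit enough for a computer. This is only an illustrative sketch: the codebook entries, the keywords, the sentence-level analysis unit, and the function names `unitize` and `code_unit` are assumptions made for this example and are not taken from the cited literature.

```python
import re

# Hypothetical codebook: every code has a definition and indicator keywords.
# A real codebook would also list examples and exclusion criteria.
CODEBOOK = {
    "cost": {
        "definition": "Statements about prices or expenses",
        "keywords": ["price", "expensive", "cost"],
    },
    "trust": {
        "definition": "Statements about confidence in other people",
        "keywords": ["trust", "rely", "reliable"],
    },
}


def unitize(document):
    """Split a sampling unit (a document) into sentences as analysis units."""
    parts = re.split(r"(?<=[.!?])\s+", document)
    return [part.strip() for part in parts if part.strip()]


def code_unit(unit, codebook=CODEBOOK):
    """Assign exactly one code to an analysis unit (mutually exclusive codes).

    The code with the most keyword hits wins; ties are resolved by the order
    of the codebook. Units without any hit are labelled 'uncoded'.
    """
    text = unit.lower()
    hits = {
        code: sum(
            len(re.findall(r"\b" + re.escape(keyword) + r"\w*", text))
            for keyword in entry["keywords"]
        )
        for code, entry in codebook.items()
    }
    best = max(hits, key=hits.get)
    return best if hits[best] > 0 else "uncoded"


if __name__ == "__main__":
    interview = "The tickets are too expensive. I rely on my doctor. It rained."
    for unit in unitize(interview):
        print(code_unit(unit), "<-", unit)
```

Such keyword matching cannot interpret context or ambiguity, so in a qualitative analysis its output would at most serve as a first pass that a human coder reviews.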

Qualitative Content Analysis is a rather inductive process. It is guided by the research questions - hypotheses may be tested, but this is not the main goal. As with much of qualitative research, the method attempts to understand the (subjective) meaning behind the analyzed data and to draw conclusions from there. Its purpose is to capture the 'bigger picture', including the communicative context surrounding the genesis of the data. Qualitative Content Analysis is also highly iterative. The coding scheme is often not determined prior to the coding process. Instead, its development is guided by the research questions and done in close contact with the data, e.g. by reading through all data first and identifying relevant themes. The codes are then refined iteratively as the researcher applies them to the data. In a comparative analysis of two texts, for example, codes are developed based on the first text, revised while reading through the second text, and then jointly applied to the first text again. New research questions may also be added during the analysis if the first insights into the data raise them. This approach is closely related to Grounded Theory.

With regard to the coding process, the analysis units are assigned to the codes but not statistically analysed. There is one exception to this - evaluative content analysis, where statements are evaluated on a nominal or ordinal scale, which makes simple quantitative (statistical) analyses possible. However, for most forms of qualitative Content Analysis, the items assigned to each code are interpreted qualitatively and conclusions are inferred from these interpretations. Examples and quotes may be used to illustrate the findings. Overall, this approach requires a deep understanding of the data and their meaning, a proper description of specific cases, and possibly a triangulation with other data sources on the same subject.
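To illustrate what a 'simple quantitative analysis' of evaluative codings might look like, the following sketch counts ratings per code and reports the median. The three-point ordinal scale (1 = low, 2 = medium, 3 = high), the example ratings, and the function name `summarize` are hypothetical and only serve the illustration.

```python
from collections import Counter
from statistics import median

# Hypothetical evaluative codings: (analysis unit id, code, ordinal rating)
# with 1 = low, 2 = medium, 3 = high.
RATINGS = [
    ("u1", "trust", 3),
    ("u2", "trust", 2),
    ("u3", "trust", 3),
    ("u4", "cost", 1),
]


def summarize(ratings):
    """Return the count, rating distribution and median rating for each code."""
    by_code = {}
    for _unit_id, code, score in ratings:
        by_code.setdefault(code, []).append(score)
    return {
        code: {
            "n": len(scores),
            "distribution": dict(Counter(scores)),
            "median": median(scores),
        }
        for code, scores in by_code.items()
    }


if __name__ == "__main__":
    for code, stats in summarize(RATINGS).items():
        print(code, stats)
```

The median is used here instead of the mean because the distances between ordinal scale points are not assumed to be equal.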

Strengths & Challenges

- Content Analysis counteracts biases because it "(...) assures not only that all units of analysis receive equal treatment, whether they are entered at the beginning or at the end of an analysis but also that the process is objective in that it does not matter who performs the analysis or where and when." (Krippendorff 1989, p.404; see Normativity). In this case, 'objective' refers to 'intersubjective'. Yet, biases cannot be entirely prevented, e.g. in the sampling process, the development of the coding scheme, or the interpretation and evaluation of the coded data.

- Content Analysis allows researchers to apply their own social-scientific constructs "by which texts may become meaningful in ways that a culture may not be aware of." (Krippendorff 1989, p.404)

- However, especially in the case of qualitative analyses of smaller samples, the theory and hypotheses derived from the data cannot be generalized beyond these data (1). Triangulation, i.e. the comparison of the findings to other knowledge on the same topic, may provide more validity to the conclusions (see Normativity).

Normativity

Quality Criteria

- Reliability is difficult to maintain in Content Analysis. A clear and unambiguous definition of codes as well as tests of inter-coder reliability represent attempts to ensure inter-subjectivity and thus stability and reproducibility (3, 4). However, the ambiguous nature of the data demands an interpretative analysis process - especially in the qualitative approach. This interpretation of the texts or contents may interfere with the intended inter-coder reliability.

- Validity of the inferred results - i.e. the fit of the results to the intended kind of knowledge - may be reached a) through the researchers' knowledge of contextual factors of the data and b) through validation with other sources, e.g. by means of triangulation. However, the latter can be difficult due to the uniqueness of the data (1-3). Any content analysis is a reduction of the data, which should be acknowledged critically, and which is why the coding scheme should be precise and include the aforementioned explanations, examples and exclusion criteria.
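One common way to test inter-coder reliability is Cohen's kappa, which corrects the observed agreement between two coders for the agreement expected by chance. The entry itself does not name a specific measure, so the choice of Cohen's kappa, the function name `cohens_kappa` and the example codings below are assumptions for illustration; Krippendorff's alpha is another frequently used option.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who coded the same analysis units.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed share of
    agreement and p_e is the agreement expected by chance.
    """
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must code the same non-empty set of units.")
    n = len(coder_a)
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if p_e == 1.0:
        # Both coders used one identical code throughout; kappa is undefined,
        # and this sketch reports full agreement.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    # Hypothetical codings of four analysis units by two coders.
    coder_1 = ["cost", "cost", "trust", "trust"]
    coder_2 = ["cost", "trust", "trust", "trust"]
    print(cohens_kappa(coder_1, coder_2))
```

Values close to 1 indicate strong agreement beyond chance, while values near 0 suggest that the code definitions may need to be sharpened before coding continues.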

Connectedness

- Since verbal discourse is an important data source for Content Analysis, the method is often used to analyze transcripts of standardized, open, or semi-structured interviews.

- Content Analysis is one form of textual analysis. The latter also includes other approaches such as discourse analysis, rhetorical analysis, or ethnographic analysis (2). However, Content Analysis differs from these methods in terms of methodological elements and the kinds of questions it addresses (2).

Outlook

- Automated coding by computers may be seen as one important future direction of the method (1, 5). To date, the human interpretation of ambiguous language provides a high validity of results that cannot (yet) be matched by a computer. Developing appropriate algorithms and text recognition software remains a challenge: the meaning of words changes in different contexts, and several expressions may mean the same thing. Especially in qualitative analyses, this currently makes human coders indispensable. Yet, the emergence of big data, Artificial Intelligence and Machine Learning might make it possible in the foreseeable future to use automated coding more regularly and with a high validity.

- Another relevant direction is the shift from text to visual and audio data as a primary form of data (5). With ever-increasing amounts of pictures, video and audio files on the internet, future studies may refer to these kinds of sources more often.

Key publications

Kuckartz, U. (2016). Qualitative Inhaltsanalyse: Methoden, Praxis, Computerunterstützung (3., überarbeitete Auflage). Grundlagentexte Methoden. Weinheim, Basel: Beltz Juventa.

- A very exhaustive (German language) work in which the author explains different types of qualitative content analysis by also giving tangible examples.

Krippendorff, K. 2004. Content Analysis - An Introduction to Its Methodology. Second Edition. SAGE Publications.

- A much-quoted, extensive description of the method's history, conceptual foundations, uses and application.

Berelson, B. 1952. Content analysis in communication research. Glencoe, Ill.: Free Press.

- An early review of the then-current forms of (quantitative) content analysis.

Schreier, M. 2014. Varianten qualitativer Inhaltsanalyse: Ein Wegweiser im Dickicht der Begrifflichkeiten. Forum Qualitative Sozialforschung 15(1). Artikel 18.

- A (German language) differentiation between the variations of the qualitative content analysis.

Erlingsson, C. Brysiewicz, P. 2017. A hands-on guide to doing content analysis. African Journal of Emergency Medicine 7(3). 93-99.

- A very helpful guide to content analysis, using the examples shown above.

References

(1) Krippendorff, K. 1989. Content Analysis. In: Barnouw et al. (Eds.). International encyclopedia of communication. Vol. 1. 403-407. New York, NY: Oxford University Press.

(2) White, M.D. Marsh, E.E. 2006. Content Analysis: A Flexible Methodology. Library Trends 55(1). 22-45.

(3) Stemler, S. 2000. An overview of content analysis. Practical Assessment, Research, and Evaluation 7. Article 17.

(4) Mayring, P. 2000. Qualitative Content Analysis. Forum Qualitative Social Research 1(2). Article 20.

(5) Stemler, S. 2015. Content Analysis. Emerging Trends in the Social and Behavioral Sciences: An Interdisciplinary, Searchable, and Linkable Resource. 1-14.

(6) Erlingsson, C. Brysiewicz, P. 2017. A hands-on guide to doing content analysis. African Journal of Emergency Medicine 7(3). 93-99.

The author of this entry is Christopher Franz.