Ethics and Statistics

Following Sidgwick's "The Methods of Ethics", I define ethics as the question of how the world ought to be. Derek Parfit argued that if philosophy were a mountain, Western philosophy climbs it from three sides. One is utilitarianism, which is chiefly preoccupied with the question of how we can evaluate the outcomes of actions: the most ethical choice would be the action that creates the most good for the greatest number of people. The second side of Parfit's mountain is reason, which can be understood as the human capability to reflect on what one ought to do. Kant said much to this end, and Parfit associated it with the individual, or better, the reasonable individual. The third side of the mountain is the social contract, which states that moral obligations are agreed upon within societies, a thought that was strongly developed by Locke. Parfit extended this even further, referring in his triple theory to society as a whole. Western thought has attempted to climb the same mountain from three sides, and we owe it to Derek Parfit that there is now an attempt to understand the mountain as such.

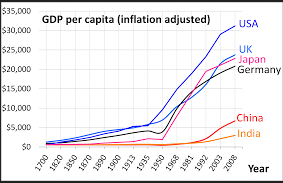

Why does this matter for statistics? Personally, I think it matters deeply. Let me try to convince you. Looking at the epistemology of statistics, we learned that much of modern civilisation is built on statistical approaches, such as the design and analysis of experiments or the correlation of two continuous variables. Statistics propelled much of the exponential growth in our society, and are responsible for many of the problems we currently face through our unsustainable behaviour. Many would now argue that if statistics were a weapon, it would not be the weapon itself that kills, but the human hand that uses it. This is true insofar as statistics would not exist without us, just as weapons were forged by us. However, I would argue that statistics are still deeply normative, as they are tied to our culture, society, social strata, economies and much more. Much in our society depends on statistics, and many decisions are taken because of statistics. As we have learned, some of these statistics might be problematic or even wrong, which can consequently render the decisions wrong as well. Stranger still, our statistics can be correct, and yet, as we have learned, they can still contribute to our downfall. We can calculate something correctly, but the result can be morally wrong. Ideally, our statistics would always be correct, and the moral implications of the actions they inform would also be right. This matters most where actions informed by statistics turn out to be morally wrong.

However, statistics are more often than not seen as something that is not normative. This is rooted in the deep traditions and norms of the disciplines in which statistics are an established methodological approach. Obvious moral dilemmas and ambiguities were addressed where they were relevant to the design of a study. Safeguards against such blunders were hence established early on and are now part of the canon of many disciplines, with medicine probably leading the way. Such problems often concern sample size, randomisation, and the question of when a successful treatment should be given to all participants. What is largely lacking, however, is an understanding of how the use of statistics can have wider normative consequences, both during the application of statistics and through the consequences of results that were propelled by statistics. In her book "Making Comparisons Count", Ruth Chang explores one example of such relations, but the gap between ethics and statistics is so large that we might describe it altogether as uncharted territory.

Looking at ethics from statistics

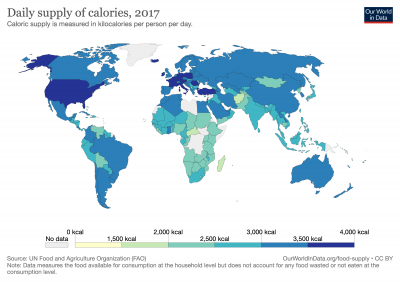

When looking at this problem from the perspective of statistics, there are several items that can help us understand how statistics interact with ethics. First and foremost, the concept of validity can be considered highly normative. Two extreme lines of thinking come to mind: one technical, oriented towards mathematical formulas, and the other informed by the actual content. Both are normative, but there is of course a large difference between a model being correct in terms of statistical validation and a model validly approximating reality. Empirical research only ever looks at pieces of the puzzle of reality, and will hence always have to compromise, as we choose versions of reality. If there is such a thing as general truth, you may find it in philosophy. However, knowledge in philosophy will never be empirical, at least not if it equally fulfils the criterion of being generally applicable. Statistics can thus generate empirical knowledge, while philosophy originally served to generate criteria for validity. The three concepts philosophy is preoccupied with, namely utilitarianism, reason and the social contract, dissolved into scientific disciplines such as economics, psychology, social science or political science. These disciplines hence tried to bridge the void between philosophy and empirical research. Statistics did, however, indirectly contribute to altering our values, as was mentioned before. Take utilitarianism. How we calculate utility, and hence how we manage our systems through such calculations, depends deeply on our values. While world hunger has decreased and more people receive education or medical help than in the distant past, other measures suggest that inequality, for instance income inequality, is increasing.
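This small worked sketch shows how a utility calculation can depend on values. The incomes and the two policies are hypothetical, and the logarithm is one common concave utility function chosen here as an assumption. The two ways of adding up utility give opposite rankings of the same two policies. Summing incomes treats utility as linear in income. Summing log incomes gives more weight to gains for poorer people.

```python
import math

# Hypothetical incomes for four people (arbitrary units), starting from a
# hypothetical baseline of [10, 20, 40, 80].
policy_a = [10, 20, 40, 140]  # +60 in total, all of it to the richest person
policy_b = [30, 30, 40, 80]   # +30 in total, split between the two poorest people

def total_income(incomes):
    """Utilitarian sum with utility assumed to be linear in income."""
    return sum(incomes)

def total_log_utility(incomes):
    """Sum of log incomes: a concave utility that weights gains to the poor more."""
    return sum(math.log(x) for x in incomes)

for name, incomes in [("A", policy_a), ("B", policy_b)]:
    print(name, total_income(incomes), round(total_log_utility(incomes), 3))
# Linear utility ranks A first (210 > 180); log utility ranks B first.
```

Neither ranking is the "correct" one. Choosing the utility function is itself the value judgement.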
While ethics can compare two values in terms of their meaning for people, statistics can provide the methodological design, mathematical calculations and experience in interpretation. This links to plausibility.

Statistics builds on plausibility, as models can be both probable and reasonable. While statistics focus predominantly on probability, this is already a normative concept, as the conventional significance level (typically 0.05) is arbitrary, albeit rather robust. Furthermore, p-values are prone to statistical fishing, so probability in statistics is widely established but not immune to flaws in any given case. Statistical calculations can also differ in terms of model choice. Many models assume a normal distribution, a precondition that is often violated and that is, again, deeply normative. Non-normal distributions may matter deeply when it comes to income inequality, and statistics may not be able to evade the responsibility implied in such an analysis. Another example is the question of non-linearity. Preferring non-linear to linear relationships has become more important especially recently, driven by the wish for higher predictive power. Bending models, mechanically speaking, into a shape that increases the model fit, in other words how much the model can predict, comes at the price of sacrificing any possibility of causal understanding, since the zig-zag relations of non-linear models are hard to match with our theories. While there are of course some examples of rapid shifts in systems, these have next to nothing to do with the non-linearity assumed in many modern statistical analysis schemes. Many disciplines have gravitated towards non-linear statistics in recent decades, sacrificing explainable relationships for an ever-increasing model fit. Hence the clear demarcation between deductive and inductive research has become blurred, adding an ontological burden on research. In many papers, the question of plausibility and validity has been deeply affected by this issue, and I would argue that this casts a shadow on the reputation of science.
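Statistical fishing can be illustrated with a small simulation. This is a sketch under stated assumptions.

- Each hypothetical "study" compares two groups of 30 on 20 outcomes.
- Both groups are drawn from the same normal distribution, so no real effect exists.
- Each comparison uses a two-sided z-test with a known standard deviation. This simplifies the usual t-test.

At a significance level of 0.05, about 5% of individual tests come out "significant". However, about 1 - 0.95^20, roughly 64%, of studies report at least one such result.

```python
import math
import random

def two_sample_z_pvalue(a, b, sigma=1.0):
    """Two-sided p-value for a difference in means, assuming a known common SD."""
    se = sigma * math.sqrt(1 / len(a) + 1 / len(b))
    z = (sum(a) / len(a) - sum(b) / len(b)) / se
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)) for the standard normal CDF Phi
    return math.erfc(abs(z) / math.sqrt(2))

def fishing_expedition(rng, n_outcomes=20, n_per_group=30, alpha=0.05):
    """Test many outcomes with no real effect; count the 'significant' ones."""
    hits = 0
    for _ in range(n_outcomes):
        # Both groups come from the same distribution: any 'effect' is pure noise.
        a = [rng.gauss(0, 1) for _ in range(n_per_group)]
        b = [rng.gauss(0, 1) for _ in range(n_per_group)]
        if two_sample_z_pvalue(a, b) < alpha:
            hits += 1
    return hits

rng = random.Random(1)  # fixed seed so the sketch is reproducible
studies = [fishing_expedition(rng) for _ in range(1000)]

false_positive_rate = sum(studies) / (len(studies) * 20)
share_with_a_hit = sum(h > 0 for h in studies) / len(studies)
print(f"false positives per test: {false_positive_rate:.3f}")
print(f"studies with at least one 'significant' outcome: {share_with_a_hit:.2f}")
print(f"analytic expectation, 1 - 0.95**20: {1 - 0.95 ** 20:.2f}")
```

The per-test error rate stays at the chosen level. The chance of finding *something* grows with every additional outcome that is tested.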
Philosophy alone cannot solve this problem, since it looks at the general side of reality. Only by teaming up with empirical research can we arrive at measures of validity that are not on a high horse but can also be connected to the real world.

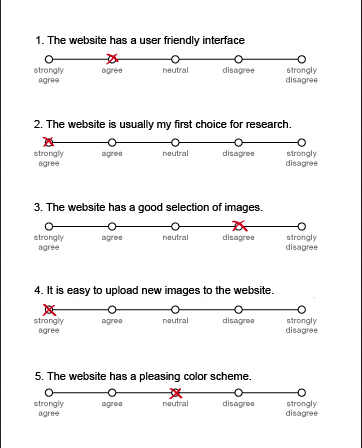

One last point I want to highlight is constructs. Constructs are deeply normative and often tied to existing norms within a discipline. The Likert scale in psychology is one such example. The positive aspect of such a scale is that it becomes part of the established body of knowledge, and much experience is available on its benefits and drawbacks. Scales are often even part of the tacit signature of cultural and social norms, and these indirectly influence the science that is conducted in this setting. Philosophy and especially cultural studies have increasingly engaged with such questions in recent decades, yet often focusing on isolated constructs or on underlying fundamental aspects such as racism, colonialism and privilege. It will be challenging to link these concepts of clear importance to the flaws of empirical research, yet I recognise a greater awareness among empirical researchers of the need to avoid errors introduced through such constructs. Personally, I believe that constructs will always be associated with personal identity and thus pose a problem for general acceptance. Time will tell if this gap can be bridged.

Looking at statistics from ethics

A very long misunderstanding - objective knowledge and statistics

Science often claims to create objective knowledge. While Karl Popper and others established the recognition that this claim is false, not only do many people perceive science as de facto objective, but, more importantly, many scientists claim that their knowledge production is objective. Much of science likes to spread an air of superiority, which is one of many explanations for the science-society gap. When scientists should talk about possible facts, they often just talk about facts. When they talk about a true reality, they should be talking about a version of reality. Of course, this is not only their fault, since society increasingly demands easy explanations. A philosopher would probably be deeply disturbed to understand how statistics are applied, and even more disturbed to see how statistics are interpreted and marketed. Precision and clarity in communicating results, as well as information on bias and caveats, are more often than not missing.

Unreasonable statisticians vs. unreasonable statistics

There are many facets that could be highlighted under such a provocative heading. I choose an obvious caveat that is increasingly moving into the focus of scientific discourse: publish or perish. This slogan highlights the culture currently dominating most parts of science. If you do not produce a large number of peer-reviewed publications, your career will end. This has created considerable demand on statistics as well, and the urge to arrive at significant results has led to frequent violations of Occam's razor. Another problem is that with the ever-increasing demand for "innovation", models evolve, and it is hard to catch up. Thus, instead of robust and parsimonious models, there are more and more unsuitable and overcomplicated models. The average level of statistics has certainly increased, yet I also recognise a certain increase in errors, or at least slippery slopes. Statistics are part of the publish-or-perish culture, and with this pressure still rising, unreasonable actions in the application of statistics may increase further.
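The tension between parsimony and model fit can be sketched as follows, assuming data generated by a simple linear relation plus noise. The sketch compares two models.

- A two-parameter straight line.
- 1-nearest-neighbour regression. It is used here as a deliberately extreme stand-in for an overcomplicated, flexible model.

The flexible model reproduces every training point exactly. Yet it predicts new data worse and offers no interpretable relation. The data-generating process, sample sizes and seed are all illustrative assumptions.

```python
import random

def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x (two parameters)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    a = my - b * mx
    return lambda x: a + b * x

def fit_nearest_neighbour(xs, ys):
    """1-nearest-neighbour regression: returns the y of the closest training x."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]

def mse(model, xs, ys):
    """Mean squared prediction error."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def simulate(n, rng):
    """Hypothetical data: the true relation is linear, with noise SD = 1."""
    xs = [rng.uniform(0, 10) for _ in range(n)]
    ys = [1 + 2 * x + rng.gauss(0, 1) for x in xs]
    return xs, ys

rng = random.Random(42)
train_x, train_y = simulate(50, rng)
test_x, test_y = simulate(1000, rng)

line = fit_line(train_x, train_y)
nn = fit_nearest_neighbour(train_x, train_y)
for name, model in [("linear", line), ("1-NN", nn)]:
    print(name, "train MSE:", round(mse(model, train_x, train_y), 3),
          "test MSE:", round(mse(model, test_x, test_y), 3))
```

On such data the 1-NN model shows zero training error. On fresh data its error is roughly twice the noise variance. The straight line stays close to the noise variance.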

The imperfection of measurements

One could say that utilitarianism created the single largest job boost for the profession of statisticians. Predicting economic development, calculating engineering problems, finding the right lever to tilt elections: statistics dominate almost all aspects of modern society. Calculating the maximisation of utility is one of the large drivers of change in our globalised economy. But it does not stop there. Followers on social media and reply times to messages are two of many factors by which too many people measure success in life, often draining their direct human interactions in the process. Philosophy is deeply engaged in discussing these conditions and dynamics, yet statistics needs to embed these topics more firmly in its curriculum. If we become mercenaries for people with questionable goals, we follow a long string of people who maximised utility for better or worse. History teaches us of the horrors that were committed in the process of utility maximisation, and we need to end this string of willing aids to illegitimate goals.

Of inabilities to compromise

Another problem that emerges out of statistics, as seen from the perspective of ethics, is the inability to accept different versions of reality. Statistics often arrives at a specific construction of reality, which is then confirmed or rejected. Such a version of reality is then continuously refined until it ultimately becomes the accepted reality. Such a reality can not only clash with many worldviews from ethics, but may even be unable to access them altogether. Statistics thus builds a refined version of reality, while ethics can claim and alternate between different realities. This freedom of ethics, for instance through thought experiments, is not possible in statistics. Here, the empirical workload and the time it takes to conduct statistical analyses create a penalty that ultimately makes statistics less able to compromise. Future considerations need to clearly indicate what the limitations of statistics are, and how this problem of linking to other lines of thinking can be solved, even if such other worldviews violate statistical results or assumptions.

A critical perspective of statistics in society

A famous example from Derek Parfit is that of 50 people dying of thirst in the desert. Imagine you could give them one glass of water, leaving a few drops for each person. This would not prevent them from dying, but it would still make a difference. You tried to help, and some would even argue that if these people die, they still die with the knowledge that somebody tried to help them. Such a thing can matter deeply, and just as classical ethics fails to acknowledge this importance, so do statistical measures. Another example is the tragic question of triage. Based on previous data and experience, it is sometimes necessary in a catastrophe to prioritise the patients with the highest chance of survival. This has severe ethical ramifications and is known to put a heavy emotional burden on medical personnel. While this follows a utilitarian approach, there are wider consequences that fall within the realm of medical ethics.

A third example is the repugnant conclusion. Are many quite happy people comparable to a few very happy people? This question is widely considered to be a paradox, which is also known as the mere addition paradox. What this teaches us is that it is difficult for us to predict whether a life is happy and worth living, even if we ourselves would not consider it to be so. People can lead fulfilled lives without us understanding this. Here, statistics fail once more, partly because of our normative perspective in interpreting the different scenarios.
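The arithmetic behind the repugnant conclusion is simple. The paradox comes from which summary statistic we treat as morally relevant. Here is a minimal sketch with two hypothetical populations and arbitrary welfare scores. Under a total view, the large population of lives barely worth living comes out "better". Under an average view, the small, very happy population wins. The numbers are illustrative assumptions, not data.

```python
# Hypothetical populations: one welfare score per person, on an arbitrary scale.
population_a = [100] * 10   # a few very happy people
population_z = [2] * 1000   # many people whose lives are barely worth living

def total_welfare(population):
    """Total view: add up everyone's welfare."""
    return sum(population)

def average_welfare(population):
    """Average view: welfare per person."""
    return sum(population) / len(population)

for name, pop in [("A", population_a), ("Z", population_z)]:
    print(name, total_welfare(pop), average_welfare(pop))
# A 1000 100.0
# Z 2000 2.0
```

Both calculations are correct. Which one should guide a decision is a normative question that the calculation itself cannot answer.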

Another example, kindly borrowed from Daniel Dennett, is nuclear disasters. In Chernobyl, many workers had to work under conditions that caused them great harm as well as a great emotional and medical burden. To this day, estimates of the death toll vary widely. However, without their efforts it is unclear what would have happened, as the molten nuclear lava would probably have reached the drainage water, leading to an explosion that might have rendered many parts of Eastern Europe uninhabitable. All these developments were hard to estimate, and even more difficult to balance against the efforts of the workers. One has to state that these limitations of utilitarianism were pointed out early on in Moore's Principia Ethica, but this has been widely overlooked by many, who still guide their actions through imperfect predictions.

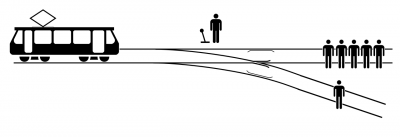

One last example of the relation between ethics and statistics is the problem of inaction. What if you could save five people, knowing that somebody else would die through the very action that saves the other five? Many people prefer not to act, as inaction is considered by many to be ethically more acceptable than actively being responsible for the death of another person. While this is partly related to action, it is also related to knowledge and certainty. Much knowledge exists that should lead people to act, yet they prefer not to act at all. Obviously, this is a great challenge, and while psychology investigates this knowledge-action gap, I propose that it will be here to stay for an unfortunately long time. If people were able to connect emotionally to information derived from numbers, as in the example above, and also to consider the ethical consequences of such numbers, much inaction could be avoided. This consequentialism is widely missing to date, although many of our constructed systems are based on quite similar rules. Hence there is not only a gap between knowledge and action, but also a gap between constructed systems and individuals. There is much to explore here, and as Martha Nussbaum rightly concluded in The Monarchy of Fear, even under dire political circumstances, "hope really is both a choice and a practical habit."

The way forward

The Feynman Lectures on Physics are the most famous textbook volumes on physics, yet Richard Feynman and his coauthors compiled these books in the 1960s. To date, many consider these volumes to be the most concise and understandable introduction to physics. Besides Feynman's brilliance in physics, this also means that by the 1960s the majority of the knowledge necessary for an introduction to physics was already available. Something similar can be claimed for statistics, although it should be noted that physics is a scientific discipline, while statistics is a scientific method. Some disciplines use different methods, and many disciplines use statistics as a method. However, the final word on statistics has not been spoken, as the differences between frequentist and Bayesian statistics are not yet deeply explored. Of course, something similar can be said about physics, but even with a "grand unified theory of physics" we would probably have undergraduate students learn the same introductory material.

Something surprisingly similar can be said about philosophy. While many of the basic principles are known, they are hardly connected. Philosophy hence works ever deeper on specifics, while most of its contributors move away from a unified line of thinking. This is what makes Derek Parfit's work stand out, since he tried to connect the different dots, and it will be the task of the coming decades to build on his work and improve it where necessary.

While philosophy and statistics both struggle to align different lines of thinking, it is even more concerning how little the two are aligned with each other. Statistics make use of philosophy on rare occasions, for instance when it comes to the ethical dilemmas of negatively affecting people who are part of a study. While these links are vital for the development of specific aspects of statistics, the link between moral philosophy and statistics has hardly been explored so far. In order to enable statistics to contribute to the question of how we ought to act, a systematic interaction is needed. I propose that exploring possible links is a first step, in which we start to investigate how such connections work. The next step would be to propose a systematic conceptualisation of these different connections. This conceptualisation would then need to be explored and amended, and this will be very hard work, since both statistics and moral philosophy are scattered due to the lack of a unified theory. What makes a systematic exploration of such a unifying link even more difficult is the question of who would actually do it. Within most parts of the current educational system, students learn either empirical research and statistics, or the deep conceptual understanding of philosophy. Only when we enable more researchers to approach the problem from both sides, empirical and conceptual, will we become increasingly able to bridge the gap between these two worlds.

External links

Articles

The Methods of Ethics: The whole book by the famous Henry Sidgwick

Derek Parfit: A short biography & a summary of his most important thoughts

Hunger and Food Provision: some data

Data dredging: An introduction to statistical fishing

The Likert Scale: A short definition

Ethics & Statistics: A guideline

Science Society Gap: An interesting paper on the main issues between science and society

Utilitarianism & Economics: A few words from the economic point of view

Videos

Utilitarianism: An introduction with many examples

The Nature of Truth: some thoughts from philosophy

Model Fit: An explanation using many graphs and example calculations

The author of this entry is Henrik von Wehrden.