Difference between revisions of "Introduction to Pandas"

| (33 intermediate revisions by one other user not shown) | |||

| Line 1: | Line 1: | ||

THIS ARTICLE IS STILL IN EDITING MODE | THIS ARTICLE IS STILL IN EDITING MODE | ||

| − | Pandas is an important Python library for data analysis that was introduced in 2008 by McKinney. The name Pandas comes from "'''Pan'''el '''Da'''ta", which is an econometrics term for multidimensional structured | + | Pandas is an important Python library for data analysis that was introduced in 2008 by McKinney. The name Pandas comes from "'''Pan'''el '''Da'''ta", which is an econometrics term for multidimensional structured datasets. The library was originally designed to handle this type of data, especially for financial data analysis, which often requires complex manipulation and cleaning. |

| − | Nowadays, | + | Nowadays, Pandas is used in a variety of fields, because of its many useful methods for analyzing, cleaning, [https://sustainabilitymethods.org/index.php/Data_Inspection_in_Python inspecting] and manipulating data. |

==General Idea of Pandas== | ==General Idea of Pandas== | ||

| − | Pandas is developed and optimized for the work with tabular data stored in spreadsheets or databases. For this task two additional data structures are introduced, the Series (one-dimensional array of indexed data) and the DataFrame (two-dimensional array of indexed data). | + | Pandas is developed and optimized for the work with tabular data, stored in spreadsheets or databases. For this task two additional data structures are introduced, the Series (one-dimensional array of indexed data) and the DataFrame (two-dimensional array of indexed data). |

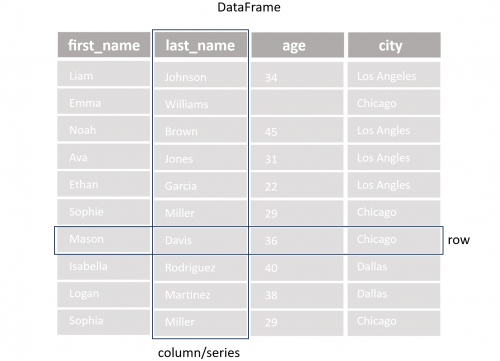

| − | The DataFrame can be seen as a collection of Series, which share the same index. The simple structure of People dataset`s DataFrame is visualized in figure 1. This data will be used throughout the article to explain the basic | + | The DataFrame can be seen as a collection of Series, which share the same index. The simple structure of People dataset`s DataFrame is visualized in figure 1. This data will be used throughout the article to explain the basic methods of Pandas. |

[[File:DataFrame.png|500px|center|thumb|Figure 1. DataFrame structure]] | [[File:DataFrame.png|500px|center|thumb|Figure 1. DataFrame structure]] | ||

| Line 23: | Line 23: | ||

==Reading and Writing Data== | ==Reading and Writing Data== | ||

| − | Before any data can be analyzed, it must be read into the current Python environment. Pandas can read a variety of file formats, such as CSV, Excel, JSON, HTML, SQL, etc. The following code gives an example for reading a CSV file. The file is read into a pandas DataFrame, which makes the data accessible for | + | Before any data can be analyzed, it must be read into the current Python environment. Pandas can read a variety of file formats, such as CSV, Excel, JSON, HTML, SQL, etc. The following code gives an example for reading a CSV file. The file is read into a pandas DataFrame, which makes the data accessible for Python. |

<syntaxhighlight lang="Python" line> | <syntaxhighlight lang="Python" line> | ||

| Line 50: | Line 50: | ||

</syntaxhighlight> | </syntaxhighlight> | ||

| − | Furthermore, DataFrames can also be created manually with the help of dictionaries | + | Furthermore, DataFrames can also be created manually with the help of dictionaries. |

<syntaxhighlight lang="Python" line> | <syntaxhighlight lang="Python" line> | ||

| Line 61: | Line 61: | ||

This manual creation of DataFrames can be useful to extend a DataFrame from a file by additional values at once. | This manual creation of DataFrames can be useful to extend a DataFrame from a file by additional values at once. | ||

| − | '''Note:''' Since | + | '''Note:''' Since <syntaxhighlight lang="Python" inline>append()</syntaxhighlight> method was removed from Pandas, the <syntaxhighlight lang="Python" inline>concat()</syntaxhighlight> method should be used. |

<syntaxhighlight lang="Python" line> | <syntaxhighlight lang="Python" line> | ||

| Line 68: | Line 68: | ||

</syntaxhighlight> | </syntaxhighlight> | ||

| − | When all changes are done, Pandas also provides | + | When all changes are done, Pandas also provides methods to write the values of the Dataframe back into the old file or into a new one. |

<syntaxhighlight lang="Python" line> | <syntaxhighlight lang="Python" line> | ||

| − | # write to csv file | + | # write to a new csv file |

| − | df_people.to_csv(' | + | df_people.to_csv('people_new.csv', index=False) |

</syntaxhighlight> | </syntaxhighlight> | ||

==Getting General Information about DataFrames== | ==Getting General Information about DataFrames== | ||

| − | The following lines of code give an overview of common | + | The following lines of code give an overview of common methods that offer general information about a Dataframe and it is a good starting point for data [https://sustainabilitymethods.org/index.php/Data_Inspection_in_Python inspection]. |

<syntaxhighlight lang="Python" line> | <syntaxhighlight lang="Python" line> | ||

| Line 137: | Line 137: | ||

# selection by condition | # selection by condition | ||

| − | # selection of rows where the age is greater than 50 | + | # selection of rows, where the age is greater than 50 |

df_people[df_people['age'] > 50] | df_people[df_people['age'] > 50] | ||

</syntaxhighlight> | </syntaxhighlight> | ||

| − | The accessibility of a DataFrame can be improved by adding an index to the DataFrame if it does not | + | The accessibility of a DataFrame can be improved by adding an index to the DataFrame, if it does not exist yet. It can be a simple range index from 0 to n or an own customized index for more specific needs. |

<syntaxhighlight lang="Python" line> | <syntaxhighlight lang="Python" line> | ||

| Line 159: | Line 159: | ||

===Changing Data=== | ===Changing Data=== | ||

| − | + | As we know how to access data, we can also modify the values. It implies similar way, as with other [https://sustainabilitymethods.org/index.php/Types,_Expressions,_and_Variables_in_Python datatypes] in Python, applying `=` operator. | |

<syntaxhighlight lang="Python" line> | <syntaxhighlight lang="Python" line> | ||

| Line 166: | Line 166: | ||

</syntaxhighlight> | </syntaxhighlight> | ||

| − | + | An important method to alter all values in a column at once is <syntaxhighlight lang="Python" inline>apply()</syntaxhighlight>. It is commonly used to ''apply'' changes along a whole axis of a DataFrame. This method is often implemented together with a ''lambda'' function, which is a short, anonymous function that is only used once and is not saved in the memory. It is introduced with the <syntaxhighlight lang="Python" inline>lambda</syntaxhighlight> keyword. This build-in function should be always preferred over a [https://sustainabilitymethods.org/index.php/Loops_in_Python loop] in Python, because it is much faster and more efficient. | |

<syntaxhighlight lang="Python" line> | <syntaxhighlight lang="Python" line> | ||

| Line 173: | Line 173: | ||

</syntaxhighlight> | </syntaxhighlight> | ||

| − | In many situations, it is also useful to replace [https://sustainabilitymethods.org/index.php/Handling_Missing_Values_in_Python missing | + | In many situations, it is also useful to replace [https://sustainabilitymethods.org/index.php/Handling_Missing_Values_in_Python missing] or undefined values in the DataFrame. These values are often represented by NaN (not a number). They can be replaced with the <syntaxhighlight lang="Python" inline>fillna</syntaxhighlight> method by the column`s mode, mean or median or any other appropriate number. |

<syntaxhighlight lang="Python" line> | <syntaxhighlight lang="Python" line> | ||

| Line 187: | Line 187: | ||

===Rearranging and Grouping Data=== | ===Rearranging and Grouping Data=== | ||

| − | + | Often the data is not provided in a structured way, which makes it necessary to rearrange in order to get a better overview. The following code shows cases of sorting and grouping the data. | |

<syntaxhighlight lang="Python" line> | <syntaxhighlight lang="Python" line> | ||

| Line 203: | Line 203: | ||

</syntaxhighlight> | </syntaxhighlight> | ||

| − | The <syntaxhighlight lang="Python" inline>groupby()</syntaxhighlight> | + | The <syntaxhighlight lang="Python" inline>groupby()</syntaxhighlight> method splits the data into groups based on defined criteria. This is helpful for group-dependent analysis, such as in the next example about the calculation of the average age in each city. |

<syntaxhighlight lang="Python" line> | <syntaxhighlight lang="Python" line> | ||

| Line 212: | Line 212: | ||

===Deleting Data=== | ===Deleting Data=== | ||

| − | The deletion of data plays an important role in data analysis for getting rid of unnecessary or inaccurate data. The following examples in the code give an overview of common | + | The deletion of data plays an important role in data analysis for getting rid of unnecessary or inaccurate data. The following examples in the code give an overview of a common removing method. |

<syntaxhighlight lang="Python" line> | <syntaxhighlight lang="Python" line> | ||

| Line 231: | Line 231: | ||

</syntaxhighlight> | </syntaxhighlight> | ||

| − | There is a debate about the usage of the <syntaxhighlight lang="Python" inline>inplace=True</syntaxhighlight> parameter, which can be set in many | + | There is a debate about the usage of the <syntaxhighlight lang="Python" inline>inplace=True</syntaxhighlight> parameter, which can be set in many methods to change the data directly in the DataFrame. On the one hand, this way is more convenient, because there is no necessity to create an additional copy. On the other hand, it has some disadvantages, for example, not all methods support this parameter, such as <syntaxhighlight lang="Python" inline>concat()</syntaxhighlight>. Furthermore, the parameter prevents building a chain of methods, which is shown in the following code. |

<syntaxhighlight lang="Python" line> | <syntaxhighlight lang="Python" line> | ||

| Line 241: | Line 241: | ||

</syntaxhighlight> | </syntaxhighlight> | ||

| − | As already mentioned, Pandas includes | + | As already mentioned, Pandas includes methods for handling rows and columns with missing or undefined values. |

| − | Instead of replacing the values with <syntaxhighlight lang="Python" inline>fillna</syntaxhighlight>, it is also possible to drop the rows or columns with the <syntaxhighlight lang="Python" inline>dropna</syntaxhighlight>. The final decision about filling or dropping these rows depends on the dataset and personal preferences. | + | Instead of replacing the values with <syntaxhighlight lang="Python" inline>fillna()</syntaxhighlight>, it is also possible to drop the rows or columns with the <syntaxhighlight lang="Python" inline>dropna()</syntaxhighlight>. The final decision about filling or dropping these rows depends on the dataset and personal preferences. |

| − | Like in many other | + | |

| − | Further | + | Like in many other methods the <syntaxhighlight lang="Python" inline>dropna()</syntaxhighlight>supports the <syntaxhighlight lang="Python" inline>axis</syntaxhighlight> parameter, which defines, if a method is applied to the rows or columns. Further methods, which support the <syntaxhighlight lang="Python" inline>axis</syntaxhighlight> parameter are <syntaxhighlight lang="Python" inline>sum()</syntaxhighlight>, <syntaxhighlight lang="Python" inline>mean()</syntaxhighlight>, <syntaxhighlight lang="Python" inline>min()</syntaxhighlight>, <syntaxhighlight lang="Python" inline>max()</syntaxhighlight>, <syntaxhighlight lang="Python" inline>count()</syntaxhighlight>, <syntaxhighlight lang="Python" inline>median()</syntaxhighlight>, and <syntaxhighlight lang="Python" inline>apply()</syntaxhighlight> to name only a few. |

<syntaxhighlight lang="Python" line> | <syntaxhighlight lang="Python" line> | ||

| Line 259: | Line 259: | ||

</syntaxhighlight> | </syntaxhighlight> | ||

| − | Besides, NaN rows or duplicated rows occur often in datasets. These rows can be removed with the <syntaxhighlight lang="Python" inline>drop_duplicates</syntaxhighlight> | + | Besides, NaN rows or duplicated rows occur often in datasets. These rows can be removed with the <syntaxhighlight lang="Python" inline>drop_duplicates()</syntaxhighlight> method. |

<syntaxhighlight lang="Python" line> | <syntaxhighlight lang="Python" line> | ||

| Line 285: | Line 285: | ||

The author of this entry is Felix Pappe. | The author of this entry is Felix Pappe. | ||

| − | |||

| − | |||

| − | |||

Latest revision as of 13:34, 3 September 2024

THIS ARTICLE IS STILL IN EDITING MODE

Pandas is an important Python library for data analysis that was introduced in 2008 by McKinney. The name Pandas comes from "Panel Data", which is an econometrics term for multidimensional structured datasets. The library was originally designed to handle this type of data, especially for financial data analysis, which often requires complex manipulation and cleaning. Nowadays, Pandas is used in a variety of fields, because of its many useful methods for analyzing, cleaning, inspecting and manipulating data.

Contents

General Idea of Pandas

Pandas is developed and optimized for the work with tabular data, stored in spreadsheets or databases. For this task two additional data structures are introduced, the Series (one-dimensional array of indexed data) and the DataFrame (two-dimensional array of indexed data). The DataFrame can be seen as a collection of Series, which share the same index. The simple structure of People dataset`s DataFrame is visualized in figure 1. This data will be used throughout the article to explain the basic methods of Pandas.

Install Pandas

In order to use Pandas, it is necessary to ensure that the library is installed in the Python environment. Otherwise, pip install pandas command in the terminal has to be executed. Afterwards, the library can be imported to the project with the following line of code. Furthermore, it is recommended to check the library`s current version.

import pandas as pd # show pandas version print(pd.__version__)

Reading and Writing Data

Before any data can be analyzed, it must be read into the current Python environment. Pandas can read a variety of file formats, such as CSV, Excel, JSON, HTML, SQL, etc. The following code gives an example for reading a CSV file. The file is read into a pandas DataFrame, which makes the data accessible for Python.

# read csv file

df_csv_people = pd.read_csv('people.csv')

# show the type of df

type(df_csv_people)

If the code is implemented in a final algorithm, it is important to pay attention to the error/exception handling, because there can happen various mistakes during the reading process.

file_path = 'people.csv'

try:

df = pd.read_csv(file_path)

except FileNotFoundError:

print(f"The file {file_path} was not found.")

except pd.errors.EmptyDataError:

print(f"The file {file_path} is empty.")

except pd.errors.ParserError:

print(f"Error parsing the file {file_path}.")

except Exception as e:

print(f"An unexpected error occurred: {e}")

Furthermore, DataFrames can also be created manually with the help of dictionaries.

df_dic_people = {'first_name': ['Jason', 'Molly', 'Tina', 'Jake', 'Amy'],

'last_name': ['Miller', 'Jacobson', 'Ali', 'Milner', 'Cooze'],

'age': [42, 52, 36, 24, 73],

'city': ['Dallas', 'Dallas', 'Los Angles', 'Chicago', 'Chicago']}

This manual creation of DataFrames can be useful to extend a DataFrame from a file by additional values at once.

Note: Since append() method was removed from Pandas, the concat() method should be used.

# appand one dataframe to another df_people = pd.concat([df_csv_people, pd.DataFrame(df_dic_people)],ignore_index=True)

When all changes are done, Pandas also provides methods to write the values of the Dataframe back into the old file or into a new one.

# write to a new csv file

df_people.to_csv('people_new.csv', index=False)

Getting General Information about DataFrames

The following lines of code give an overview of common methods that offer general information about a Dataframe and it is a good starting point for data inspection.

# prints out the first 5 rows of the dataframe df_people.head() # prints out the last 5 rows of the dataframe df_people.tail() # summary of the dataframe (columns, data types, memory usage, Non-Null Count etc.) df_people.info() # shape of the dataframe (rows, columns) df_people.shape # information about the datatype in each column of the dataframe df_people.dtypes # statistics summary of the dataframe (count, mean, std, min, 25%, 50%, 75%, max) df_people.describe() # get the maximum value of each column df_people.max() # get the minimum value of each column df_people.min() # returns a boolean series about duplicate rows. True - the row is a duplicate, False - the row is not a duplicate df_people.duplicated()

Manipulation with DataFrames

Accessing Data

In order to manipulate the data inside a DataFrame it is necessary to access the data. This can be done in the following ways.

# selecting a single column df_people['first_name'] # selecting multiple columns df_people[['first_name', 'last_name']] # selecting rows by index # selection of the first row df_people.iloc[0] # selection of specific values by row and column df_people.iloc[0, 0] # selecting rows by index range # selection of rows 0, 1, 2 (row 3 is not selected) df_people.iloc[0:3] # boolean selection # selection of the row with the first name 'Tina' df_people[df_people['first_name'] == 'Tina'] # selection by condition # selection of rows, where the age is greater than 50 df_people[df_people['age'] > 50]

The accessibility of a DataFrame can be improved by adding an index to the DataFrame, if it does not exist yet. It can be a simple range index from 0 to n or an own customized index for more specific needs.

# creating a simple range index df_people.index = pd.RangeIndex(start=0, stop=len(df_people), step=1) # accessing data via the index df_people.loc[3] # creating a customized index df_people.index = ['a','b','c','d','e','f','g','h','i','j','k','l','m','n','o'] # accessing data via the index df_people.loc['c']

Changing Data

As we know how to access data, we can also modify the values. It implies similar way, as with other datatypes in Python, applying `=` operator.

# change the age of the person with the first name 'Tina' to 60 df_people.loc[df_people['first_name'] == 'Tina', 'age'] = 60

An important method to alter all values in a column at once is apply(). It is commonly used to apply changes along a whole axis of a DataFrame. This method is often implemented together with a lambda function, which is a short, anonymous function that is only used once and is not saved in the memory. It is introduced with the lambda keyword. This build-in function should be always preferred over a loop in Python, because it is much faster and more efficient.

# change the spelling of the first name to uppercase in all rows df_people['first_name'] = df_people['first_name'].apply(lambda x: x.upper())

In many situations, it is also useful to replace missing or undefined values in the DataFrame. These values are often represented by NaN (not a number). They can be replaced with the fillna method by the column`s mode, mean or median or any other appropriate number.

# calculating the mean, median and mode mode_age =df_people["age"].mode() median_age = df_people["age"].median() mean_age = df_people["age"].mean() # replace missing values with the mode df["age"].fillna(median_age, inplace = True)

Rearranging and Grouping Data

Often the data is not provided in a structured way, which makes it necessary to rearrange in order to get a better overview. The following code shows cases of sorting and grouping the data.

# sort by a single value (ascending) df_people.sort_values(by='age') # sort by a single value (descending) df_people.sort_values(by='age', ascending=False) # sort by multiple values (first name and last name) df_people.sort_values(by=['first_name', 'last_name']) # reorder the columns by a specific frequency df_people_reorder = df_people[['last_name','first_name', 'city', 'age']]

The groupby() method splits the data into groups based on defined criteria. This is helpful for group-dependent analysis, such as in the next example about the calculation of the average age in each city.

# caclaulte mean age by city

df_people.groupby('city')['age'].mean()

Deleting Data

The deletion of data plays an important role in data analysis for getting rid of unnecessary or inaccurate data. The following examples in the code give an overview of a common removing method.

# deleting a specific row: axis=0

df_people.drop('a', axis=0)

# deleting a row by index

df_people.drop(index=['b','c'], inplace=True)

# deleting a row by condition

df_people_copy = df_people[df_people['age'] > 50]

# deleting a column

df_people_copy = df_people.drop(columns=['age'])

# deleting multiple columns

df_people_copy = df_people.drop(columns=['age', 'city'])

There is a debate about the usage of the inplace=True parameter, which can be set in many methods to change the data directly in the DataFrame. On the one hand, this way is more convenient, because there is no necessity to create an additional copy. On the other hand, it has some disadvantages, for example, not all methods support this parameter, such as concat(). Furthermore, the parameter prevents building a chain of methods, which is shown in the following code.

result = (df_people

.drop(columns=['age', 'city']) # delete columns age and city

.sort_values(by=['first_name', 'last_name']) # sort by first_name and last_name

.reset_index(drop=True) # reset the index

)

As already mentioned, Pandas includes methods for handling rows and columns with missing or undefined values.

Instead of replacing the values with fillna(), it is also possible to drop the rows or columns with the dropna(). The final decision about filling or dropping these rows depends on the dataset and personal preferences.

Like in many other methods the dropna()supports the axis parameter, which defines, if a method is applied to the rows or columns. Further methods, which support the axis parameter are sum(), mean(), min(), max(), count(), median(), and apply() to name only a few.

# deleting row with NaN values df_people_copy = df_people.dropna() # deleting columns with NaN values # axis=1 means columns # axis=0 means rows df_people_copy = df_people.dropna(axis=1) # deleting row with NaN values in a specific column df_people_copy = df_people.dropna(subset=['age'])

Besides, NaN rows or duplicated rows occur often in datasets. These rows can be removed with the drop_duplicates() method.

# delete duplicate rows df_people_copy = df_people.drop_duplicates()

After deletion of data, it is often necessary to reset the index, because it is not adjusted automatically during the deletion process.

# reset the index of the dataframe # drop=True: the old index is replaced by the new one df_people_copy = df_people.reset_index(drop=True)

References

1. Pandas. (n.d.). Getting started with pandas. Retrieved from https://pandas.pydata.org/docs/getting_started/

2. W3Schools. (n.d.). Pandas Tutorial. Retrieved from https://www.w3schools.com/python/pandas/default.asp

3. DataCamp. (n.d.). Pandas Tutorial: DataFrames in Python. Retrieved from https://www.datacamp.com/tutorial/pandas

4. Real Python. (n.d.). Pandas DataFrames: Data Analysis with Python. Retrieved from https://realpython.com/pandas-dataframe/

The author of this entry is Felix Pappe.