Machine Learning

| Method categorization | | |
|---|---|---|
| Quantitative | Qualitative | |
| Inductive | Deductive | |
| Individual | System | Global |
| Past | Present | Future |

Background

Traditionally, in computer science and mathematics, you have some input and a rule in the form of a function. You feed the input into the rule and get some output. In this setting, you need to know the rule exactly in order to build a system that gives you the outputs you need. This works well in the many situations where the nature of the inputs and/or the outputs is well understood.

However, when the inputs are noisy or the expected outputs differ from case to case, you cannot hand-craft "rules" that account for every type of input or every imaginable type of output. In such scenarios, another powerful approach can be applied: machine learning. The core idea behind machine learning is that instead of hand-crafting all the rules that map inputs to outputs with reasonable accuracy, you train the machine to learn the rules from the inputs and outputs that you provide.

Trained models have their foundations in mathematics and computer science. As computers of various types (from servers, desktops and laptops to smartphones and the sensors integrated into microwaves, robots and even washing machines) have become ubiquitous over the past two decades, the data that could be used to train machine learning models has become far more accessible and readily available. Advances in computer science have made working with large volumes of data efficient, and as computational resources have increased exponentially over the past decades, we are now able to train more complex models that perform specific tasks with astonishing results, so much so that it almost seems magical.

This article briefly presents the different types of machine learning tasks and the different approaches to training machine learning algorithms. If you are interested in learning more about machine learning, see Russell and Norvig [2] or Mitchell [3].

What the method does

The term "machine learning" does not refer to one specific method. Rather, there are three main groups of methods that fall under the term machine learning: supervised learning, unsupervised learning, and reinforcement learning.

Types of Machine Learning Tasks

Supervised Learning

This family of machine learning methods relies on input-output pairs to "learn" rules. This means that the data you provide can be represented as (X, y) pairs, where X = (x_1, x_2, ..., x_n) is the input data (in the form of vectors or matrices) and y = (y_1, y_2, ..., y_n) is the output (a vector of numbers or categories, one per input), also called the true labels.

Once you have trained a model on the input-output pairs (X, y) with one of the many training algorithms, you can use it on new data (X_new) to make predictions (y_hat).

At the heart of supervised machine learning lies the concept of "learning from data", and from the data entirely. This raises the question of what exactly learning is. In the context of computational analysis, "a computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E."[3]

If you deconstruct this definition, here is what you have:

- Task (T) is what we want the machine learning algorithm to be able to perform once the learning process has finished. Usually, this boils down to predicting a value (regression), deciding which category or group a given data point falls into (classification/clustering), or solving problems in an adaptive way (reinforcement learning).

- Experience (E) is represented by the data on which the learning is based. The data can be either structured, i.e. in a tabular format (e.g. Excel files), or unstructured (images, audio files, etc.).

- Performance measure (P) is a metric, or a set of metrics, used to evaluate the efficacy of the learning process and of the "learned" algorithm. Usually, we want the learning process to take as little time as possible (low training time), we want the learned algorithm to return an output as quickly as possible (low prediction time), and we want the prediction error to be as low as possible.
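To make the definition concrete, here is a minimal Python sketch under made-up assumptions: the task T is to decide whether a number between 0 and 1 is "large", the experience E is a set of labeled examples generated by a hidden rule (x >= 0.37, an arbitrary value chosen only for illustration), and the performance measure P is accuracy on unseen points. The learner simply places its threshold halfway between the largest negative and the smallest positive example; all names are hypothetical.

```python
# Hypothetical task T: decide whether a number x in [0, 1) is "large".
# The hidden rule (unknown to the learner) is x >= 0.37; the value is made up.
HIDDEN_THRESHOLD = 0.37


def target(x: float) -> int:
    """The unknown target function that generates the true labels."""
    return 1 if x >= HIDDEN_THRESHOLD else 0


def learn_threshold(examples: list[tuple[float, int]]) -> float:
    """Experience E: labeled (x, y) pairs. The learned threshold is placed
    halfway between the largest negative and the smallest positive example."""
    largest_negative = max(x for x, y in examples if y == 0)
    smallest_positive = min(x for x, y in examples if y == 1)
    return (largest_negative + smallest_positive) / 2


def accuracy(threshold: float, test_points: list[float]) -> float:
    """Performance measure P: share of test points classified correctly."""
    correct = sum((1 if x >= threshold else 0) == target(x) for x in test_points)
    return correct / len(test_points)


test_points = [i / 1000 for i in range(1000)]  # new, unseen inputs
results = {}
for n in (3, 11, 101):  # increasing amounts of experience E
    xs = [k / (n - 1) for k in range(n)]  # evenly spaced training inputs
    examples = [(x, target(x)) for x in xs]
    results[n] = accuracy(learn_threshold(examples), test_points)
    print(f"{n:>3} training examples -> accuracy {results[n]:.3f}")
```

Running the loop should show accuracy rising as the number of training examples grows, which is the "improves with experience E" part of the definition.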

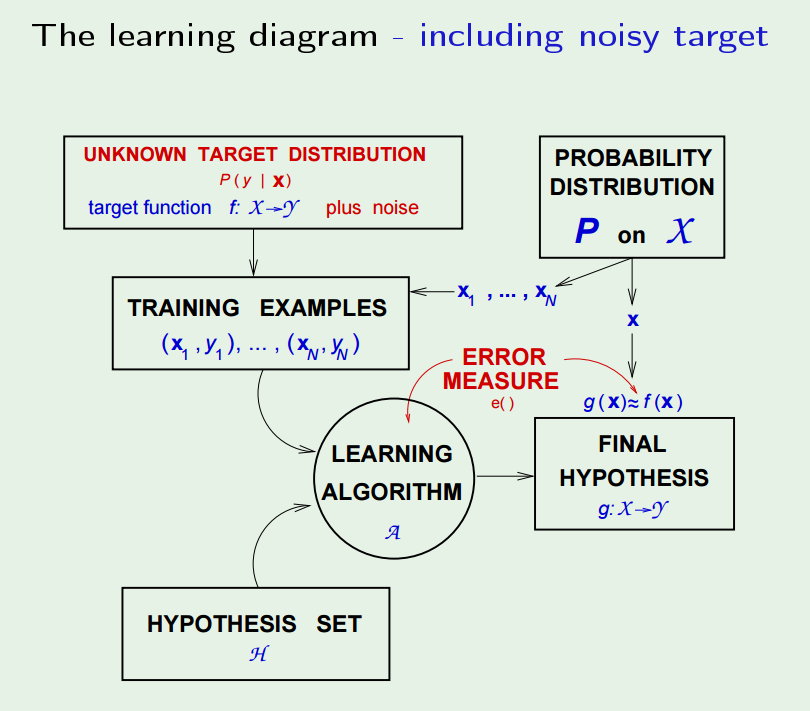

The following figure from the seminal book "Learning from Data" by Yaser Abu Mostafa [1] summarizes the process of supervised learning summarizes the concept in a succinct manner:

The target function is assumed to be unknown, and the training data is assumed to be generated by the target function that is to be estimated. The models trained on the training data are assessed with error measures (called metrics), which guide the strategy for improving the algorithm "learned" in the previous steps. If you have enough data and adopt a good machine learning strategy, you can expect the learned algorithm to perform well on new input data in the future.

Supervised learning tasks can be broadly sub-categorized into regression learning and classification learning.

In regression learning, the objective is to predict a particular numeric value for given input data. An example of a regression task is predicting the price of a house from certain features of the house (e.g. PLZ/ZIP code, number of bedrooms, number of bathrooms, garage size, energy rating) using a machine learning algorithm trained for this specific task.
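A minimal sketch of regression in plain Python, assuming a single input feature (number of bedrooms) and made-up prices rather than the full feature set listed above. It fits a straight line with ordinary least squares, one of many possible regression methods; in practice one would more likely use several features and a library such as scikit-learn. The function names are illustrative.

```python
def fit_linear_regression(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares with one input feature; returns (intercept, slope)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return intercept, slope


def predict(model: tuple[float, float], x: float) -> float:
    """Apply the learned line to a new input."""
    intercept, slope = model
    return intercept + slope * x


# Made-up training data: number of bedrooms (X) and sale price (y).
bedrooms = [1, 2, 3, 4]
prices = [80_000, 110_000, 140_000, 170_000]

model = fit_linear_regression(bedrooms, prices)
print(model)               # (50000.0, 30000.0)
print(predict(model, 5))   # 200000.0, the estimate for an unseen 5-bedroom house
```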

In classification learning, the objective is to predict which class (or category) an observation/sample belongs to, given its characteristics. There are two kinds of classification tasks: binary classification and multiclass classification. As their names suggest, in binary classification an observation falls into one of two classes (e.g. classifying whether an email is spam or not), and in multiclass classification an observation falls into one of many classes (e.g. in a digit recognition task, a picture of a hand-written digit can belong to any class from 0 to 9, so there are 10 classes).
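The sketch below illustrates multiclass classification with k-nearest neighbours, a simple algorithm chosen here purely for illustration (the text does not prescribe one). The observations and the class names "A", "B" and "C" are made up; each new observation is assigned the majority class among its k closest training observations.

```python
import math
from collections import Counter


def knn_predict(train: list[tuple[tuple[float, float], str]],
                query: tuple[float, float], k: int = 3) -> str:
    """Predict the class of `query` by majority vote among its k nearest
    training observations (Euclidean distance)."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Made-up observations with two features each and three classes (multiclass).
# With only two distinct labels, the same code performs binary classification.
train = [
    ((1.0, 1.0), "A"), ((1.0, 2.0), "A"), ((2.0, 1.0), "A"),
    ((8.0, 8.0), "B"), ((8.0, 9.0), "B"), ((9.0, 8.0), "B"),
    ((1.0, 8.0), "C"), ((2.0, 9.0), "C"), ((1.0, 9.0), "C"),
]

for point in [(1.5, 1.5), (8.5, 8.5), (1.2, 8.8)]:
    print(point, "->", knn_predict(train, point))
```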

Unsupervised Learning

Whereas supervised learning is based on pairs of input and output data, unsupervised learning algorithms work with the inputs only, X = (x_1, x_2, ..., x_n), and are used for recognizing patterns rather than for predicting a specific value or class.

Some of the methods that are categorized under unsupervised learning are Principal Component Analysis, Clustering Methods, Collaborative Filtering, Hidden Markov Models, Gaussian Mixture Models, etc.
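Clustering is the simplest of these methods to sketch. Below is a minimal version of k-means (Lloyd's algorithm) in plain Python; note that it receives only the inputs X, with no labels y. The six points are made up, and initialising with the first k points is a simplification chosen to keep the example deterministic.

```python
import math


def kmeans(points: list[tuple[float, float]], k: int, max_iter: int = 100):
    """Plain k-means (Lloyd's algorithm). Returns (centroids, labels).

    Simplification: the first k points serve as initial centroids; practical
    implementations use random or k-means++ initialisation instead.
    """
    centroids = [points[i] for i in range(k)]
    labels = [0] * len(points)
    for _ in range(max_iter):
        # Assignment step: attach every point to its closest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                  for p in points]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(
                    tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:  # keep an empty cluster's centroid where it was
                new_centroids.append(centroids[c])
        if new_centroids == centroids:  # converged: no centroid moved
            break
        centroids = new_centroids
    return centroids, labels


# Made-up, unlabeled inputs X forming two groups.
points = [(0.0, 0.0), (10.0, 10.0), (0.5, 1.0), (9.5, 10.5), (1.0, 0.5), (10.5, 9.0)]
centroids, labels = kmeans(points, k=2)
print("cluster labels:", labels)
print("centroids:", centroids)
```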

Reinforcement Learning

The idea behind reinforcement learning is that the "machine" learns from experience, much like a human or an animal would [1,2]. Accordingly, the input data "does not contain the target output, but instead contains some possible output together with a measure of how good that output is" [1]. The data therefore looks like (X, y, c), where X = (x_1, x_2, ..., x_n) is the input, y = (y_1, y_2, ..., y_n) is the list of corresponding outputs, and c = (c_1, c_2, ..., c_n) is the list of scores for each input-output pair. The objective of the machine is to act in a way that maximizes the overall score.
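A full reinforcement learning example would be long, so the sketch below uses the simplest setting, a multi-armed bandit, in which the input X is constant and the data reduces to (y, c) pairs: the output the agent tried and the score it received. The epsilon-greedy strategy, the three hidden mean scores and the noise level are assumptions chosen for illustration. The agent mostly picks the output with the best average score so far and occasionally explores a random one.

```python
import random

# Hypothetical environment: three possible outputs ("arms"). Each choice earns a
# noisy score c around a hidden mean; these means are made up for illustration.
TRUE_MEAN_SCORES = [0.2, 0.5, 0.9]


def run_epsilon_greedy(steps: int = 2000, epsilon: float = 0.1, seed: int = 0):
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    n_arms = len(TRUE_MEAN_SCORES)
    counts = [0] * n_arms        # how often each output has been tried
    estimates = [0.0] * n_arms   # running average score of each output
    total_score = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: try a random output
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit the best so far
        score = rng.gauss(TRUE_MEAN_SCORES[arm], 0.1)  # feedback c for this choice
        counts[arm] += 1
        # Incremental mean: avg_new = avg_old + (score - avg_old) / n
        estimates[arm] += (score - estimates[arm]) / counts[arm]
        total_score += score
    return estimates, counts, total_score


estimates, counts, total_score = run_epsilon_greedy()
print("estimated mean scores:", [round(e, 2) for e in estimates])
print("times each output was chosen:", counts)
print("overall score:", round(total_score, 1))
```

With these settings the agent should end up choosing the third output most of the time, since its hidden mean score is the highest; the occasional exploration is what lets it discover this.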

Approaches to Training Machine Learning Algorithms

Batch Learning

This machine learning approach, also called offline learning, is the most common approach to machine learning. In this approach, a machine learning model is built from the entire dataset in one go.

The main disadvantage of this approach is that, depending on the computational infrastructure being used, the data might not fit into memory and/or the training process can take a long time. Additionally, models based on batch learning need to be retrained periodically with new training examples in order to keep performing well.

Some examples of batch learning algorithms are Decision Trees (C4.5, ID3, CART), Support Vector Machines, etc.
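As an illustration of batch learning, the sketch below trains a decision "stump", a decision tree with a single split, in plain Python. Every candidate split is evaluated on the whole training set at once, using Gini impurity (the split criterion CART uses for classification). The data and function names are made up; a complete tree learner would apply the same split search recursively to each side.

```python
from collections import Counter


def gini(labels: list[int]) -> float:
    """Gini impurity of a non-empty set of class labels (0.0 means pure)."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def majority(labels: list[int]) -> int:
    """Most frequent class label."""
    return Counter(labels).most_common(1)[0][0]


def fit_stump(X: list[list[float]], y: list[int]):
    """Return (feature, threshold, left_label, right_label) for the single split
    that minimises the weighted Gini impurity over the *entire* dataset."""
    best = None
    for f in range(len(X[0])):
        values = sorted({row[f] for row in X})
        for threshold in values[:-1]:  # the largest value would leave the right side empty
            left = [label for row, label in zip(X, y) if row[f] <= threshold]
            right = [label for row, label in zip(X, y) if row[f] > threshold]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, threshold, majority(left), majority(right))
    if best is None:
        raise ValueError("all features are constant; no split is possible")
    return best[1:]


def predict_stump(stump, row: list[float]) -> int:
    f, threshold, left_label, right_label = stump
    return left_label if row[f] <= threshold else right_label


# Made-up training set: two features per observation, binary labels.
X = [[5.0, 1.0], [3.0, 2.0], [4.0, 7.0], [6.0, 8.0], [2.0, 1.5], [5.5, 9.0]]
y = [0, 0, 1, 1, 0, 1]
stump = fit_stump(X, y)
print(stump)  # (1, 2.0, 0, 1): split on the second feature at 2.0
```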

Online Learning

In this machine learning approach, data is fed into the training algorithm sequentially instead of training on the entire dataset at once. This approach is adopted when the dataset is so large that batch learning is infeasible, or when new data becomes available over time (e.g. stock prices, sales data).

Some examples of online learning algorithms are the Perceptron Learning Algorithm and classifiers and regressors based on stochastic gradient descent.
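The sketch below shows the online idea with the Perceptron Learning Algorithm in plain Python: the model is updated from one (x, y) pair at a time and never needs the whole dataset in memory. The six-example stream is made up and linearly separable (the label is +1 exactly when x1 + x2 > 0); it is replayed until a full pass produces no mistakes only so that the toy example converges. In a real stream, each new observation would simply be passed to update() as it arrives.

```python
class OnlinePerceptron:
    """Perceptron Learning Algorithm, updated one example at a time."""

    def __init__(self, n_features: int):
        self.w = [0.0] * n_features
        self.b = 0.0

    def predict(self, x: list[float]) -> int:
        activation = sum(wi * xi for wi, xi in zip(self.w, x)) + self.b
        return 1 if activation > 0 else -1

    def update(self, x: list[float], y: int) -> bool:
        """Learn from a single (x, y) pair with y in {-1, +1}.
        Returns True if the example was misclassified (and the weights changed)."""
        if self.predict(x) != y:
            self.w = [wi + y * xi for wi, xi in zip(self.w, x)]
            self.b += y
            return True
        return False


# Made-up, linearly separable stream of (x, y) pairs.
stream = [((2.0, 1.0), 1), ((3.0, 2.0), 1), ((-1.0, -2.0), -1),
          ((-2.0, -1.0), -1), ((1.0, 3.0), 1), ((-3.0, 0.0), -1)]

model = OnlinePerceptron(n_features=2)
for epoch in range(100):  # replay the stream until a full pass makes no mistakes
    mistakes = sum(model.update(x, y) for x, y in stream)
    if mistakes == 0:
        break
print("weights:", model.w, "bias:", model.b)  # weights: [2.0, 1.0] bias: 1.0
```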

What about Neural Networks?

Neural networks are a specific approach to machine learning that can be adapted to solve tasks in many different settings. As a result, they have been used for detecting spam emails, identifying objects in images, beating humans at games like chess and Go, grouping customers based on their preferences, and so on. In addition, neural networks can be used in all three types of machine learning tasks mentioned above: supervised, unsupervised, and reinforcement learning.

Please refer to the video series on neural networks by 3Blue1Brown for more.

Strengths & Challenges

Strengths

- Machine learning techniques perform very well, sometimes better than humans, on a variety of tasks (e.g. detecting cancer from X-ray images, playing chess, art authentication).

- In a variety of situations where outcomes are noisy, machine learning models perform better than rule-based models.

Challenges/Weaknesses

- Even though many methods in the field of machine learning have been researched quite extensively, the techniques still suffer from a lack of interpretability and explainability.

- The reproducibility crisis is a major problem in machine learning research, as highlighted in [6].

- Machine learning approaches have been criticized as a "brute force" approach to solving tasks.

- Many machine learning techniques perform well only when the dataset is large. With large datasets, training an ML model requires considerable computational resources, which can be costly in terms of time and money.

Normativity

Machine learning gets criticized for not being as thorough as traditional statistical methods. However, in their essence, machine learning techniques are not that different from statistical methods, as both are based on rigorous mathematics and computer science. The main difference between the two fields is that most of statistics is based on careful experimental design (including hypothesis setting and testing), whereas machine learning places less emphasis on this: its focus is on modeling the algorithm (finding a function f(x) that predicts y) rather than on the experimental setting and on assumptions about the data made to fit theories [4].

However, nothing stops researchers from embedding machine learning techniques into their research design. In fact, many methods that fall under the umbrella term machine learning have been used by statisticians and other researchers for a long time, for instance k-means clustering, hierarchical clustering, various approaches to regression, and principal component analysis.

Besides this, scientists also question how machine learning methods, which are becoming ever more powerful in their predictive abilities, will be governed and used for the benefit (or harm) of society. Jordan and Mitchell (2015) highlight that society lacks a set of data privacy rules that would prevent nefarious actors from applying powerful machine learning methods to publicly available data, or to data they own, for personal gain at others' expense [7].

The ubiquity of technologies empowered by machine learning has made concerns about individual privacy and societal security more relevant. Refer to Dwork [8] to learn about Differential Privacy, a concept closely related to data privacy in a data-driven world.

Outlook

The field of machine learning is likely to continue to flourish as the technologies that harness machine learning techniques become more accessible over time. As such, academic research on machine learning techniques and their performance is likely to remain attractive in many areas of research.

There is no question that the field of machine learning has produced powerful models with great data processing and prediction capabilities. As a result, governments, private companies, and researchers alike use these tools to further their objectives. In light of this, Jordan and Mitchell [7] conclude that machine learning is likely to be one of the most transformative technologies of the 21st century.

Key Publications

- Russell, S., & Norvig, P. (2002). Artificial intelligence: a modern approach.

- LeCun, Yann A., et al. "Efficient backprop." Neural networks: Tricks of the trade. Springer Berlin Heidelberg, 2012. 9-48.

- Krizhevsky, Alex, Ilya Sutskever, and Geoffrey E. Hinton. "Imagenet classification with deep convolutional neural networks." Advances in neural information processing systems. 2012.

- Cortes, Corinna, and Vladimir Vapnik. "Support-vector networks." Machine learning 20.3 (1995): 273-297.

- Valiant, Leslie G. "A theory of the learnable." Communications of the ACM 27.11 (1984): 1134-1142.

- Blei, David M., Andrew Y. Ng, and Michael I. Jordan. "Latent Dirichlet allocation." Journal of Machine Learning Research 3 (2003): 993-1022.

References

- [1] Abu-Mostafa, Y. S., Magdon-Ismail, M., & Lin, H. T. (2012). Learning from data (Vol. 4). New York, NY, USA: AMLBook.

- [2] Russell, S., & Norvig, P. (2002). Artificial intelligence: a modern approach.

- [3] Mitchell, T. M. (1997). Machine learning. McGraw-Hill.

- [4] Breiman, L. (2001). Statistical Modeling: The Two Cultures (with comments and a rejoinder by the author). Statistical Science, 16(3), 199–231.

- [5] 5 things machines can already do better than humans (Vodafone Institut).

- [6] Beam, A. L., Manrai, A. K., & Ghassemi, M. (2020). Challenges to the Reproducibility of Machine Learning Models in Health Care. JAMA, 323(4), 305–306.

- [7] Jordan, M. I., & Mitchell, T. M. (2015). Machine learning: Trends, perspectives, and prospects. Science, 349(6245), 255–260.

- [8] Dwork, C. (2006). Differential privacy. In ICALP’06 Proceedings of the 33rd International Conference on Automata, Languages and Programming - Volume Part II (pp. 1–12).

Further Information

- Introduction to Machine Learning in Python

- Machine Learning in R for Beginners

- Machine Learning Lecture from Andrew Ng (Stanford CS229 2018)

- Lex Fridman - Deep Learning State of the Art (2020) [MIT Deep Learning Series]