Cronbach's Alpha


Latest revision as of 19:42, 18 May 2021

In short: Cronbach’s alpha is a measure used to estimate the reliability, or internal consistency, of a composite score or set of test items. It is calculated by comparing the sum of the variances of the individual items with the variance of the total score across all observations (usually individual test takers).


What is Cronbach's Alpha?

In the context of test reliability, Cronbach's Alpha is one way of measuring the extent to which a given measurement is a consistent measure of a concept. It quantifies internal consistency: the extent to which all items in a test measure the same concept, which is closely connected to the interrelatedness of the items within the test.

Imagine an individual takes a happiness survey. The calculated happiness score would be highly reliable (consistent) if the survey produces a similar result when the same individual retakes it under the same conditions. However, the measure would not be reliable at all if an individual whose actual happiness has not changed takes the survey twice back-to-back and receives one high and one low happiness score. Cronbach's Alpha is used under the assumption that you have multiple items measuring the same underlying construct. In this context you might have five questions asking about different things which, when combined, could be said to measure overall happiness.

How to calculate Cronbach's Alpha?

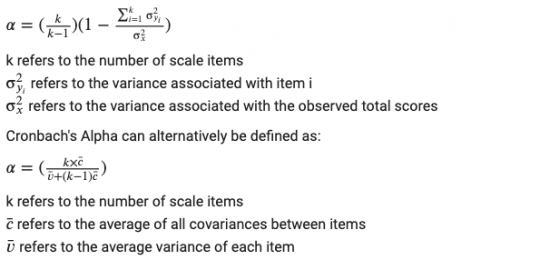

Cronbach's Alpha is calculated by correlating the score for each scale item with the total score for each observation (usually individual test takers), and then comparing that to the variance for all individual item scores:
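To make the formula concrete, here is a minimal base-R sketch, assuming a numeric data frame or matrix with one column per item and no missing values. The function name `cronbach_alpha` and the toy data are hypothetical illustrations, not the implementation used by the psych package.

```r
# Hypothetical helper: Cronbach's alpha computed directly from the formula
# alpha = k / (k - 1) * (1 - sum of item variances / variance of total score)
cronbach_alpha <- function(items) {
  items <- as.matrix(items)
  k <- ncol(items)                     # number of items
  item_vars <- apply(items, 2, var)    # variance of each item (sigma^2_{y_i})
  total_var <- var(rowSums(items))     # variance of the summed score (sigma^2_x)
  (k / (k - 1)) * (1 - sum(item_vars) / total_var)
}

# Made-up toy data: three items that move in perfect lockstep
toy <- data.frame(
  q1 = c(1, 2, 3, 4, 5),
  q2 = c(1, 2, 3, 4, 5),
  q3 = c(1, 2, 3, 4, 5)
)
cronbach_alpha(toy)  # [1] 1
```

Because the three items are perfectly correlated, the item variances (2.5 each) add up to only a third of the total-score variance (22.5), so alpha reaches its maximum of 1.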

Example: World Happiness Report

Data are available at [Google Drive](https://drive.google.com/file/d/1DLZ_gVFhsT0dBROLE79h2Y5_6NXRF2Br/view). For the sake of clarity, this example selects only four of the data set's variables as items measuring the construct "Happiness" (Happiness.Score).

```r
# use the libraries tidyverse and psych
# (psych is loaded after tidyverse so that psych's alpha() masks ggplot2's alpha())
library(tidyverse)
library(psych)

# load data and select four exemplary columns
# (assumes the downloaded data have already been read into a data frame
#  called World_Happiness_Report)
World_Happiness_Report %>%
  select(economy = Economy..GDP.per.Capita., Family,
         health = Health..Life.Expectancy., Freedom) -> World_Happiness_Report_4col

# calculate Cronbach's Alpha
# The argument 'check.keys' checks whether all items have the same polarity;
# if necessary, wrongly polarized items are reversed by R
alpha(World_Happiness_Report_4col, check.keys = TRUE)

# Output:
# Reliability analysis
# Call: alpha(x = World_Happiness_Report_4col, check.keys = TRUE)
#
#  raw_alpha std.alpha G6(smc) average_r S/N   ase mean   sd median_r
#       0.81      0.83    0.83      0.55 4.8 0.017 0.78 0.23     0.52
#
#  lower alpha upper     95% confidence boundaries
#  0.78  0.81  0.85
#
# Reliability if an item is dropped:
#         raw_alpha std.alpha G6(smc) average_r S/N alpha se var.r med.r
# economy      0.71      0.72    0.65      0.46 2.6    0.034 0.018  0.42
# Family       0.73      0.77    0.77      0.52 3.3    0.024 0.078  0.37
# health       0.70      0.75    0.70      0.49 2.9    0.029 0.029  0.42
# Freedom      0.85      0.88    0.86      0.71 7.5    0.016 0.014  0.69
#
# Item statistics
#           n raw.r std.r r.cor r.drop mean   sd
# economy 155  0.94  0.89  0.90   0.82 0.98 0.42
# Family  155  0.85  0.84  0.76   0.71 1.19 0.29
# health  155  0.88  0.86  0.85   0.80 0.55 0.24
# Freedom 155  0.55  0.66  0.45   0.42 0.41 0.15
```

Output:

The first column (raw_alpha) of the first table contains the value of Cronbach's Alpha for the total scale (in this case 0.81).

In the table "Reliability if an item is dropped", the first column (raw_alpha) shows the scale's internal consistency when the respective item is removed. The lower this value is compared to the alpha of the total scale, the more the item contributes to reliability. In this example, the total alpha could be increased from 0.81 to 0.85 by dropping the item 'Freedom'.
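The idea behind the raw_alpha column of that table can be sketched in base R by recomputing alpha once per item with that item left out. The helper names and the toy data below are hypothetical; the sketch only mirrors the raw_alpha column, not psych's full output.

```r
# Hypothetical helper: raw Cronbach's alpha from the variance formula
cronbach_alpha <- function(items) {
  items <- as.matrix(items)
  k <- ncol(items)
  (k / (k - 1)) * (1 - sum(apply(items, 2, var)) / var(rowSums(items)))
}

# Hypothetical helper: alpha of the remaining items when each item is dropped
alpha_if_dropped <- function(items) {
  items <- as.matrix(items)
  sapply(colnames(items), function(item) {
    cronbach_alpha(items[, colnames(items) != item, drop = FALSE])
  })
}

# Made-up data: q1 to q3 agree closely, q4 runs against them
toy <- data.frame(
  q1 = c(1, 2, 3, 4, 5, 4),
  q2 = c(2, 2, 3, 5, 5, 4),
  q3 = c(1, 3, 3, 4, 4, 5),
  q4 = c(5, 1, 4, 2, 3, 1)
)
round(cronbach_alpha(toy), 2)
round(alpha_if_dropped(toy), 2)
```

With these made-up numbers, alpha for all four items is about 0.40, dropping q4 raises it to about 0.94, and dropping any of the other items lowers it, which is the same pattern as 'Freedom' in the World Happiness example.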

In the item statistics, n gives the number of valid values for each item. The columns mean and sd (standard deviation) can serve as indicators of item difficulty: the higher the mean of an item, the easier it is to agree with it. Items with very high or very low means should not be included in the scale, because they are too easy or too difficult, respectively.
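The n, mean and sd columns can be reproduced with base R alone. The sketch below is a hypothetical helper applied to made-up answers on a 1 to 5 scale, with `NA` marking missing answers; it only approximates the corresponding psych columns.

```r
# Made-up responses on a 1-5 scale; NA marks a missing answer
toy <- data.frame(
  q1 = c(4, 5, 5, 4, NA, 5),
  q2 = c(2, 3, 1, 2, 3, 2),
  q3 = c(3, 3, 4, NA, 2, 3)
)

# Hypothetical helper mirroring the n, mean and sd columns of the item statistics
item_stats <- function(items) {
  data.frame(
    n    = colSums(!is.na(items)),            # number of valid values per item
    mean = colMeans(items, na.rm = TRUE),     # higher mean = easier to agree with
    sd   = apply(items, 2, sd, na.rm = TRUE)  # spread of the answers
  )
}
round(item_stats(toy), 2)
```

Here q1 has a mean of 4.6 on a 1 to 5 scale, so it would be a candidate for an item that is too easy to agree with.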

How to interpret Cronbach's Alpha?

- The resulting alpha coefficient typically ranges from 0 to 1; it can never exceed 1, but it can become negative

- α = 0 if the items share no covariance, i.e. they are entirely uncorrelated with one another

- α will approach 1 if the items have high covariances and are highly correlated. The higher the coefficient, the more likely it is that the items measure the same underlying concept

- Negative values for α indicate problems with your data, e.g. you may have forgotten to reverse-score some items.
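The zero and negative cases can be checked with small made-up data sets. The sketch below assumes the same hypothetical `cronbach_alpha` helper built from the variance formula; the item names and values are illustrative only.

```r
# Hypothetical helper: raw Cronbach's alpha from the variance formula
cronbach_alpha <- function(items) {
  items <- as.matrix(items)
  k <- ncol(items)
  (k / (k - 1)) * (1 - sum(apply(items, 2, var)) / var(rowSums(items)))
}

# Two made-up items with zero covariance: alpha is 0
uncorrelated <- data.frame(a = c(1, -1, 1, -1), b = c(1, 1, -1, -1))
cronbach_alpha(uncorrelated)   # 0

# Three made-up items on a 1-5 scale; q3 is worded in the opposite direction
survey <- data.frame(q1 = 1:5, q2 = 1:5, q3 = 5:1)
cronbach_alpha(survey)         # -3: q3 has not been reverse-scored

# Reverse-score q3 on the 1-5 scale (6 - x) and recompute
survey$q3 <- 6 - survey$q3
cronbach_alpha(survey)         # 1
```

Forgetting to reverse-score a single item drives alpha far below zero here; after reversing it, the three items agree perfectly.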

What counts as a 'good' coefficient differs between application fields and depends on theoretical knowledge of the construct. Methodologists most often recommend a minimum coefficient between 0.65 and 0.8. In many cases values above 0.8 are considered best, whereas values below 0.5 are considered unacceptable.

Be aware of:

- A very high α coefficient as a possible sign of redundancy

When interpreting a scale’s α coefficient, keep in mind that alpha is a function of both the covariances among the items and the number of items in the analysis. A high coefficient is therefore not solely a mark of a reliable set of items, since alpha can be increased simply by adding more items. Moreover, a very high α coefficient (e.g. > 0.95) can be a sign of redundancy among the scale items.

- Cronbach's Alpha is also affected by the length of the test

A larger number of items can result in a larger α, and a smaller number of items in a smaller α. If alpha is too high, your analysis may include items asking the same thing, whereas a low value for alpha may mean that there are not enough questions on the test.
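One way to see the effect of test length is the formula for standardized alpha, which depends only on the number of items $k$ and the average inter-item correlation $\bar{r}$: $\alpha_{std} = k\bar{r} / (1 + (k-1)\bar{r})$. The base-R sketch below uses a hypothetical helper name; plugging in the four items and the average_r of 0.55 from the World Happiness output gives about 0.83, matching its std.alpha, while the remaining lines hold an assumed $\bar{r} = 0.3$ fixed and only vary $k$.

```r
# Hypothetical helper: standardized alpha from the number of items (k)
# and the average inter-item correlation (r_bar)
standardized_alpha <- function(k, r_bar) {
  (k * r_bar) / (1 + (k - 1) * r_bar)
}

# Four items with average_r = 0.55, as in the World Happiness output
round(standardized_alpha(4, 0.55), 2)   # 0.83

# Same average correlation (0.3), increasingly long tests
k_values <- c(5, 10, 20)
round(sapply(k_values, standardized_alpha, r_bar = 0.3), 2)   # 0.68 0.81 0.90
```

With the average correlation unchanged, lengthening the test from 5 to 20 items pushes alpha from about 0.68 to about 0.90, which is why a high alpha alone does not prove that a scale is well constructed.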

- Assessing how 'good' a scale is at measuring a concept requires more than a simple test of reliability

Highly reliable measurements are defined as containing zero or very little random measurement error, which would otherwise produce inconsistent results. However, a high α coefficient says nothing about the scale's validity, e.g. whether the items reflect the underlying concept appropriately. Construct validity, for example, can be addressed by examining whether empirical relationships exist between the measure of the concept of interest and other concepts to which it should theoretically be related.

Further useful links:

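- [alpha-function in R documentation](https://www.rdocumentation.org/packages/psych/versions/2.0.9/topics/Alpha)

- [The Use of Cronbach’s Alpha when Developing and Reporting Research Instruments in Science Education](https://link.springer.com/article/10.1007/s11165-016-9602-2)

- [On the Use, the Misuse, and the Very Limited Usefulness of Cronbach's Alpha](https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2792363/)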