Web Scraping in Python

Web Scraping: The Definition

Web Scraping is the process of extracting information from websites in an automated manner. It therefore deals primarily with the building blocks of webpages, the (Extensible) Hypertext Markup Language ((X)HTML). Many people consider Data Scraping and Web Scraping to be synonyms. However, Data Scraping covers extracting data in general, regardless of where it is stored, while Web Scraping deals specifically with data from websites. Other data scraping techniques, such as Screen Scraping and Report Mining, are also of interest but are beyond the scope of this article.

When Do We Use Web Scraping?

In general, we can fetch data from websites by making API calls or by subscribing to RSS feeds. An API is an Application Programming Interface. You can imagine it as two programs talking to each other via this interface. When you make an API call, one program asks the other to provide information, in this case data. An RSS (Rich Site Summary) feed is basically an automated newsletter that informs you when there is new data on the webpage you are interested in. However, a website does not have to provide its data via these methods. In such cases, web scraping is a reliable way to extract data. Some practical scenarios where web scraping is useful are listed below:

- Market Research: To keep an eye on the competitors without performing time-consuming manual research

- Price Tracking: Tracking product prices on websites like Amazon, as showcased by camelcamelcamel

- News Monitoring: Periodically extracting news related to selected topics from various websites

- Real Estate Aggregation: Web scraping can be used to aggregate the real estate listings across multiple websites into a single database, see for example Zillow

Guidelines for Web Scraping

The following guidelines should always be observed to scrape websites appropriately and considerately.

- Always read through the terms and conditions of a website and see if there are any particular rules about scraping.

- Verify whether the content has been published in the public domain or not. If it has, there should be no problem in performing web scraping. The easiest option is to use public domain resources, which are often marked as such, for example with the Creative Commons Zero (CC0) license. If you are unsure, consult whoever is responsible for the website.

- Never scrape more than you need, and only hit the server at regular and acceptable intervals, since web scraping consumes a lot of resources on the host server. In practice, this means avoiding many requests within a short period. You can also track how long things take, as we will see later.
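The last guideline can be sketched in a few lines. The following is a minimal illustration, not a definitive implementation: the helper name `fetch_politely`, the `fetch` callable and the one-second default delay are assumptions made for this example and do not come from any library. It pauses between consecutive requests and records how long each one takes.

```python
import time
from typing import Callable, Dict, Iterable, Tuple


def fetch_politely(
    urls: Iterable[str],
    fetch: Callable[[str], str],
    delay_seconds: float = 1.0,
) -> Dict[str, Tuple[str, float]]:
    """Fetch each URL in turn, pausing between consecutive requests.

    `fetch` is any function that takes a URL and returns the page content
    (hypothetical here; in practice it could wrap requests.get).
    Returns a mapping of URL -> (content, seconds the fetch took).
    """
    results: Dict[str, Tuple[str, float]] = {}
    for index, url in enumerate(urls):
        if index > 0:
            # Wait before every request except the first one
            time.sleep(delay_seconds)
        started = time.monotonic()
        content = fetch(url)
        elapsed = time.monotonic() - started
        results[url] = (content, elapsed)
    return results


# Usage with a stand-in fetch function, so no real server is contacted
def fake_fetch(url: str) -> str:
    return f"<html>content of {url}</html>"


pages = fetch_politely(
    ["https://example.com/a", "https://example.com/b"],
    fake_fetch,
    delay_seconds=0.1,
)
for url, (content, elapsed) in pages.items():
    print(url, len(content), f"{elapsed:.4f} s")
```

Passing the fetch function in as a parameter keeps the waiting logic separate from the HTTP library, so the same helper can be tried out without network access.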

Web Scraping Using BeautifulSoup

We now focus our attention on the actual process of web scraping using the BeautifulSoup library in Python. Alternatives to this library are also available and have been mentioned in the Further Reading section.

In this tutorial, we attempt to scrape the data from the IMDB Top 250 Movies webpage. Specifically, we will be extracting the following movie details.

- Rank

- Title

- Rating

- Crew Details

- Release Year

- Number of user ratings

The prerequisites to follow the tutorial have been listed here:

- Python (version 3.9.8 is used in this tutorial)

- Requests library

- BeautifulSoup library

- pandas library

The entire process has been detailed in a stepwise manner as seen below.

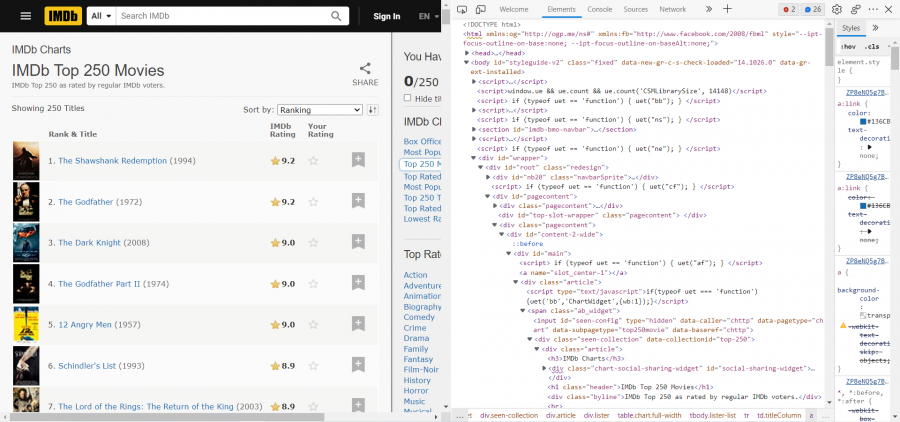

Step 1: Inspect Webpage

First, we right-click anywhere on the selected webpage and choose "Inspect" to open the developer tools window and see the HTML structure. Here's a glimpse of how that looks for our IMDB webpage.

Now, we search for the HTML element where our data is present. We can do so with the element picker in Chrome's developer tools (the "Select an element in the page to inspect it" button in the toolbar of the developer tools window).

With this tool, you can simply hover over any element on the webpage and it will show you the respective HTML code.

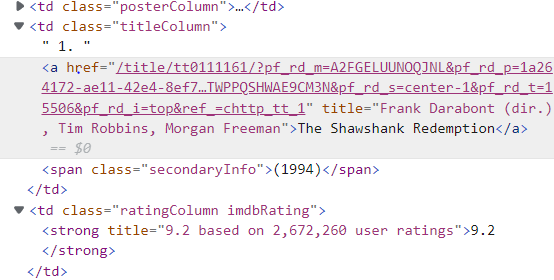

Using this tool on the IMDB webpage, we find that our data is present in various td elements with the classes "titleColumn" and "ratingColumn imdbRating".

Step 2: Fetching the HTML Document

To extract the information from the HTML document, we first need to fetch it into our code. We use the requests library here.

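    import requests

    # IMDB Top 250 chart page
    url = "http://www.imdb.com/chart/top"

    # Ask for English-language content
    headers = {'accept-language': 'en-US'}

    # Send an HTTP GET request and keep the response
    response = requests.get(url, headers=headers)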

In short, we give the requests library the URL of the HTML document and ask it to send an HTTP GET request to that URL, since we only want to fetch the content and not modify it. The 'headers' object holds the HTTP headers we send along with the request. Since we want our movie titles in English, we use the accept-language header to tell the server that we prefer English (en-US) content in the HTTP response.

Further information on HTTP requests has been provided in the Further Reading section.
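To see what this request looks like without contacting the server, it can be built and prepared first. The sketch below uses the requests library's `Request` class and its `prepare()` method; the helper `fetch_html` and its 10-second timeout are assumptions added for illustration and are not part of the tutorial's own code.

```python
import requests

url = "http://www.imdb.com/chart/top"
headers = {"accept-language": "en-US"}

# Build the GET request and prepare it, but do not send it
prepared = requests.Request("GET", url, headers=headers).prepare()

print(prepared.method)                      # HTTP method that would be used
print(prepared.url)                         # URL that would be requested
print(prepared.headers["accept-language"])  # header value that would be sent


def fetch_html(page_url: str, request_headers: dict, timeout_seconds: float = 10) -> str:
    """Hypothetical helper: send the GET request and return the HTML text.

    Raises requests.HTTPError if the server answers with an error status.
    """
    response = requests.get(page_url, headers=request_headers, timeout=timeout_seconds)
    response.raise_for_status()
    return response.text
```

Checking the status before parsing avoids feeding an error page to the parser, and the timeout keeps the script from waiting indefinitely on a slow server.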

Step 3: Processing HTML Using BeautifulSoup

Now, we extract the required information from the HTML document (stored in the 'response' object). For this, we use the BeautifulSoup library.

    import time

    from bs4 import BeautifulSoup

    # Start the timer for the processing step
    start_time = time.time()

    # Parse the HTML document fetched in Step 2
    soup = BeautifulSoup(response.text, "html.parser")

    # Title cells, and the 'title' attribute of each rating's strong element
    movies = soup.select('td.titleColumn')
    ratings = [rating.attrs.get('title') for rating in soup.select('td.ratingColumn.imdbRating strong')]

    movie_data = []
    for movie, rating_title in zip(movies, ratings):
        # rating_title looks like "(rating) based on (count) user ratings"
        rating_info = rating_title.split(" ")
        data = {'rank': movie.get_text().strip().split(".")[0],
                'title': movie.a.get_text(),
                'crew': movie.a.attrs.get('title'),
                'release_year': movie.span.get_text()[1:-1],
                'rating': rating_info[0],
                'num_user_ratings': rating_info[3].replace(",", "")}
        movie_data.append(data)

    end_time = time.time()
    print("Execution time:", end_time - start_time, "seconds")

Let us now dissect this code snippet.

- First, we import BeautifulSoup, which we need for the web scraping, and time, which we use to measure how long the processing takes. We also record the start time at the beginning.

- Then, we create an HTML parser to parse the HTML document. Parsing means that the HTML code is transformed into a structure that we can work with in Python.

- We then fetch the HTML elements of interest with the select() method, which accepts CSS selectors. By passing the argument 'td.titleColumn', we ask it to fetch the td elements with the class 'titleColumn'. This data is stored in 'movies'.

- We have already seen that the rating information is present in a different element altogether. So, we use a CSS selector with a different string, 'td.ratingColumn.imdbRating strong'. This fetches the 'strong' element inside each td element that has both the classes 'ratingColumn' and 'imdbRating' (see the HTML code in the last figure). Finally, we read the content of the 'title' attribute of each selected strong element. This data is stored in 'ratings'.

- We now iterate over the 'movies' and 'ratings' objects to fetch the individual strings and extract the required data per movie. In each iteration, the following happens:

  - Each string in 'ratings' is formatted as "(rating) based on (count) user ratings". We therefore split the string at each space character and pick the first and fourth elements to get the rating and the number of user ratings. We also remove the "," characters from the number of user ratings.

  - The rank is at the very beginning of the text of each movie element, before the first ".".

  - The crew details are in the title attribute of the anchor tag, and the movie title is the text between the anchor tags.

  - The release year is between the span tags and is formatted as "(year)". Hence, we fetch the content of the span tags and drop the first and last characters of the string.

- All the extracted details are put into a dictionary object 'data' and a list of all the dictionaries prepared in this manner is created.

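- Lastly, we record the end time and print the elapsed time. Note that the timer starts only after the request in Step 2 has completed, so this value covers the processing alone; to include the server's response time, start the timer before calling requests.get. Keep an eye on this if you send several requests.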
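Splitting the rating string by position depends on its exact wording. As a hedged alternative, the same two values can be pulled out with a regular expression that fails loudly when the format changes. The function name `parse_rating_title` and the sample string are made up for illustration; they follow the "(rating) based on (count) user ratings" format described above.

```python
import re
from typing import Tuple

# Matches strings such as "9.1 based on 1,234,567 user ratings"
RATING_PATTERN = re.compile(r"^\s*(\d+(?:\.\d+)?) based on ([\d,]+) user ratings\s*$")


def parse_rating_title(rating_title: str) -> Tuple[float, int]:
    """Return (rating, number of user ratings) from the title attribute text."""
    match = RATING_PATTERN.match(rating_title)
    if match is None:
        raise ValueError(f"Unexpected rating format: {rating_title!r}")
    rating = float(match.group(1))
    num_user_ratings = int(match.group(2).replace(",", ""))
    return rating, num_user_ratings


# Invented sample value, not real IMDB data
rating, num_user_ratings = parse_rating_title("9.1 based on 1,234,567 user ratings")
print(rating, num_user_ratings)
```

Unlike the split-based version, this returns numbers rather than strings, which can save a conversion step later.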

To follow the Step 3 code more easily, keep the inspection tool open and compare the code with the title and rating columns.
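If the live page is not at hand, the selectors can also be tried on a small fragment. The HTML below is a hypothetical sample modelled on the structure described in this tutorial (a td with the class 'titleColumn' and a td with the classes 'ratingColumn imdbRating'). The movie, crew and numbers are invented placeholders, and the real page's markup may differ.

```python
from bs4 import BeautifulSoup

# Hypothetical fragment; all values are invented placeholders
SAMPLE_HTML = """
<table><tr>
  <td class="titleColumn">
    1.
    <a href="/title/example/" title="Jane Doe (dir.), John Roe">Example Movie</a>
    <span class="secondaryInfo">(1999)</span>
  </td>
  <td class="ratingColumn imdbRating">
    <strong title="9.1 based on 1,234,567 user ratings">9.1</strong>
  </td>
</tr></table>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# The same selectors as in Step 3, applied to the fragment
cell = soup.select("td.titleColumn")[0]
strong = soup.select("td.ratingColumn.imdbRating strong")[0]

sample = {
    "rank": cell.get_text().strip().split(".")[0],  # text before the first "."
    "title": cell.a.get_text(),                     # text between the anchor tags
    "crew": cell.a.attrs.get("title"),              # title attribute of the anchor
    "release_year": cell.span.get_text()[1:-1],     # "(1999)" without the parentheses
    "rating_title": strong.attrs.get("title"),      # raw rating string
}
print(sample)
```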

Step 4: Storing the Data

We now look at how to store the data prepared so far. This is straightforward in Python: we store the data in a pandas DataFrame with the appropriate column names and then write it to a .csv file.

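    import pandas as pd

    # One row per movie, with the columns in a fixed order
    df = pd.DataFrame(movie_data, columns=['rank', 'title', 'crew', 'release_year', 'rating', 'num_user_ratings'])

    # Write the table to disk without the index column
    df.to_csv('movie_data.csv', index=False)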

We have successfully stored our data in a file titled 'movie_data.csv'.
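If pandas is not otherwise needed, the same file layout can be produced with Python's built-in csv module. This is an alternative sketch, not the approach used above. The single row in `movie_data` is an invented placeholder, the helper name `write_movies` is hypothetical, and the text is written to an in-memory buffer rather than to 'movie_data.csv' so that the example has no side effects.

```python
import csv
import io

COLUMNS = ["rank", "title", "crew", "release_year", "rating", "num_user_ratings"]

# Invented placeholder row in the same shape as the scraped dictionaries
movie_data = [
    {
        "rank": "1",
        "title": "Example Movie",
        "crew": "Jane Doe (dir.), John Roe",
        "release_year": "1999",
        "rating": "9.1",
        "num_user_ratings": "1234567",
    },
]


def write_movies(rows, file_obj) -> None:
    """Write a header line followed by one CSV line per movie dictionary."""
    writer = csv.DictWriter(file_obj, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(rows)


buffer = io.StringIO()
write_movies(movie_data, buffer)
csv_text = buffer.getvalue()
print(csv_text)

# Reading the text back yields the same rows
restored = list(csv.DictReader(io.StringIO(csv_text)))
```

To write an actual file, pass `open('movie_data.csv', 'w', newline='')` instead of the buffer.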

External Links

- Data Scraping - Okta.com

- Data Scraping - Wikipedia

- Web Scraping Uses - Kinsta.com

- Dataquest tutorial For Web Scraping: Legal aspects of web scraping are also mentioned here

- Real Python Tutorial On BeautifulSoup

- GeeksForGeeks Tutorial For IMDB Ratings and Details

Further Reading

- What is Extensible Hypertext Markup Language?

- RSS feed and their uses

- What is API?

- BeautifulSoup Documentation: For more on CSS Selectors and other ways of using BeautifulSoup

- BeautifulSoup Alternatives

- Types of HTTP Requests

- HTTP Messages (Requests and Responses)

The author of this entry is XX. Edited by Milan Maushart