Bootstrapping in Python

Latest revision as of 12:31, 3 September 2024

THIS ARTICLE IS STILL IN EDITING MODE


Introduction

The term "bootstrap" comes from the expression "to pull oneself up by one's bootstraps", which means achieving the seemingly impossible without external assistance. Like Raspe's Baron Munchausen, who pulled himself and his horse out of a swamp by his own hair, the bootstrap technique obtains statistical insights from the data alone, without the need for predefined formulas.

Bootstrap can be applied to any sample. It is especially useful when:

1. the observations do not follow a normal distribution;

2. there are no statistical tests for the desired quantities.

Theoretical aspect

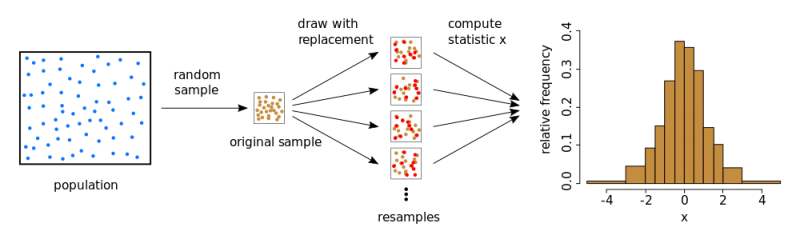

Bootstrapping (sometimes also just bootstrap) is a method for estimating statistical quantities without reliance on explicit formulas.

To derive a specified statistic for a sample (such as the mean or variance, quantile, etc.), using bootstrap, pseudo-samples or subsamples are generated from the original sample by drawing with replacement. The mean (variance, quantile, etc.) is then computed for each pseudo-sample. Theoretically, this process can be repeated multiple times, generating numerous instances of the parameter of interest and allowing for the assessment of its distribution.
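The resampling procedure described above can be sketched as a small helper. This is a minimal illustration that uses only the Python standard library; the function name `bootstrap_distribution`, the fixed seed and the data values are assumptions made for the example and are not part of the article's own code.

```python
import random
import statistics


def bootstrap_distribution(sample, statistic, num_resamples=1000, seed=0):
    """Return the statistic computed on num_resamples pseudo-samples."""
    rng = random.Random(seed)  # fixed seed only to make the sketch reproducible
    n = len(sample)
    values = []
    for _ in range(num_resamples):
        # draw n observations with replacement from the original sample
        pseudo_sample = rng.choices(sample, k=n)
        values.append(statistic(pseudo_sample))
    return values


sample = [2.1, 3.4, 1.9, 5.6, 4.2, 3.8, 2.7, 4.9, 3.1, 3.6]  # made-up data
boot_means = bootstrap_distribution(sample, statistics.mean)

print(f"sample mean: {statistics.mean(sample):.2f}")
# the spread of the bootstrap means estimates the standard error of the mean
print(f"bootstrap standard error: {statistics.stdev(boot_means):.2f}")
```

Any function that maps a list of observations to a number (for example `statistics.median`) can be passed as `statistic`, which is what makes the approach flexible.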

Advantages:

1. Simplicity and flexibility.

2. No knowledge about data distribution is needed.

3. Helps to avoid the cost of repeating the experiment to get more data.

4. Often provides more accurate estimates of a statistic than "standard" methods that assume a normal distribution, in particular when that assumption does not hold.

Disadvantages:

1. Computational intensity. The process involves resampling from the dataset multiple times, which might be time-consuming for extensive datasets.

2. Dependence on the original data. Bootstrapping assumes that the original dataset is representative of the population.

3. Sensitivity to outliers. Since bootstrap samples with replacement, outliers may be overrepresented in the resampled datasets, affecting the stability of the results.

Synthetic examples of bootstrapping

Example 1. Bootstrapping for quantile evaluation

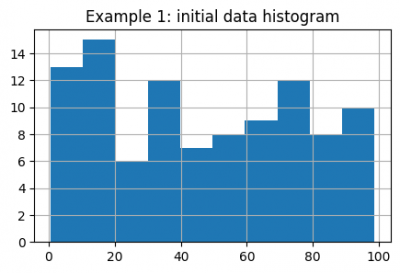

We will generate a sample of 100 values drawn uniformly from the interval [0, 100):

import pandas as pd

import numpy as np

from scipy.stats import ttest_ind

import matplotlib.pyplot as plt

np.random.seed(42)

data = pd.Series(np.random.rand(100) * 100)

data.hist(figsize=(5, 3))

plt.title("Example 1: initial data histogram")

plt.show()

Because the data are not normally distributed, using bootstrap to evaluate extreme quantiles (for example, as outlier thresholds) is much more reliable here than calculating quantiles of a fitted normal distribution. For this purpose we will generate 1000 subsamples using the pandas sample function in a loop. It is important to set replace=True, because bootstrap relies on drawing samples with replacement. We will evaluate the upper 0.99 and lower 0.01 quantiles.

upper_quantiles = []

lower_quantiles = []

for i in range(1000):

    subsample = data.sample(frac=1, replace=True)

    upper_quantiles.append(subsample.quantile(0.99))

    lower_quantiles.append(subsample.quantile(0.01))

upper_quantiles = pd.Series(upper_quantiles)

lower_quantiles = pd.Series(lower_quantiles)
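The role of replace=True in the loop above can be illustrated with a short sketch. It uses the standard library instead of pandas, and the 100 distinct integers are a hypothetical stand-in for real observations. Without replacement, a resample of the full size is only a reshuffle of the original data, so every statistic would come out identical in each iteration.

```python
import random

rng = random.Random(42)
original = list(range(100))  # 100 distinct observations (hypothetical)

# bootstrap-style draw: the same observation may appear several times
with_replacement = rng.choices(original, k=len(original))

# draw without replacement: a permutation of the original data
without_replacement = rng.sample(original, k=len(original))

# on average about 63% of the observations appear in a bootstrap resample
print(len(set(with_replacement)))
print(len(set(without_replacement)))             # always 100
print(sorted(without_replacement) == original)   # True
```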

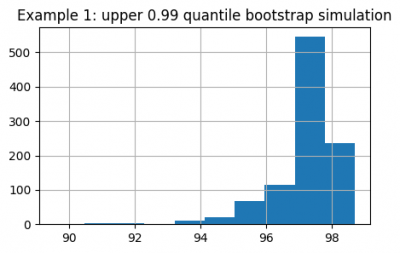

We get an experimental distribution for both quantiles:

upper_quantiles.hist(figsize=(5, 3))

plt.title("Example 1: upper 0.99 quantile bootstrap simulation")

plt.show()

Now we can estimate confidence intervals for the upper 0.99 and lower 0.01 quantiles:

# upper 0.99 quantile lies in this interval with 95% confidence

print(f'{upper_quantiles.quantile(0.025):.2f}') # Out: 94.89

print(f'{upper_quantiles.quantile(0.975):.2f}') # Out: 98.69

# lower 0.01 quantile lies in this interval with 95% confidence

print(f'{lower_quantiles.quantile(0.025):.2f}') # Out: 0.55

print(f'{lower_quantiles.quantile(0.975):.2f}') # Out: 4.62
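The interval construction used above (taking the 0.025 and 0.975 quantiles of the bootstrap values) is often called the percentile interval. A minimal sketch for an arbitrary confidence level follows. The helper `percentile_interval` is a hypothetical name, the data are made up, and the simple nearest-rank rule used here differs slightly from the linear interpolation that pandas `quantile` applies by default.

```python
import random
import statistics


def percentile_interval(boot_values, confidence=0.95):
    """Percentile bootstrap interval based on a simple rank rule."""
    ordered = sorted(boot_values)
    tail = (1 - confidence) / 2
    last = len(ordered) - 1
    return ordered[int(tail * last)], ordered[round((1 - tail) * last)]


rng = random.Random(42)
data = [rng.random() * 100 for _ in range(100)]  # made-up uniform data

# bootstrap distribution of the median
boot_medians = [
    statistics.median(rng.choices(data, k=len(data))) for _ in range(1000)
]
low, high = percentile_interval(boot_medians)

print(f"sample median: {statistics.median(data):.2f}")
print(f"95% percentile interval: [{low:.2f}, {high:.2f}]")
```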

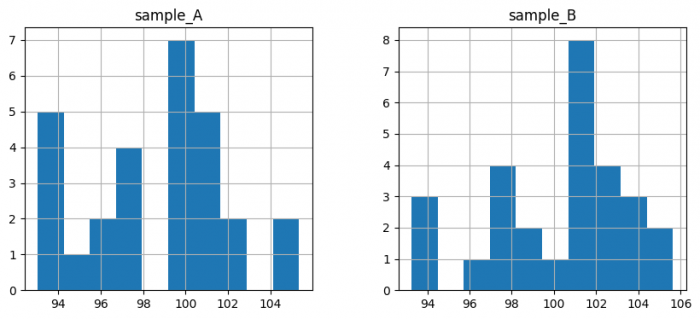

Example 2. Bootstrapping as an alternative to t-test

Bootstrap can be used to analyze the difference between the mean values of two samples.

# generate 2 samples

samples_A = pd.Series(

[100.24, 97.77, 95.56, 99.49, 101.4 , 105.35, 93.33, 93.02,

101.37, 95.66, 93.34, 100.75, 104.93, 97. , 95.46, 100.03,

102.34, 93.23, 97.05, 97.76, 93.63, 100.32, 99.51, 99.31,

102.41, 100.69, 99.67, 100.99],

name='sample_A')

samples_B = pd.Series(

[101.67, 102.27, 97.01, 103.46, 100.76, 101.19, 99.11, 97.59,

101.01, 101.45, 94.3 , 101.55, 96.33, 99.03, 102.33, 97.32,

93.25, 97.17, 101.1 , 102.57, 104.59, 105.63, 93.93, 103.37,

101.62, 100.62, 102.79, 104.19],

name='sample_B')

plt.show()

AB_difference = samples_B.mean() - samples_A.mean()

print(f"Difference of mean values {AB_difference:.2f}") # Out: Difference of mean values 1.63

Let us estimate how likely it is to observe such a difference (1.63 in this case) purely by chance. We will run a bootstrap simulation as follows:

1. Randomly sample with replacement from each group separately. The size of each resample is equal to the size of the original group.

2. Calculate the difference of means between the two created samples.

3. Repeat steps 1-2 n times. (We will choose n as num_samples = 1000.)

4. Compute the p-value as the proportion of bootstrap samples in which the absolute value of the mean difference is greater than or equal to the absolute value of the observed mean difference.

alpha = 0.05 # significance level

num_samples = 1000

count = 0

diffs = []

for i in range(num_samples):

    subsample_A = samples_A.sample(n=len(samples_A), replace=True)

    subsample_B = samples_B.sample(n=len(samples_B), replace=True)

    # calculate difference of means of 2 subsamples

    bootstrap_difference = subsample_B.mean() - subsample_A.mean()

    diffs.append(bootstrap_difference)

for el in diffs:

    if abs(el) >= abs(AB_difference):

        count += 1

pvalue = count / num_samples

print('p-value =', pvalue)

if pvalue < alpha:

    print("Reject H0: mean values do differ significantly")

else:

    print("Accept H0: mean values do not differ significantly")

p-value = 0.514

Accept H0: mean values do not differ significantly

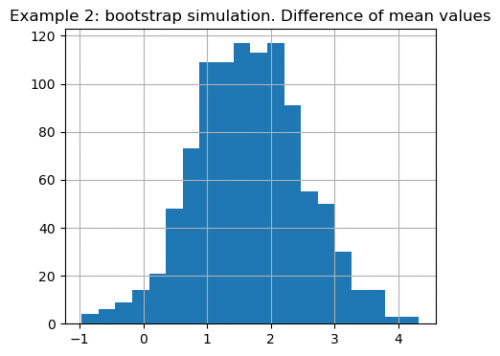

Visualize the resulting distribution of the saved mean differences

pd.Series(diffs).hist(bins=20, figsize=(5, 4))

plt.title(

"Example 2: bootstrap simulation. The difference of mean values of 2 samples")

plt.show()

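This histogram shows the variability of the mean difference that arises from sampling variation. Because each group is resampled from its own data, the bootstrap differences are centered near the observed value of 1.63, so roughly half of them are at least as large as it in absolute value. The histogram therefore describes the uncertainty of the estimated difference rather than its distribution under H0, and the proportion computed above should be read with this in mind.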

t-test

ttest_ind(samples_A.values, samples_B.values)

Out: TtestResult(statistic=-1.7971305016442471, pvalue=0.07790788332728987, df=54.0)
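To make the reported statistic easier to interpret, the sketch below recomputes it by hand with the standard library. It assumes the default behaviour of `ttest_ind`, which is Student's t-test with a pooled (equal) variance; the variable names are chosen for this illustration only. The p-value itself is not recomputed, because that requires the t distribution, which the standard library does not provide.

```python
import math
import statistics

samples_A = [
    100.24, 97.77, 95.56, 99.49, 101.4, 105.35, 93.33, 93.02,
    101.37, 95.66, 93.34, 100.75, 104.93, 97.0, 95.46, 100.03,
    102.34, 93.23, 97.05, 97.76, 93.63, 100.32, 99.51, 99.31,
    102.41, 100.69, 99.67, 100.99]
samples_B = [
    101.67, 102.27, 97.01, 103.46, 100.76, 101.19, 99.11, 97.59,
    101.01, 101.45, 94.3, 101.55, 96.33, 99.03, 102.33, 97.32,
    93.25, 97.17, 101.1, 102.57, 104.59, 105.63, 93.93, 103.37,
    101.62, 100.62, 102.79, 104.19]

n_a, n_b = len(samples_A), len(samples_B)
mean_a, mean_b = statistics.fmean(samples_A), statistics.fmean(samples_B)

# pooled variance: both groups are assumed to share one variance
pooled_var = ((n_a - 1) * statistics.variance(samples_A)
              + (n_b - 1) * statistics.variance(samples_B)) / (n_a + n_b - 2)
standard_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))

t_stat = (mean_a - mean_b) / standard_error
df = n_a + n_b - 2

# t is about -1.797 with df = 54, in line with the scipy result quoted above
print(f"t = {t_stat:.3f}, df = {df}")
```

The sign is negative because the mean of sample A is subtracted first, in the same order in which the samples were passed to `ttest_ind`.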

The p-value of the t-test (0.078) is lower than the p-value from the bootstrap simulation (0.514), but both exceed the significance level of 0.05. Therefore, the difference between the mean values is not statistically significant at this level. From the histogram we can also see that the bootstrap differences range from about -2 to 5, bearing in mind that the mean values of samples A and B are 98.63 and 100.26, respectively.
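A bootstrap p-value is usually easier to compare with a t-test when the resampling is done under the null hypothesis. One common way to do this, shown here only as a hedged sketch and not as the procedure used in this article, is to shift both groups to a common mean before resampling, so that H0 (equal means) holds in the resampled data. The names `shifted_A` and `shifted_B`, the seed and the number of resamples are assumptions for this illustration.

```python
import random
import statistics

samples_A = [
    100.24, 97.77, 95.56, 99.49, 101.4, 105.35, 93.33, 93.02,
    101.37, 95.66, 93.34, 100.75, 104.93, 97.0, 95.46, 100.03,
    102.34, 93.23, 97.05, 97.76, 93.63, 100.32, 99.51, 99.31,
    102.41, 100.69, 99.67, 100.99]
samples_B = [
    101.67, 102.27, 97.01, 103.46, 100.76, 101.19, 99.11, 97.59,
    101.01, 101.45, 94.3, 101.55, 96.33, 99.03, 102.33, 97.32,
    93.25, 97.17, 101.1, 102.57, 104.59, 105.63, 93.93, 103.37,
    101.62, 100.62, 102.79, 104.19]

mean_a, mean_b = statistics.fmean(samples_A), statistics.fmean(samples_B)
observed = mean_b - mean_a

# shift each group so that both have the pooled mean (H0 is then true)
pooled_mean = statistics.fmean(samples_A + samples_B)
shifted_A = [x - mean_a + pooled_mean for x in samples_A]
shifted_B = [x - mean_b + pooled_mean for x in samples_B]

rng = random.Random(0)  # fixed seed only to make the sketch reproducible
num_samples = 2000
count = 0
for _ in range(num_samples):
    boot_A = rng.choices(shifted_A, k=len(shifted_A))
    boot_B = rng.choices(shifted_B, k=len(shifted_B))
    diff = statistics.fmean(boot_B) - statistics.fmean(boot_A)
    # count resamples at least as extreme as the observed difference
    if abs(diff) >= abs(observed):
        count += 1

pvalue = count / num_samples
print(f"observed difference = {observed:.2f}")
print(f"null-centered bootstrap p-value = {pvalue:.3f}")
```

With this variant the bootstrap differences are centered near zero, so the proportion of extreme resamples answers the same question as the t-test p-value.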

Further reading

1. https://medium.com/analytics-vidhya/what-is-bootstrapping-in-machine-learning-777fc44e222a (a simple article, also contains a link to the original article)

2. https://towardsdatascience.com/an-introduction-to-the-bootstrap-method-58bcb51b4d60 (a more mathematical article explaining why bootstrap actually works, has a lot of further links)

3. https://en.wikipedia.org/wiki/Bootstrapping_(statistics)

4. https://pandas.pydata.org/pandas-docs/stable/reference/api/pandas.DataFrame.sample.html

The author of this entry is Igor Kvachenok. Edited by Evgeniya Zakharova.